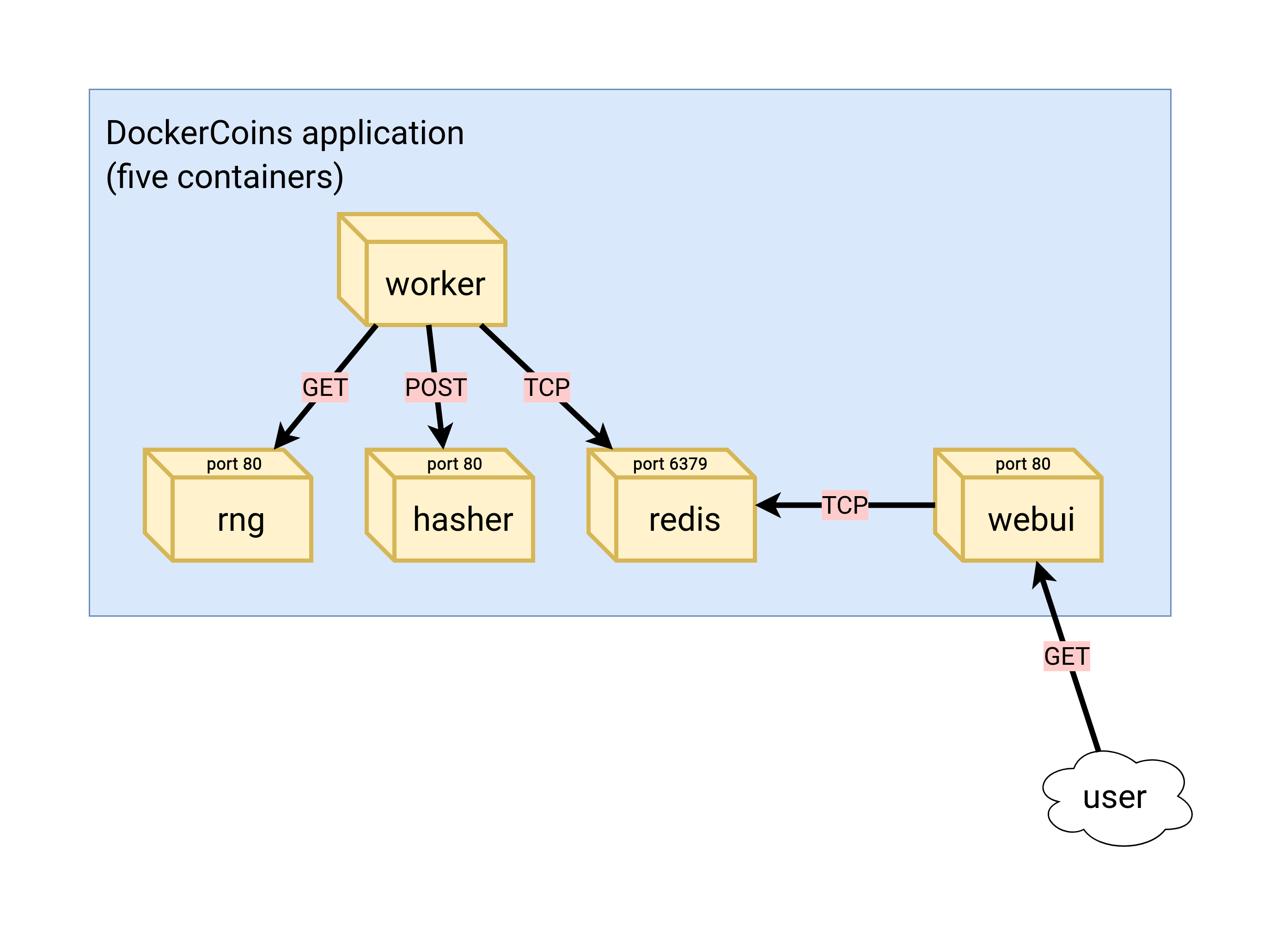

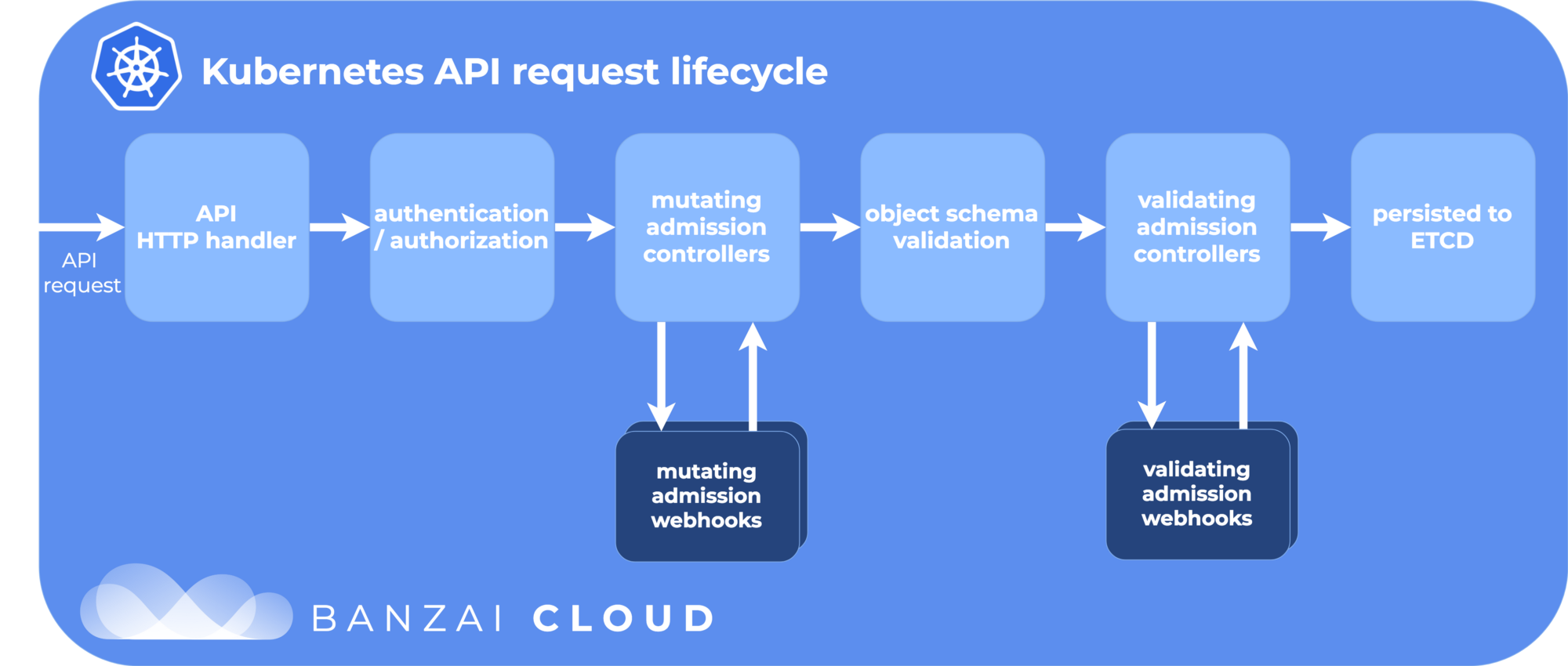

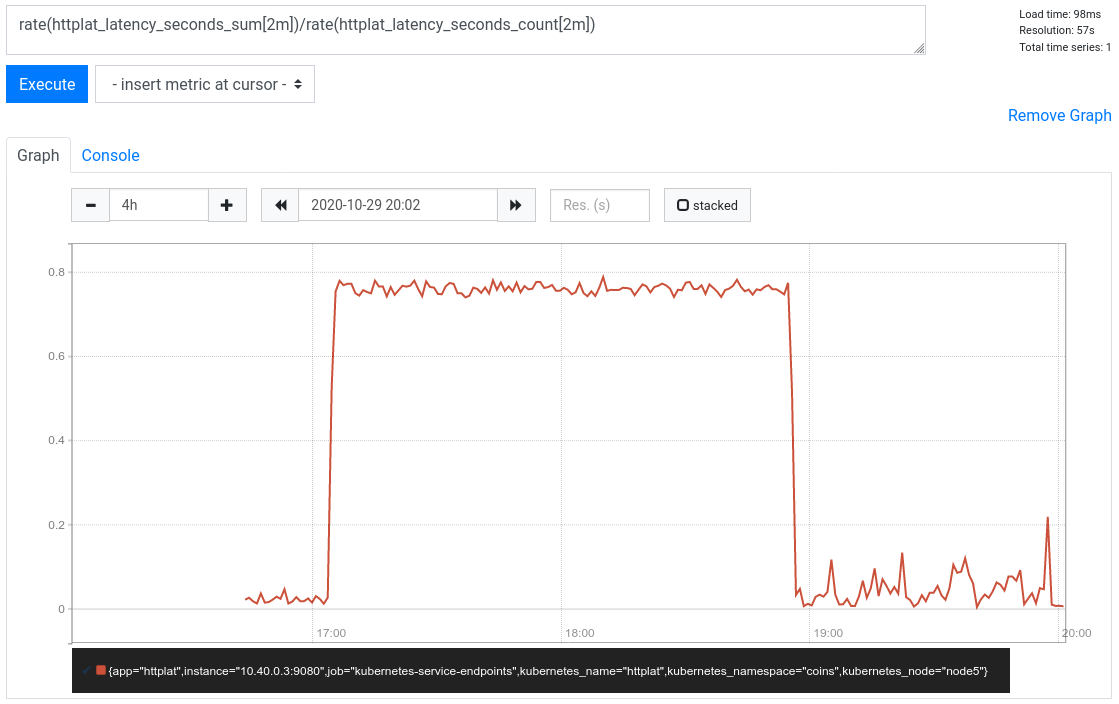

class: title, self-paced

Kubernetes Avancé<br/>

.nav[*Self-paced version*]

.debug[
These slides have been built from commit: af86f36

[shared/title.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/shared/title.md)]

---

class: title, in-person

Kubernetes Avancé<br/><br/><br/>

.footnote[
**Slides[:](https://www.youtube.com/watch?v=h16zyxiwDLY) https://2022-02-enix.container.training/**
]

---

## Introductions

- Hello!

- On stage: Jérôme ([@jpetazzo])

- Backstage: Alexandre, Amy, Antoine, Aurélien (x2), Benji, David, Julien, Kostas, Nicolas, Thibault

- The training will run from 9:30 to 13:00

- There will be a break at (approximately) 11:00

- You ~~should~~ must ask questions! Lots of questions!

- Use [Mattermost](https://highfive.container.training/mattermost) to ask questions, get help, etc.

[@alexbuisine]: https://twitter.com/alexbuisine
[EphemeraSearch]: https://ephemerasearch.com/
[@jpetazzo]: https://twitter.com/jpetazzo
[@s0ulshake]: https://twitter.com/s0ulshake
[Quantgene]: https://www.quantgene.com/

---

## Exercises

- At the end of each day, there is a series of exercises

- To make the most out of the training, please try the exercises!
  (it will help to practice and memorize the content of the day)

- We recommend taking at least one hour to work on the exercises

  (if you understood the content of the day, it will be much faster)

- Each day will start with a quick review of the exercises of the previous day

---

## A brief introduction

- This was initially written by [Jérôme Petazzoni](https://twitter.com/jpetazzo) to support in-person, instructor-led workshops and tutorials

- Credit is also due to [multiple contributors](https://github.com/jpetazzo/container.training/graphs/contributors) — thank you!

- You can also follow along on your own, at your own pace

- We included as much information as possible in these slides

- We recommend having a mentor to help you ...

- ... Or be comfortable spending some time reading the Kubernetes [documentation](https://kubernetes.io/docs/) ...

- ... And looking for answers on [StackOverflow](http://stackoverflow.com/questions/tagged/kubernetes) and other outlets

---

class: self-paced

## Hands on, you shall practice

- Nobody ever became a Jedi by spending their lives reading Wookieepedia

- Likewise, it will take more than merely *reading* these slides to make you an expert

- These slides include *tons* of demos, exercises, and examples

- They assume that you have access to a Kubernetes cluster

- If you are attending a workshop or tutorial:
  <br/>you will be given specific instructions to access your cluster

- If you are doing this on your own:
  <br/>the first chapter will give you various options to get your own cluster

---

## Accessing these slides now

- We recommend that you open these slides in your browser:
  https://2022-02-enix.container.training/

- Use arrows to move to next/previous slide

  (up, down, left, right, page up, page down)

- Type a slide number + ENTER to go to that slide

- The slide number is also visible in the URL bar

  (e.g. .../#123 for slide 123)

---

## Accessing these slides later

- Slides will remain online so you can review them later if needed

  (let's say we'll keep them online at least 1 year, how about that?)

- You can download the slides using that URL:

  https://2022-02-enix.container.training/slides.zip

  (then open the file `4.yml.html`)

- You will find new versions of these slides on:

  https://container.training/

---

## These slides are open source

- You are welcome to use, re-use, share these slides

- These slides are written in Markdown

- The sources of these slides are available in a public GitHub repository:

  https://github.com/jpetazzo/container.training

- Typos? Mistakes? Questions? Feel free to hover over the bottom of the slide ...

.footnote[👇 Try it! The source file will be shown and you can view it on GitHub and fork and edit it.]
---

class: extra-details

## Extra details

- This slide has a little magnifying glass in the top left corner

- This magnifying glass indicates slides that provide extra details

- Feel free to skip them if:

  - you are in a hurry

  - you are new to this and want to avoid cognitive overload

  - you want only the most essential information

- You can review these slides another time if you want, they'll be waiting for you ☺

---

## Chat room

- We've set up a chat room that we will monitor during the workshop

- Don't hesitate to use it to ask questions, or get help, or share feedback

- The chat room will also be available after the workshop

- Join the chat room: [Mattermost](https://highfive.container.training/mattermost)

- Say hi in the chat room!

---

## Pre-requirements

- Be comfortable with the UNIX command line

  - navigating directories

  - editing files

  - a little bit of bash-fu (environment variables, loops)

- Some Docker knowledge

  - `docker run`, `docker ps`, `docker build`

  - ideally, you know how to write a Dockerfile and build it <br/>
    (even if it's a `FROM` line and a couple of `RUN` commands)

- It's totally OK if you are not a Docker expert!
---

class: title

*Tell me and I forget.*
<br/>
*Teach me and I remember.*
<br/>
*Involve me and I learn.*

Misattributed to Benjamin Franklin

[(Probably inspired by Chinese Confucian philosopher Xunzi)](https://www.barrypopik.com/index.php/new_york_city/entry/tell_me_and_i_forget_teach_me_and_i_may_remember_involve_me_and_i_will_lear/)

---

## Hands-on sections

- The whole workshop is hands-on

- We are going to build, ship, and run containers!

- You are invited to reproduce all the demos

- All hands-on sections are clearly identified, like the gray rectangle below

.lab[

- This is the stuff you're supposed to do!

- Go to https://2022-02-enix.container.training/ to view these slides

]

---

class: in-person

## Where are we going to run our containers?

---

class: in-person

## You get a cluster of cloud VMs

- Each person gets a private cluster of cloud VMs (not shared with anybody else)

- They'll remain up for the duration of the workshop

- You should have a little card with login+password+IP addresses

- You can automatically SSH from one VM to another

- The nodes have aliases: `node1`, `node2`, etc.

---

class: in-person

## Why don't we run containers locally?
- Installing this stuff can be hard on some machines

  (32-bit CPU or OS... Laptops without administrator access... etc.)

- *"The whole team downloaded all these container images from the WiFi!
  <br/>... and it went great!"* (Literally no one ever)

- All you need is a computer (or even a phone or tablet!), with:

  - an Internet connection

  - a web browser

  - an SSH client

---

class: in-person

## SSH clients

- On Linux, OS X, FreeBSD... you are probably all set

- On Windows, get one of these:

  - [putty](http://www.putty.org/)
  - Microsoft [Win32 OpenSSH](https://github.com/PowerShell/Win32-OpenSSH/wiki/Install-Win32-OpenSSH)
  - [Git BASH](https://git-for-windows.github.io/)
  - [MobaXterm](http://mobaxterm.mobatek.net/)

- On Android, [JuiceSSH](https://juicessh.com/)
  ([Play Store](https://play.google.com/store/apps/details?id=com.sonelli.juicessh))
  works pretty well

- Nice-to-have: [Mosh](https://mosh.org/) instead of SSH, if your Internet connection tends to lose packets

---

class: in-person, extra-details

## What is this Mosh thing?

*You don't have to use Mosh or even know about it to follow along.
<br/> We're just telling you about it because some of us think it's cool!*

- Mosh is "the mobile shell"

- It is essentially SSH over UDP, with roaming features

- It retransmits packets quickly, so it works great even on lossy connections

  (Like hotel or conference WiFi)

- It has intelligent local echo, so it works great even on high-latency connections

  (Like hotel or conference WiFi)

- It supports transparent roaming when your client IP address changes

  (Like when you hop from hotel to conference WiFi)

---

class: in-person, extra-details

## Using Mosh

- To install it: `(apt|yum|brew) install mosh`

- It has been pre-installed on the VMs that we are using

- To connect to a remote machine: `mosh user@host`

  (It is going to establish an SSH connection, then hand off to UDP)

- It requires UDP ports to be open

  (By default, it uses a UDP port between 60000 and 61000)

---

## WebSSH

- The virtual machines are also accessible via WebSSH

- This can be useful if:

  - you can't install an SSH client on your machine

  - SSH connections are blocked (by firewall or local policy)

- To use WebSSH, connect to the IP address of the remote VM on port 1080

  (each machine runs a WebSSH server)

- Then provide the login and password indicated on your card

---

## Good to know

- WebSSH uses WebSocket

- If you're having connection issues, try to disable your HTTP proxy

  (many HTTP proxies can't handle WebSocket properly)

- Most keyboard shortcuts should work, except Ctrl-W

  (as it is hardwired by the browser to "close this tab")

---
class: in-person

## Connecting to our lab environment

.lab[

- Log into the first VM (`node1`) with your SSH client:
  ```bash
  ssh `user`@`A.B.C.D`
  ```

  (Replace `user` and `A.B.C.D` with the user and IP address provided to you)

]

You should see a prompt looking like this:
```
[A.B.C.D] (...) user@node1 ~ $
```
If anything goes wrong, ask for help!

---

class: in-person

## `tailhist`

- The shell history of the instructor is available online in real time

- Note the IP address of the instructor's virtual machine (A.B.C.D)

- Open http://A.B.C.D:1088 in your browser and you should see the history

- The history is updated in real time

  (using a WebSocket connection)

- It should be green when the WebSocket is connected

  (if it turns red, reloading the page should fix it)

---

## Doing or re-doing the workshop on your own?

- Use something like
  [Play-With-Docker](http://play-with-docker.com/) or
  [Play-With-Kubernetes](https://training.play-with-kubernetes.com/)

  Zero setup effort; but environments are short-lived and might have limited resources

- Create your own cluster (local or cloud VMs)

  Small setup effort; small cost; flexible environments

- Create a bunch of clusters for you and your friends
  ([instructions](https://github.com/jpetazzo/container.training/tree/master/prepare-vms))

  Bigger setup effort; ideal for group training

---

## For a consistent Kubernetes experience ...
- If you are using your own Kubernetes cluster, you can use [jpetazzo/shpod](https://github.com/jpetazzo/shpod)

- `shpod` provides a shell running in a pod on your own cluster

- It comes with many tools pre-installed (helm, stern...)

- These tools are used in many demos and exercises in these slides

- `shpod` also gives you completion and a fancy prompt

- It can also be used as an SSH server if needed

---

class: self-paced

## Get your own Docker nodes

- If you already have some Docker nodes: great!

- If not: let's get some, thanks to Play-With-Docker

.lab[

- Go to http://www.play-with-docker.com/

- Log in

- Create your first node

]

You will need a Docker ID to use Play-With-Docker.

(Creating a Docker ID is free.)

---

## We will (mostly) interact with node1 only

*These remarks apply only when using multiple nodes, of course.*

- Unless instructed otherwise, **all commands must be run from the first VM, `node1`**

- We will only check out/copy the code on `node1`

- During normal operations, we do not need access to the other nodes

- If we had to troubleshoot issues, we would use a combination of:

  - SSH (to access system logs, daemon status...)

  - Docker API (to check running containers and container engine status)

---

## Terminals

Once in a while, the instructions will say:
<br/>"Open a new terminal."

There are multiple ways to do this:

- create a new window or tab on your machine, and SSH into the VM;

- use screen or tmux on the VM and open a new window from there.

You are welcome to use the method that you feel the most comfortable with.
---

## Tmux cheat sheet

[Tmux](https://en.wikipedia.org/wiki/Tmux) is a terminal multiplexer like `screen`.

*You don't have to use it or even know about it to follow along.
<br/>
But some of us like to use it to switch between terminals.
<br/>
It has been preinstalled on your workshop nodes.*

- Ctrl-b c → create a new window
- Ctrl-b n → go to next window
- Ctrl-b p → go to previous window
- Ctrl-b " → split the current pane top/bottom
- Ctrl-b % → split the current pane left/right
- Ctrl-b Alt-1 → rearrange panes in columns
- Ctrl-b Alt-2 → rearrange panes in rows
- Ctrl-b arrows → move between panes
- Ctrl-b d → detach session
- tmux attach → re-attach to session

---

name: toc-part-1

## Part 1

- [Our demo apps](#toc-our-demo-apps)
- [Network policies](#toc-network-policies)
- [Authentication and authorization](#toc-authentication-and-authorization)
- [Sealed Secrets](#toc-sealed-secrets)
- [cert-manager](#toc-cert-manager)
- [Ingress and TLS certificates](#toc-ingress-and-tls-certificates)
- [Exercise — Sealed Secrets](#toc-exercise--sealed-secrets)

---

name: toc-part-2

## Part 2

- [Extending the Kubernetes API](#toc-extending-the-kubernetes-api)
- [Custom Resource Definitions](#toc-custom-resource-definitions)
- [Operators](#toc-operators)
- [Dynamic Admission Control](#toc-dynamic-admission-control)
- [Policy Management with Kyverno](#toc-policy-management-with-kyverno)
- [Exercise — Generating Ingress With Kyverno](#toc-exercise--generating-ingress-with-kyverno)

---

name: toc-part-3

## Part 3

- [Resource Limits](#toc-resource-limits)
- [Defining min, max, and default resources](#toc-defining-min-max-and-default-resources)
- [Namespace quotas](#toc-namespace-quotas)
- [Limiting resources in practice](#toc-limiting-resources-in-practice)
- [Checking Node and Pod resource usage](#toc-checking-node-and-pod-resource-usage)
- [Cluster sizing](#toc-cluster-sizing)
- [The Horizontal Pod Autoscaler](#toc-the-horizontal-pod-autoscaler)
- [API server internals](#toc-api-server-internals)
- [The Aggregation Layer](#toc-the-aggregation-layer)
- [Scaling with custom metrics](#toc-scaling-with-custom-metrics)

---

name: toc-part-4

## Part 4

- [Stateful sets](#toc-stateful-sets)
- [Running a Consul cluster](#toc-running-a-consul-cluster)
- [PV, PVC, and Storage Classes](#toc-pv-pvc-and-storage-classes)
- [OpenEBS](#toc-openebs-)
- [Stateful failover](#toc-stateful-failover)
- [Designing an operator](#toc-designing-an-operator)
- [Writing a tiny operator](#toc-writing-an-tiny-operator)
- [Owners and dependents](#toc-owners-and-dependents)
- [Events](#toc-events)
- [Finalizers](#toc-finalizers)

---

## Exercise — Sealed Secrets

- Install the sealed secrets operator

- Create a secret, seal it, load it in the cluster

- Check that sealed secrets are "locked"

  (can't be used with a different name, namespace, or cluster)

- Bonus: migrate a sealing key to another cluster

- Set RBAC permissions to grant selective access to secrets

---

## Exercise — Generating Ingress With Kyverno

- When a Service gets created, automatically generate an Ingress

- Step 1: expose all services with a hard-coded domain name

- Step 2: only expose services that have a port named `http`

- Step 3: configure the domain name with a per-namespace ConfigMap
---

name: toc-our-demo-apps
class: title

Our demo apps

.nav[
[Previous part](#toc-)
|
[Back to table of contents](#toc-part-1)
|
[Next part](#toc-network-policies)
]

---

# Our demo apps

- We are going to use a few demo apps for demos and labs

- Let's get acquainted with them before we dive in!

---

## The `color` app

- Image name: `jpetazzo/color`, `ghcr.io/jpetazzo/color`

- Available for linux/amd64, linux/arm64, linux/arm/v7 platforms

- HTTP server listening on port 80

- Serves a web page with a single line of text

- The background of the page is derived from the hostname

  (e.g. if the hostname is `blue-xyz-123`, the background is `blue`)

- The web page is "curl-friendly"

  (it contains `\r` characters to hide HTML tags and declutter the output)

---

## The `color` app in action

- Create a Deployment called `blue` using image `jpetazzo/color`

- Expose that Deployment with a Service

- Connect to the Service with a web browser

- Connect to the Service with `curl`

---

## Dockercoins

- App with 5 microservices:

  - `worker` (runs an infinite loop connecting to the other services)

  - `rng` (web service; generates random numbers)

  - `hasher` (web service; computes SHA sums)

  - `redis` (holds a single counter incremented by the `worker` at each loop)

  - `webui` (web app; displays a graph showing the rate of increase of the counter)

- Uses a mix of Node, Python, Ruby

- Very simple
  components (approx. 50 lines of code for the most complicated one)

---

## Deploying Dockercoins

- Pre-built images available as `dockercoins/<component>:v0.1`

  (e.g. `dockercoins/worker:v0.1`)

- Containers "discover" each other through DNS

  (e.g. worker connects to `http://hasher/`)

- A Kubernetes YAML manifest is available in *the* repo

---

## The repository

- When we refer to "the" repository, it means:

  https://github.com/jpetazzo/container.training

- It hosts slides, demo apps, deployment scripts...

- All the sample commands, labs, etc. will assume that it's available in:

  `~/container.training`

- Let's clone the repo in our environment!
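
The DNS-based discovery mentioned in "Deploying Dockercoins" comes from Services: when a Service named `hasher` exists in the worker's namespace, the name `hasher` resolves to that Service's ClusterIP. Here is a minimal sketch of such a Service, assuming the `hasher` pods carry the label `app=hasher` and listen on port 80; it is an illustration, not necessarily how `dockercoins.yaml` defines it:

```yaml
# Hypothetical sketch: a Service named "hasher", so that http://hasher/
# resolves from pods in the same namespace
apiVersion: v1
kind: Service
metadata:
  name: hasher        # the Service name is what pods resolve through DNS
spec:
  selector:
    app: hasher       # assumed label on the hasher pods
  ports:
  - port: 80          # http://hasher/ implies port 80
    targetPort: 80    # assumed port the hasher container listens on
```

Pods in other namespaces would need a longer name, such as `hasher.<namespace>` or `hasher.<namespace>.svc.cluster.local` (assuming the default `cluster.local` cluster domain).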
---

## Cloning the repo

.lab[

- There is a convenient shortcut to clone the repository:
  ```bash
  git clone https://container.training
  ```

]

While the repository clones, fork it, star it ~~subscribe and hit the bell!~~

---

## Running Dockercoins

- All the Kubernetes manifests are in the `k8s` subdirectory

- This directory has a `dockercoins.yaml` manifest

.lab[

- Deploy Dockercoins:
  ```bash
  kubectl apply -f ~/container.training/k8s/dockercoins.yaml
  ```

]

- The `webui` is exposed with a `NodePort` service

- Connect to it (through the `NodePort` or `port-forward`)

- Note: it might take a minute for the worker to start

---

## Details

- If the `worker` Deployment is scaled up, the graph should go up

- The `rng` Service is meant to be a bottleneck

  (capping the graph to 10/second until `rng` is scaled up)

- There is artificial latency in the different services

  (so that the app doesn't consume CPU/RAM/network)

---

## More colors

- The repository also contains a `rainbow.yaml` manifest

- It creates three namespaces (`blue`, `green`, `red`)

- In each namespace, there is an instance of the `color` app

  (we can use that later to do *literal* blue-green deployment!)
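
The steps listed in "The `color` app in action" can also be written declaratively. Here is a minimal sketch of what one namespace of a rainbow-like setup could contain. It only uses facts from these slides (image `jpetazzo/color`, HTTP on port 80); it is not the actual content of `rainbow.yaml`, and the `app: blue` label and the container name `color` are assumptions:

```yaml
# Hypothetical sketch of the "blue" namespace; not the actual rainbow.yaml
apiVersion: v1
kind: Namespace
metadata:
  name: blue
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: blue
  namespace: blue
spec:
  replicas: 1
  selector:
    matchLabels:
      app: blue          # assumed label, must match the pod template below
  template:
    metadata:
      labels:
        app: blue
    spec:
      containers:
      - name: color      # assumed container name
        image: jpetazzo/color
        ports:
        - containerPort: 80   # the color app listens on port 80
---
apiVersion: v1
kind: Service
metadata:
  name: blue
  namespace: blue
spec:
  selector:
    app: blue
  ports:
  - port: 80
    targetPort: 80
```

Because the Deployment is named `blue`, its pods get names (and therefore hostnames) like `blue-<hash>-<suffix>`, which is what gives the page its blue background.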
---

name: toc-network-policies
class: title

Network policies

.nav[
[Previous part](#toc-our-demo-apps)
|
[Back to table of contents](#toc-part-1)
|
[Next part](#toc-authentication-and-authorization)
]

---

# Network policies

- Namespaces help us to *organize* resources

- Namespaces do not provide isolation

- By default, every pod can contact every other pod

- By default, every service accepts traffic from anyone

- If we want this to be different, we need *network policies*

---

## What's a network policy?

A network policy is defined by the following things.

- A *pod selector* indicating which pods it applies to

  e.g.: "all pods in namespace `blue` with the label `zone=internal`"

- A list of *ingress rules* indicating which inbound traffic is allowed

  e.g.: "TCP connections to ports 8000 and 8080 coming from pods with label `zone=dmz`,
  and from the external subnet 4.42.6.0/24, except 4.42.6.5"

- A list of *egress rules* indicating which outbound traffic is allowed

A network policy can provide ingress rules, egress rules, or both.

---

## How do network policies apply?

- A pod can be "selected" by any number of network policies

- If a pod isn't selected by any network policy, then its traffic is unrestricted

  (In other words: in the absence of network policies, all traffic is allowed)

- If a pod is selected by at least one network policy, then all traffic is blocked ...

  ...
  unless it is explicitly allowed by one of these network policies

---

class: extra-details

## Traffic filtering is flow-oriented

- Network policies deal with *connections*, not individual packets

- Example: to allow HTTP (80/tcp) connections to pod A, you only need an ingress rule

  (You do not need a matching egress rule to allow response traffic to go through)

- This also applies to UDP traffic

  (Allowing DNS traffic can be done with a single rule)

- Network policy implementations use stateful connection tracking

---

## Pod-to-pod traffic

- Connections from pod A to pod B have to be allowed by both pods:

  - pod A has to be unrestricted, or allow the connection as an *egress* rule

  - pod B has to be unrestricted, or allow the connection as an *ingress* rule

- As a consequence: if a network policy restricts traffic going from/to a pod,
  <br/>
  the restriction cannot be overridden by a network policy selecting another pod

- This prevents an entity managing network policies in namespace A (but without permission to do so in namespace B) from adding network policies giving them access to namespace B

---

## The rationale for network policies

- In network security, it is generally considered better to "deny all, then allow selectively"

  (The other approach, "allow all, then block selectively", makes it too easy to leave holes)

- As soon as one network policy selects a pod, the pod enters this "deny all" logic

- Further network policies can open additional access

- Good network policies should be scoped as precisely as possible

- In particular: make sure that the selector is not too broad

  (Otherwise, you end up affecting pods that were otherwise well
  secured)

---

## Our first network policy

This is our game plan:

- run a web server in a pod

- create a network policy to block all access to the web server

- create another network policy to allow access only from specific pods

---

## Running our test web server

.lab[

- Let's use the `nginx` image:
  ```bash
  kubectl create deployment testweb --image=nginx
  ```

- Find out the IP address of the pod (the first command displays it, the second one stores it in `$IP`, which we use below):
  ```bash
  kubectl get pods -o wide -l app=testweb
  IP=$(kubectl get pods -l app=testweb -o json | jq -r '.items[0].status.podIP')
  ```

- Check that we can connect to the server:
  ```bash
  curl $IP
  ```

]

The `curl` command should show us the "Welcome to nginx!" page.

---

## Adding a very restrictive network policy

- The policy will select pods with the label `app=testweb`

- It will specify an empty list of ingress rules (matching nothing)

.lab[

- Apply the policy in this YAML file:
  ```bash
  kubectl apply -f ~/container.training/k8s/netpol-deny-all-for-testweb.yaml
  ```

- Check if we can still access the server:
  ```bash
  curl $IP
  ```

]

The `curl` command should now time out.
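
The policy we just applied only restricts *incoming* traffic. When a manifest omits `policyTypes`, Kubernetes sets it to `Ingress`, and adds `Egress` only if the manifest contains egress rules; so `testweb` can still open outbound connections. If we also wanted to restrict egress, the flow-oriented filtering described earlier means that letting the pod resolve names takes a single rule. Here is a sketch that is not part of the lab; the policy name is hypothetical, and it assumes cluster DNS is reached on port 53:

```yaml
# Hypothetical sketch (not part of the lab): only allow DNS egress from testweb
kind: NetworkPolicy
apiVersion: networking.k8s.io/v1
metadata:
  name: allow-dns-egress-for-testweb   # hypothetical name
spec:
  podSelector:
    matchLabels:
      app: testweb
  policyTypes:
  - Egress            # spelled out: this policy only covers outbound traffic
  egress:
  - ports:            # no "to" field: any destination, but only on port 53
    - protocol: UDP
      port: 53
    - protocol: TCP
      port: 53        # DNS falls back to TCP for large responses
```

The DNS replies come back without any extra ingress rule, because the connection was allowed when it was opened.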
---

## Looking at the network policy

This is the file that we applied:

```yaml
kind: NetworkPolicy
apiVersion: networking.k8s.io/v1
metadata:
  name: deny-all-for-testweb
spec:
  podSelector:
    matchLabels:
      app: testweb
  ingress: []
```

---

## Allowing connections only from specific pods

- We want to allow traffic from pods with the label `run=testcurl`

- Reminder: this label is automatically applied when we do `kubectl run testcurl ...`

.lab[

- Apply another policy:
  ```bash
  kubectl apply -f ~/container.training/k8s/netpol-allow-testcurl-for-testweb.yaml
  ```

]

---

## Looking at the network policy

This is the second file that we applied:

```yaml
kind: NetworkPolicy
apiVersion: networking.k8s.io/v1
metadata:
  name: allow-testcurl-for-testweb
spec:
  podSelector:
    matchLabels:
      app: testweb
  ingress:
  - from:
    - podSelector:
        matchLabels:
          run: testcurl
```

---

## Testing the network policy

- Let's create pods with, and without, the required label

.lab[

- Try to connect to testweb from a pod with the `run=testcurl` label:
  ```bash
  kubectl run testcurl --rm -i --image=centos -- curl -m3 $IP
  ```

- Try to connect to testweb with a different label:
  ```bash
  kubectl run testkurl --rm -i --image=centos -- curl -m3 $IP
  ```

]

The first command will work (and show the "Welcome to nginx!" page).

The second command will fail and time out after 3 seconds.

(The timeout is obtained with the `-m3` option.)
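
The rules in this lab only use pod selectors. The example given in "What's a network policy?" (pods labeled `zone=internal` in namespace `blue`, accepting TCP connections on ports 8000 and 8080 from pods labeled `zone=dmz` and from 4.42.6.0/24 except 4.42.6.5) could be sketched as follows; the policy name is hypothetical:

```yaml
# Sketch of the "What's a network policy?" example; the name is hypothetical
kind: NetworkPolicy
apiVersion: networking.k8s.io/v1
metadata:
  name: allow-dmz-to-internal
  namespace: blue
spec:
  podSelector:           # which pods this policy applies to
    matchLabels:
      zone: internal
  ingress:
  - from:                # entries are alternatives: any one of them matches
    - podSelector:       # pods in the policy's own namespace (blue)
        matchLabels:
          zone: dmz
    - ipBlock:
        cidr: 4.42.6.0/24
        except:
        - 4.42.6.5/32    # except entries are CIDRs too
    ports:               # applies to every source listed in "from"
    - protocol: TCP
      port: 8000
    - protocol: TCP
      port: 8080
```

Note that a `podSelector` inside `from` only matches pods in the same namespace as the policy; reaching across namespaces requires a `namespaceSelector`.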
---

## An important warning

- Some network plugins only have partial support for network policies

- For instance, Weave added support for egress rules [in version 2.4](https://github.com/weaveworks/weave/pull/3313) (released in July 2018)

- It only added support for `ipBlock` later, [in version 2.5](https://github.com/weaveworks/weave/pull/3367) (released in Nov 2018)

- Unsupported features might be silently ignored

  (Making you believe that you are secure, when you're not)

---

## Network policies, pods, and services

- Network policies apply to *pods*

- A *service* can select multiple pods

  (And load balance traffic across them)

- It is possible that we can connect to some pods, but not to others

  (Because of how network policies have been defined for these pods)

- In that case, connections to the service will randomly pass or fail

  (Depending on whether the connection was sent to a pod that we have access to or not)

---

## Network policies and namespaces

- A good strategy is to isolate a namespace, so that:

  - all the pods in the namespace can communicate together

  - other namespaces cannot access the pods

  - external access has to be enabled explicitly

- Let's see what this would look like for the DockerCoins app!

---

## Network policies for DockerCoins

- We are going to apply two policies

- The first policy will prevent traffic from other namespaces

- The second policy will allow traffic to the `webui` pods

- That's all we need for that app!
.debug[[k8s/netpol.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/netpol.md)] --- ## Blocking traffic from other namespaces This policy selects all pods in the current namespace. It allows traffic only from pods in the current namespace. (An empty `podSelector` means "all pods.") ```yaml kind: NetworkPolicy apiVersion: networking.k8s.io/v1 metadata: name: deny-from-other-namespaces spec: podSelector: {} ingress: - from: - podSelector: {} ``` .debug[[k8s/netpol.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/netpol.md)] --- ## Allowing traffic to `webui` pods This policy selects all pods with label `app=webui`. It allows traffic from any source. (An empty `from` field means "all sources.") ```yaml kind: NetworkPolicy apiVersion: networking.k8s.io/v1 metadata: name: allow-webui spec: podSelector: matchLabels: app: webui ingress: - from: [] ``` .debug[[k8s/netpol.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/netpol.md)] --- ## Applying both network policies - Both network policies are declared in the file [k8s/netpol-dockercoins.yaml](https://github.com/jpetazzo/container.training/tree/master/k8s/netpol-dockercoins.yaml) .lab[ - Apply the network policies: ```bash kubectl apply -f ~/container.training/k8s/netpol-dockercoins.yaml ``` - Check that we can still access the web UI from outside <br/> (and that the app is still working correctly!) - Check that we can't connect anymore to `rng` or `hasher` through their ClusterIP ] Note: using `kubectl proxy` or `kubectl port-forward` allows us to connect regardless of existing network policies. This allows us to debug and troubleshoot easily, without having to poke holes in our firewall. 
.debug[[k8s/netpol.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/netpol.md)] --- ## Cleaning up our network policies - The network policies that we have installed block all traffic to the default namespace - We should remove them, otherwise further demos and exercises will fail! .lab[ - Remove all network policies: ```bash kubectl delete networkpolicies --all ``` ] .debug[[k8s/netpol.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/netpol.md)] --- ## Protecting the control plane - Should we add network policies to block unauthorized access to the control plane? (etcd, API server, etc.) -- - At first, it seems like a good idea ... -- - But it *shouldn't* be necessary: - not all network plugins support network policies - the control plane is secured by other methods (mutual TLS, mostly) - the code running in our pods can reasonably expect to contact the API <br/> (and it can do so safely thanks to the API permission model) - If we block access to the control plane, we might disrupt legitimate code - ...Without necessarily improving security .debug[[k8s/netpol.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/netpol.md)] --- ## Tools and resources - [Cilium Network Policy Editor](https://editor.cilium.io/) - [Tufin Network Policy Viewer](https://orca.tufin.io/netpol/) - Two resources by [Ahmet Alp Balkan](https://ahmet.im/): - a [very good talk about network policies](https://www.youtube.com/watch?list=PLj6h78yzYM2P-3-xqvmWaZbbI1sW-ulZb&v=3gGpMmYeEO8) at KubeCon North America 2017 - a repository of [ready-to-use recipes](https://github.com/ahmetb/kubernetes-network-policy-recipes) for network policies .debug[[k8s/netpol.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/netpol.md)] --- ## Documentation - As always, the [Kubernetes documentation](https://kubernetes.io/docs/concepts/services-networking/network-policies/) is a good starting point - 
The API documentation has a lot of detail about the format of various objects: <!-- ##VERSION## --> - [NetworkPolicy](https://kubernetes.io/docs/reference/generated/kubernetes-api/v1.20/#networkpolicy-v1-networking-k8s-io) - [NetworkPolicySpec](https://kubernetes.io/docs/reference/generated/kubernetes-api/v1.20/#networkpolicyspec-v1-networking-k8s-io) - [NetworkPolicyIngressRule](https://kubernetes.io/docs/reference/generated/kubernetes-api/v1.20/#networkpolicyingressrule-v1-networking-k8s-io) - etc. ??? :EN:- Isolating workloads with Network Policies :FR:- Isolation réseau avec les *network policies* .debug[[k8s/netpol.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/netpol.md)] --- class: pic .interstitial[] --- name: toc-authentication-and-authorization class: title Authentication and authorization .nav[ [Previous part](#toc-network-policies) | [Back to table of contents](#toc-part-1) | [Next part](#toc-sealed-secrets) ] .debug[(automatically generated title slide)] --- # Authentication and authorization - In this section, we will: - define authentication and authorization - explain how they are implemented in Kubernetes - talk about tokens, certificates, service accounts, RBAC ... - But first: why do we need all this? .debug[[k8s/authn-authz.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/authn-authz.md)] --- ## The need for fine-grained security - The Kubernetes API should only be available for identified users - we don't want "guest access" (except in very rare scenarios) - we don't want strangers to use our compute resources, delete our apps ... 
- our keys and passwords should not be exposed to the public - Users will often have different access rights - cluster admin (similar to UNIX "root") can do everything - developer might access specific resources, or a specific namespace - supervision might have read-only access to *most* resources .debug[[k8s/authn-authz.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/authn-authz.md)] --- ## Example: custom HTTP load balancer - Let's imagine that we have a custom HTTP load balancer for multiple apps - Each app has its own *Deployment* resource - By default, the apps are "sleeping" and scaled to zero - When a request comes in, the corresponding app gets woken up - After some inactivity, the app is scaled down again - This HTTP load balancer needs API access (to scale up/down) - What if *a wild vulnerability appears*? .debug[[k8s/authn-authz.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/authn-authz.md)] --- ## Consequences of vulnerability - If the HTTP load balancer has the same API access as we do: *full cluster compromise (easy data leak, cryptojacking...)* - If the HTTP load balancer has `update` permissions on the Deployments: *defacement (easy), MITM / impersonation (medium to hard)* - If the HTTP load balancer only has permission to `scale` the Deployments: *denial-of-service* - All these outcomes are bad, but some are worse than others .debug[[k8s/authn-authz.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/authn-authz.md)] --- ## Definitions - Authentication = verifying the identity of a person On a UNIX system, we can authenticate with login+password, SSH keys ... - Authorization = listing what they are allowed to do On a UNIX system, this can include file permissions, sudoer entries ... - Sometimes abbreviated as "authn" and "authz" - In good modular systems, these things are decoupled (so we can e.g.
change a password or SSH key without having to reset access rights) .debug[[k8s/authn-authz.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/authn-authz.md)] --- ## Authentication in Kubernetes - When the API server receives a request, it tries to authenticate it (it examines headers, certificates... anything available) - Many authentication methods are available and can be used simultaneously (we will see them on the next slide) - It's the job of the authentication method to produce: - the user name - the user ID - a list of groups - The API server doesn't interpret these; that'll be the job of *authorizers* .debug[[k8s/authn-authz.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/authn-authz.md)] --- ## Authentication methods - TLS client certificates (that's the default for clusters provisioned with `kubeadm`) - Bearer tokens (a secret token in the HTTP headers of the request) - [HTTP basic auth](https://en.wikipedia.org/wiki/Basic_access_authentication) (carrying user and password in an HTTP header; [removed in Kubernetes 1.19](https://github.com/kubernetes/kubernetes/pull/89069)) - Authentication proxy (sitting in front of the API and setting trusted headers) .debug[[k8s/authn-authz.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/authn-authz.md)] --- ## Anonymous requests - If any authentication method *rejects* a request, it's denied (`401 Unauthorized` HTTP code) - If a request is neither rejected nor accepted by anyone, it's anonymous - the user name is `system:anonymous` - the list of groups is `[system:unauthenticated]` - By default, the anonymous user can't do anything (that's what you get if you just `curl` the Kubernetes API) .debug[[k8s/authn-authz.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/authn-authz.md)] --- ## Authentication with TLS certificates - Enabled in almost all Kubernetes deployments - The user name is
indicated by the `CN` in the client certificate - The groups are indicated by the `O` fields in the client certificate - From the point of view of the Kubernetes API, users do not exist (i.e. there is no resource with `kind: User`) - The Kubernetes API can be set up to use your custom CA to validate client certs .debug[[k8s/authn-authz.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/authn-authz.md)] --- class: extra-details ## Authentication for kubelet - In most clusters, kubelets authenticate using certificates (`O=system:nodes`, `CN=system:node:name-of-the-node`) - The Kubernetes API can act as a CA (by wrapping an X509 CSR into a CertificateSigningRequest resource) - This enables kubelets to renew their own certificates - It can also be used to issue user certificates (but it lacks flexibility; e.g. validity can't be customized) .debug[[k8s/authn-authz.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/authn-authz.md)] --- ## User certificates in practice - The Kubernetes API server does not support certificate revocation (see issue [#18982](https://github.com/kubernetes/kubernetes/issues/18982)) - As a result, we don't have an easy way to terminate someone's access (if their key is compromised, or they leave the organization) - Issue short-lived certificates if you use them to authenticate users! (short-lived = a few hours) - This can be facilitated by e.g. Vault, cert-manager... .debug[[k8s/authn-authz.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/authn-authz.md)] --- ## What if a certificate is compromised? - Option 1: wait for the certificate to expire (which is why short-lived certs are convenient!) - Option 2: remove access from that certificate's user and groups - if that user was `bob.smith`, create a new user `bob.smith.2` - if Bob was in group `dev`, create a new group `dev.2` - let's agree that this is not a great solution!
- Option 3: re-create a new CA and re-issue all certificates - let's agree that this is an even worse solution! .debug[[k8s/authn-authz.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/authn-authz.md)] --- ## Authentication with tokens - Tokens are passed as HTTP headers: `Authorization: Bearer and-then-here-comes-the-token` - Tokens can be validated through a number of different methods: - static tokens hard-coded in a file on the API server - [bootstrap tokens](https://kubernetes.io/docs/reference/access-authn-authz/bootstrap-tokens/) (special case to create a cluster or join nodes) - [OpenID Connect tokens](https://kubernetes.io/docs/reference/access-authn-authz/authentication/#openid-connect-tokens) (to delegate authentication to compatible OAuth2 providers) - service accounts (these deserve more details, coming right up!) .debug[[k8s/authn-authz.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/authn-authz.md)] --- ## Service accounts - A service account is a user that exists in the Kubernetes API (it is visible with e.g. `kubectl get serviceaccounts`) - Service accounts can therefore be created / updated dynamically (they don't require hand-editing a file and restarting the API server) - A service account is associated with a set of secrets (the kind that you can view with `kubectl get secrets`) - Service accounts are generally used to grant permissions to applications, services... (as opposed to humans) .debug[[k8s/authn-authz.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/authn-authz.md)] --- class: extra-details ## Checking our authentication method - Let's check our kubeconfig file - Do we have a certificate, a token, or something else? 
.debug[[k8s/authn-authz.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/authn-authz.md)] --- class: extra-details ## Inspecting a certificate If we have a certificate, let's use the following command: ```bash kubectl config view \ --raw \ -o json \ | jq -r .users[0].user[\"client-certificate-data\"] \ | openssl base64 -d -A \ | openssl x509 -text \ | grep Subject: ``` This command will show the `CN` and `O` fields for our certificate. .debug[[k8s/authn-authz.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/authn-authz.md)] --- class: extra-details ## Breaking down the command - `kubectl config view` shows the Kubernetes user configuration - `--raw` includes certificate information (which shows as REDACTED otherwise) - `-o json` outputs the information in JSON format - `| jq ...` extracts the field with the user certificate (in base64) - `| openssl base64 -d -A` decodes the base64 format (now we have a PEM file) - `| openssl x509 -text` parses the certificate and outputs it as plain text - `| grep Subject:` shows us the line that interests us → We are user `kubernetes-admin`, in group `system:masters`. (We will see later how and why this gives us the permissions that we have.) .debug[[k8s/authn-authz.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/authn-authz.md)] --- class: extra-details ## Inspecting a token If we have a token, let's use the following command: ```bash kubectl config view \ --raw \ -o json \ | jq -r .users[0].user.token \ | cut -d. -f2 \ | tr '_-' '/+' \ | base64 -d 2>/dev/null \ | jq . ``` If our token is a JWT / OIDC token, this command will show its content. (The token is stored as-is in the kubeconfig; `cut` keeps the payload segment, `tr` maps the base64url alphabet used by JWTs back to standard base64, and `2>/dev/null` hides the warning that `base64` prints about the missing `=` padding.)
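JWT segments are base64url-encoded with their trailing `=` padding stripped (RFC 7515), and plain `base64 -d` does not accept that form. The sketch below is a more careful decoder. `jwt_payload` is a hypothetical helper, and the sketch assumes GNU `base64`. It only prints the payload and does not verify the signature, so use it for inspection only.

```bash
# jwt_payload (hypothetical helper): print the decoded payload (2nd segment)
# of a JWT passed as $1 or on stdin. Does NOT verify the signature.
jwt_payload() {
  local token payload
  if [ "$#" -gt 0 ]; then token=$1; else token=$(cat); fi
  # Take the payload segment and map the base64url alphabet back to base64.
  payload=$(printf '%s' "$token" | cut -d. -f2 | tr '_-' '/+')
  # Restore the '=' padding so that the length is a multiple of 4.
  while [ $(( ${#payload} % 4 )) -ne 0 ]; do
    payload="$payload="
  done
  printf '%s' "$payload" | base64 -d
}
```

Usage: `kubectl config view --raw -o json | jq -r .users[0].user.token | jwt_payload | jq .`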
.debug[[k8s/authn-authz.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/authn-authz.md)] --- class: extra-details ## Other authentication methods - Other types of tokens - these tokens are typically shorter than JWT or OIDC tokens - it is generally not possible to extract information from them - Plugins - some clusters use external `exec` plugins - these plugins typically use API keys to generate or obtain tokens - example: the AWS EKS authenticator works this way .debug[[k8s/authn-authz.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/authn-authz.md)] --- class: extra-details ## Token authentication in practice - We are going to list existing service accounts - Then we will extract the token for a given service account - And we will use that token to authenticate with the API .debug[[k8s/authn-authz.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/authn-authz.md)] --- class: extra-details ## Listing service accounts .lab[ - The resource name is `serviceaccount` or `sa` for short: ```bash kubectl get sa ``` ] There should be just one service account in the default namespace: `default`. .debug[[k8s/authn-authz.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/authn-authz.md)] --- class: extra-details ## Finding the secret .lab[ - List the secrets for the `default` service account: ```bash kubectl get sa default -o yaml SECRET=$(kubectl get sa default -o json | jq -r .secrets[0].name) ``` ] It should be named `default-token-XXXXX`. 
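Starting with Kubernetes 1.24, token Secrets are no longer created automatically for service accounts, so `.secrets[0].name` may come back empty. The sketch below handles both cases. `sa_token` is a hypothetical helper. If a Secret exists, it reads the token from that Secret. Otherwise it asks the API for a short-lived token with `kubectl create token`, which requires kubectl 1.24 or later.

```bash
# sa_token (hypothetical helper): print a bearer token for service account $2
# in namespace $1, from its legacy token Secret if any, else via kubectl create token.
sa_token() {
  local ns=$1 sa=$2 secret
  secret=$(kubectl -n "$ns" get sa "$sa" -o jsonpath='{.secrets[0].name}' 2>/dev/null)
  if [ -n "$secret" ]; then
    # Legacy token Secret: the token is stored base64-encoded in .data.token.
    kubectl -n "$ns" get secret "$secret" -o jsonpath='{.data.token}' | base64 -d
  else
    # No Secret: request a short-lived token instead (kubectl 1.24+).
    kubectl -n "$ns" create token "$sa"
  fi
}
```

Usage: `TOKEN=$(sa_token default default)`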
.debug[[k8s/authn-authz.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/authn-authz.md)] --- class: extra-details ## Extracting the token - The token is stored in the secret, wrapped with base64 encoding .lab[ - View the secret: ```bash kubectl get secret $SECRET -o yaml ``` - Extract the token and decode it: ```bash TOKEN=$(kubectl get secret $SECRET -o json \ | jq -r .data.token | openssl base64 -d -A) ``` ] .debug[[k8s/authn-authz.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/authn-authz.md)] --- class: extra-details ## Using the token - Let's send a request to the API, without and with the token .lab[ - Find the ClusterIP for the `kubernetes` service: ```bash kubectl get svc kubernetes API=$(kubectl get svc kubernetes -o json | jq -r .spec.clusterIP) ``` - Connect without the token: ```bash curl -k https://$API ``` - Connect with the token: ```bash curl -k -H "Authorization: Bearer $TOKEN" https://$API ``` ] .debug[[k8s/authn-authz.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/authn-authz.md)] --- class: extra-details ## Results - In both cases, we will get a "Forbidden" error - Without authentication, the user is `system:anonymous` - With authentication, it is shown as `system:serviceaccount:default:default` - The API "sees" us as a different user - But neither user has any rights, so we can't do nothin' - Let's change that! 
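To see which identity the API server attributed to each request, we can extract the user name from the error message. The sketch below uses `forbidden_user`, a hypothetical helper. It assumes the message has the usual `User "<name>" cannot ...` form, with or without JSON escaping.

```bash
# forbidden_user (hypothetical helper): read an API error (JSON Status or
# kubectl error text) on stdin and print the user name from 'User "<name>"'.
forbidden_user() {
  # Drop JSON backslash escapes, then capture the quoted name after 'User '.
  tr -d '\\' | sed -n 's/.*User "\([^"]*\)".*/\1/p' | head -n 1
}
```

`curl -sk https://$API | forbidden_user` should print `system:anonymous`. Adding `-H "Authorization: Bearer $TOKEN"` should print `system:serviceaccount:default:default` instead.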
.debug[[k8s/authn-authz.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/authn-authz.md)] --- ## Authorization in Kubernetes - There are multiple ways to grant permissions in Kubernetes, called [authorizers](https://kubernetes.io/docs/reference/access-authn-authz/authorization/#authorization-modules): - [Node Authorization](https://kubernetes.io/docs/reference/access-authn-authz/node/) (used internally by kubelet; we can ignore it) - [Attribute-based access control](https://kubernetes.io/docs/reference/access-authn-authz/abac/) (powerful but complex and static; ignore it too) - [Webhook](https://kubernetes.io/docs/reference/access-authn-authz/webhook/) (each API request is submitted to an external service for approval) - [Role-based access control](https://kubernetes.io/docs/reference/access-authn-authz/rbac/) (associates permissions with users dynamically) - The one we want is the last one, generally abbreviated as RBAC .debug[[k8s/authn-authz.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/authn-authz.md)] --- ## Role-based access control - RBAC allows us to specify fine-grained permissions - Permissions are expressed as *rules* - A rule is a combination of: - [verbs](https://kubernetes.io/docs/reference/access-authn-authz/authorization/#determine-the-request-verb) like create, get, list, update, delete... - resources (as in "API resource," like pods, nodes, services...) - resource names (to specify e.g. one specific pod instead of all pods) - in some cases, [subresources](https://kubernetes.io/docs/reference/access-authn-authz/rbac/#referring-to-resources) (e.g.
logs are subresources of pods) .debug[[k8s/authn-authz.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/authn-authz.md)] --- class: extra-details ## Listing all possible verbs - The Kubernetes API is self-documented - We can ask it which resources, subresources, and verbs exist - One way to do this is to use: - `kubectl get --raw /api/v1` (for core resources with `apiVersion: v1`) - `kubectl get --raw /apis/<group>/<version>` (for other resources) - The JSON response can be formatted with e.g. `jq` for readability .debug[[k8s/authn-authz.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/authn-authz.md)] --- class: extra-details ## Examples - List all verbs across all `v1` resources ```bash kubectl get --raw /api/v1 | jq -r .resources[].verbs[] | sort -u ``` - List all resources and subresources in `apps/v1` ```bash kubectl get --raw /apis/apps/v1 | jq -r .resources[].name ``` - List which verbs are available on which resources in `networking.k8s.io` ```bash kubectl get --raw /apis/networking.k8s.io/v1 | \ jq -r '.resources[] | .name + ": " + (.verbs | join(", "))' ``` .debug[[k8s/authn-authz.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/authn-authz.md)] --- ## From rules to roles to rolebindings - A *role* is an API object containing a list of *rules* Example: role "external-load-balancer-configurator" can: - [list, get] resources [endpoints, services, pods] - [update] resources [services] - A *rolebinding* associates a role with a user Example: rolebinding "external-load-balancer-configurator": - associates user "external-load-balancer-configurator" - with role "external-load-balancer-configurator" - Yes, there can be users, roles, and rolebindings with the same name - It's a good idea for 1-1-1 bindings; not so much for 1-N ones .debug[[k8s/authn-authz.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/authn-authz.md)] --- ## Cluster-scope
permissions - API resources Role and RoleBinding are for objects within a namespace - We can also define API resources ClusterRole and ClusterRoleBinding - These are a superset, allowing us to: - specify actions on cluster-wide objects (like nodes) - operate across all namespaces - We can create Role and RoleBinding resources within a namespace - ClusterRole and ClusterRoleBinding resources are global .debug[[k8s/authn-authz.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/authn-authz.md)] --- ## Pods and service accounts - A pod can be associated with a service account - by default, it is associated with the `default` service account - as we saw earlier, this service account has no permissions anyway - The associated token is exposed to the pod's filesystem (in `/var/run/secrets/kubernetes.io/serviceaccount/token`) - Standard Kubernetes tooling (like `kubectl`) will look for it there - So Kubernetes tools running in a pod will automatically use the service account .debug[[k8s/authn-authz.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/authn-authz.md)] --- ## In practice - We are going to run a pod - This pod will use the default service account of its namespace - We will check our API permissions (there shouldn't be any) - Then we will bind a role to the service account - We will check that we were granted the corresponding permissions .debug[[k8s/authn-authz.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/authn-authz.md)] --- ## Running a pod - We'll use [Nixery](https://nixery.dev/) to run a pod with `curl` and `kubectl` - Nixery automatically generates images with the requested packages .lab[ - Run our pod: ```bash kubectl run eyepod --rm -ti --restart=Never \ --image nixery.dev/shell/curl/kubectl -- bash ``` ] .debug[[k8s/authn-authz.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/authn-authz.md)] --- ## Checking our permissions - 
Normally, at this point, we don't have any API permissions .lab[ - Check our permissions with `kubectl`: ```bash kubectl get pods ``` ] - We should get a message telling us that our service account doesn't have permissions to list "pods" in the current namespace - We can also make requests to the API server directly (use `kubectl -v6` to see the exact request URI!) .debug[[k8s/authn-authz.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/authn-authz.md)] --- ## Binding a role to the service account - Binding a role = creating a *rolebinding* object - We will call that object `can-view` (but again, we could call it `view` or whatever we like) .lab[ - Create the new role binding: ```bash kubectl create rolebinding can-view \ --clusterrole=view \ --serviceaccount=default:default ``` ] It's important to note a couple of details in these flags... .debug[[k8s/authn-authz.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/authn-authz.md)] --- ## Roles vs Cluster Roles - We used `--clusterrole=view` - What would have happened if we had used `--role=view`? - we would have bound the role `view` from the local namespace <br/>(instead of the cluster role `view`) - the command would have worked fine (no error) - but later, our API requests would have been denied - This is a deliberate design decision (we can reference roles that don't exist, and create/update them later) .debug[[k8s/authn-authz.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/authn-authz.md)] --- ## Users vs Service Accounts - We used `--serviceaccount=default:default` - What would have happened if we had used `--user=default:default`? - we would have bound the role to a user instead of a service account - again, the command would have worked fine (no error) - ...but our API requests would have been denied later - What about the `default:` prefix?
- that's the namespace of the service account - yes, it could be inferred from context, but... `kubectl` requires it .debug[[k8s/authn-authz.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/authn-authz.md)] --- ## Checking our new permissions - We should be able to *view* things, but not to *edit* them .lab[ - Check our permissions with `kubectl`: ```bash kubectl get pods ``` - Try to create something: ```bash kubectl create deployment can-i-do-this --image=nginx ``` - Exit the container with `exit` or `^D` <!-- ```key ^D``` --> ] .debug[[k8s/authn-authz.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/authn-authz.md)] --- class: extra-details ## `kubectl run --serviceaccount` - `kubectl run` also has a `--serviceaccount` flag - ...But it's supposed to be deprecated "soon" (see [kubernetes/kubernetes#99732](https://github.com/kubernetes/kubernetes/pull/99732) for details) - It's possible to specify the service account with an override: ```bash kubectl run my-pod -ti --image=alpine --restart=Never \ --overrides='{ "spec": { "serviceAccountName" : "my-service-account" } }' ``` .debug[[k8s/authn-authz.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/authn-authz.md)] --- ## `kubectl auth` and other CLI tools - The `kubectl auth can-i` command can tell us: - if we can perform an action - if someone else can perform an action - what actions we can perform - There are also other very useful tools to work with RBAC - Let's do a quick review! 
.debug[[k8s/authn-authz.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/authn-authz.md)] --- ## `kubectl auth can-i dothis onthat` - These commands will give us a `yes`/`no` answer: ```bash kubectl auth can-i list nodes kubectl auth can-i create pods kubectl auth can-i get pod/name-of-pod kubectl auth can-i get /url-fragment-of-api-request/ kubectl auth can-i '*' services kubectl auth can-i get coffee kubectl auth can-i drink coffee ``` - The RBAC system is flexible - We can check permissions on resources that don't exist yet (e.g. CRDs) - We can check permissions for arbitrary actions .debug[[k8s/authn-authz.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/authn-authz.md)] --- ## `kubectl auth can-i ... --as someoneelse` - We can check permissions on behalf of other users ```bash kubectl auth can-i list nodes \ --as some-user kubectl auth can-i list nodes \ --as system:serviceaccount:<namespace>:<name-of-service-account> ``` - We can also use `--as-group` to check permissions for members of a group - `--as` and `--as-group` leverage the *impersonation API* - These flags can be used with many other `kubectl` commands (not just `auth can-i`) .debug[[k8s/authn-authz.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/authn-authz.md)] --- ## `kubectl auth can-i --list` - We can list the actions that are available to us: ```bash kubectl auth can-i --list ``` - ... Or to someone else (with `--as SomeOtherUser`) - This is very useful to check users or service accounts for overly broad permissions (or when looking for ways to exploit a security vulnerability!) 
- To learn more about Kubernetes attacks and threat models around RBAC: 📽️ [Hacking into Kubernetes Security for Beginners](https://www.youtube.com/watch?v=mLsCm9GVIQg) by [Ellen Körbes](https://twitter.com/ellenkorbes) and [Tabitha Sable](https://twitter.com/TabbySable) .debug[[k8s/authn-authz.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/authn-authz.md)] --- class: extra-details ## Other useful tools - For auditing purposes, sometimes we want to know who can perform which actions - There are a few tools to help us with that, available as `kubectl` plugins: - `kubectl who-can` / [kubectl-who-can](https://github.com/aquasecurity/kubectl-who-can) by Aqua Security - `kubectl access-matrix` / [Rakkess (Review Access)](https://github.com/corneliusweig/rakkess) by Cornelius Weig - `kubectl rbac-lookup` / [RBAC Lookup](https://github.com/FairwindsOps/rbac-lookup) by FairwindsOps - `kubectl rbac-tool` / [RBAC Tool](https://github.com/alcideio/rbac-tool) by insightCloudSec - `kubectl` plugins can be installed and managed with `krew` - They can also be installed and executed as standalone programs .debug[[k8s/authn-authz.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/authn-authz.md)] --- class: extra-details ## Where does this `view` role come from? - Kubernetes defines a number of ClusterRoles intended to be bound to users - `cluster-admin` can do *everything* (think `root` on UNIX) - `admin` can do *almost everything* (except e.g. 
changing resource quotas and limits) - `edit` is similar to `admin`, but cannot view or edit permissions - `view` has read-only access to most resources, except permissions and secrets *In many situations, these roles will be all you need.* *You can also customize them!* .debug[[k8s/authn-authz.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/authn-authz.md)] --- class: extra-details ## Customizing the default roles - If you need to *add* permissions to these default roles (or others), <br/> you can do it through the [ClusterRole Aggregation](https://kubernetes.io/docs/reference/access-authn-authz/rbac/#aggregated-clusterroles) mechanism - This happens by creating a ClusterRole with the following labels: ```yaml metadata: labels: rbac.authorization.k8s.io/aggregate-to-admin: "true" rbac.authorization.k8s.io/aggregate-to-edit: "true" rbac.authorization.k8s.io/aggregate-to-view: "true" ``` - Each label adds this ClusterRole's permissions to the matching default role (`admin`, `edit`, or `view`) .debug[[k8s/authn-authz.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/authn-authz.md)] --- class: extra-details ## When should we use aggregation? - By default, CRDs aren't included in `view` / `edit` / etc.
(Kubernetes cannot guess which ones are security-sensitive and which ones are not) - If we edit `view` / `edit` / etc. directly, our edits will conflict (imagine if we have two CRDs and they both provide a custom `view` ClusterRole) - Using aggregated roles lets us enrich the default roles without touching them .debug[[k8s/authn-authz.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/authn-authz.md)] --- class: extra-details ## How aggregation works - The default roles have an `aggregationRule` like this one (from `view`): ```yaml aggregationRule: clusterRoleSelectors: - matchLabels: rbac.authorization.k8s.io/aggregate-to-view: "true" ``` - We can define our own custom roles with their own aggregation rules .debug[[k8s/authn-authz.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/authn-authz.md)] --- class: extra-details ## Where do our permissions come from? - When interacting with the Kubernetes API, we are using a client certificate - We saw previously that this client certificate contained: `CN=kubernetes-admin` and `O=system:masters` - Let's look for these in existing ClusterRoleBindings: ```bash kubectl get clusterrolebindings -o yaml | grep -e kubernetes-admin -e system:masters ``` (`system:masters` should show up, but not `kubernetes-admin`.) - Where does this match come from?

- It is in the `cluster-admin` binding:
  ```bash
  kubectl describe clusterrolebinding cluster-admin
  ```

- This binding associates `system:masters` with the cluster role `cluster-admin`

- And the `cluster-admin` is, basically, `root`:
  ```bash
  kubectl describe clusterrole cluster-admin
  ```

.debug[[k8s/authn-authz.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/authn-authz.md)]
---

## `list` vs. `get`

⚠️ `list` grants read permissions to resources!

- It's not possible to give permission to list resources without also giving permission to read them

- This has implications for e.g. Secrets

  (if a controller needs to be able to enumerate Secrets, it will be able to read them)

???

:EN:- Authentication and authorization in Kubernetes
:EN:- Authentication with tokens and certificates
:EN:- Authorization with RBAC (Role-Based Access Control)
:EN:- Restricting permissions with Service Accounts
:EN:- Working with Roles, Cluster Roles, Role Bindings, etc.

:FR:- Identification et droits d'accès dans Kubernetes
:FR:- Mécanismes d'identification par jetons et certificats
:FR:- Le modèle RBAC *(Role-Based Access Control)*
:FR:- Restreindre les permissions grâce aux *Service Accounts*
:FR:- Comprendre les *Roles*, *Cluster Roles*, *Role Bindings*, etc.

.debug[[k8s/authn-authz.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/authn-authz.md)]
---

class: pic

.interstitial[]

---

name: toc-sealed-secrets
class: title

Sealed Secrets

.nav[
[Previous part](#toc-authentication-and-authorization)
|
[Back to table of contents](#toc-part-1)
|
[Next part](#toc-cert-manager)
]

.debug[(automatically generated title slide)]

---

# Sealed Secrets

- Kubernetes provides the "Secret" resource to store credentials, keys, passwords ...

- Secrets can be protected with RBAC

  (e.g.
  "you can write secrets, but only the app's service account can read them")

- [Sealed Secrets](https://github.com/bitnami-labs/sealed-secrets) is an operator that lets us store secrets in code repositories

- It uses asymmetric cryptography:

  - anyone can *encrypt* a secret

  - only the cluster can *decrypt* a secret

.debug[[k8s/sealed-secrets.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/sealed-secrets.md)]
---

## Principle

- The Sealed Secrets operator uses a *public* and a *private* key

- The public key is available publicly (duh!)

- We use the public key to encrypt secrets into a SealedSecret resource

- The SealedSecret resource can be stored in a code repo (even a public one)

- The SealedSecret resource is `kubectl apply`'d to the cluster

- The Sealed Secrets controller decrypts the SealedSecret with the private key

  (this creates a classic Secret resource)

- Nobody else can decrypt secrets, since only the controller has the private key

.debug[[k8s/sealed-secrets.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/sealed-secrets.md)]
---

## In action

- We will install the Sealed Secrets operator

- We will generate a Secret

- We will "seal" that Secret (generate a SealedSecret)

- We will load that SealedSecret on the cluster

- We will check that we now have a Secret

.debug[[k8s/sealed-secrets.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/sealed-secrets.md)]
---

## Installing the operator

- The official installation is done through a single YAML file

- There is also a Helm chart if you prefer that

  (if you're using Kubernetes 1.22+, see next slide!)

<!-- #VERSION# -->

.lab[

- Install the operator:

  .small[
  ```bash
  kubectl apply -f \
    https://github.com/bitnami-labs/sealed-secrets/releases/download/v0.16.0/controller.yaml
  ```
  ]

]

Note: it installs into `kube-system` by default.
If you change that, you will also need to tell `kubeseal` about it later on (with `--controller-namespace`).
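
Once the operator is running, the cluster accepts a new resource type, SealedSecret. The sketch below shows roughly what `kubeseal` produces from the `awskey` Secret created in the next slides. It assumes the `default` Namespace; the ciphertext values are placeholders (real ones are long base64 strings), and the exact fields may vary between Sealed Secrets versions.

```yaml
# Approximate shape of a SealedSecret; ciphertexts are placeholders,
# not real kubeseal output. Namespace "default" is an assumption.
apiVersion: bitnami.com/v1alpha1
kind: SealedSecret
metadata:
  name: awskey
  namespace: default
spec:
  # One entry per key of the original Secret; each value is encrypted
  # with the controller's public key, so only the controller can decrypt it.
  encryptedData:
    AWS_ACCESS_KEY_ID: "<ciphertext>"      # placeholder
    AWS_SECRET_ACCESS_KEY: "<ciphertext>"  # placeholder
  # Metadata applied to the Secret that the controller creates
  # after unsealing.
  template:
    metadata:
      name: awskey
      namespace: default
```

Only the values in `encryptedData` are protected; names, Namespace, and key names stay readable, which is what makes the file reviewable in a code repository.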

.debug[[k8s/sealed-secrets.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/sealed-secrets.md)]
---

class: extra-details

## Sealed Secrets on Kubernetes 1.22

- As of version 0.16, the Sealed Secrets YAML manifests use RBAC v1beta1

- RBAC v1beta1 isn't supported anymore in Kubernetes 1.22

- The Sealed Secrets Helm chart provides manifests using RBAC v1

- Conclusion: to install Sealed Secrets on Kubernetes 1.22, use the Helm chart:
  ```bash
  helm install --repo https://bitnami-labs.github.io/sealed-secrets/ \
       sealed-secrets-controller sealed-secrets --namespace kube-system
  ```

- Make sure to install in the `kube-system` Namespace

- Make sure that the release is named `sealed-secrets-controller`

  (or pass a `--controller-name` option to `kubeseal` later)

.debug[[k8s/sealed-secrets.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/sealed-secrets.md)]
---

## Creating a Secret

- Let's create a normal (unencrypted) secret

.lab[

- Create a Secret with a couple of API tokens:
  ```bash
  kubectl create secret generic awskey \
    --from-literal=AWS_ACCESS_KEY_ID=AKI... \
    --from-literal=AWS_SECRET_ACCESS_KEY=abc123xyz... \
    --dry-run=client -o yaml > secret-aws.yaml
  ```

]

- Note the `--dry-run` and `-o yaml`

  (we're just generating YAML, not sending the secrets to our Kubernetes cluster)

- We could also write the YAML from scratch or generate it with other tools

.debug[[k8s/sealed-secrets.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/sealed-secrets.md)]
---

## Creating a Sealed Secret

- This is done with the `kubeseal` tool

- It will obtain the public key from the cluster

.lab[

- Create the Sealed Secret:
  ```bash
  kubeseal < secret-aws.yaml > sealed-secret-aws.json
  ```

]

- The file `sealed-secret-aws.json` can be committed to your public repo

  (if you prefer YAML output, you can add `-o yaml`)

.debug[[k8s/sealed-secrets.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/sealed-secrets.md)]
---

## Using a Sealed Secret

- Now let's `kubectl apply` that Sealed Secret to the cluster

- The Sealed Secret controller will "unseal" it for us

.lab[

- Check that our Secret doesn't exist (yet):
  ```bash
  kubectl get secrets
  ```

- Load the Sealed Secret into the cluster:
  ```bash
  kubectl apply -f sealed-secret-aws.json
  ```

- Check that the secret is now available:
  ```bash
  kubectl get secrets
  ```

]

.debug[[k8s/sealed-secrets.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/sealed-secrets.md)]
---

## Tweaking secrets

- Let's see what happens if we try to rename the Secret

  (or use it in a different namespace)

.lab[

- Delete both the Secret and the SealedSecret

- Edit `sealed-secret-aws.json`

- Change the name of the secret, or its namespace

  (both in the SealedSecret metadata and in the Secret template)

- `kubectl apply -f` the new JSON file and observe the results 🤔

]

.debug[[k8s/sealed-secrets.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/sealed-secrets.md)]
---

## Sealed Secrets are *scoped*

- A SealedSecret cannot be renamed or moved to another namespace

  (at least, not by
  default!)

- Otherwise, it would allow us to evade RBAC rules:

  - if I can view Secrets in namespace `myapp` but not in namespace `yourapp`

  - I could take a SealedSecret belonging to namespace `yourapp`

  - ... and deploy it in `myapp`

  - ... and view the resulting decrypted Secret!

- This can be changed with `--scope namespace-wide` or `--scope cluster-wide`

.debug[[k8s/sealed-secrets.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/sealed-secrets.md)]
---

## Working offline

- We can obtain the public key from the server

  (technically, as a PEM certificate)

- Then we can use that public key offline

  (without contacting the server)

- Relevant commands:

  `kubeseal --fetch-cert > seal.pem`

  `kubeseal --cert seal.pem < secret.yaml > sealedsecret.json`

.debug[[k8s/sealed-secrets.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/sealed-secrets.md)]
---

## Key rotation

- The controller generates new keys every month by default

- The keys are kept as TLS Secrets in the `kube-system` namespace

  (named `sealed-secrets-keyXXXXX`)

- When keys are "rotated", old decryption keys are kept

  (otherwise we couldn't decrypt previously-generated SealedSecrets)

.debug[[k8s/sealed-secrets.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/sealed-secrets.md)]
---

## Key compromise

- If the *sealing* key (obtained with `--fetch-cert`) is compromised:

  *we don't need to do anything (it's a public key!)*

- However, if the *unsealing* key (the TLS secret in `kube-system`) is compromised ...

  *we need to:*

  - rotate the key

  - rotate the SealedSecrets that were encrypted with that key
    <br/>
    (as they are compromised)

.debug[[k8s/sealed-secrets.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/sealed-secrets.md)]
---

## Rotating the key

- By default, new keys are generated every 30 days

- To force the generation of a new key "right now":

  - obtain an RFC1123 timestamp with `date -R`

  - edit Deployment `sealed-secrets-controller` (in `kube-system`)

  - add `--key-cutoff-time=TIMESTAMP` to the command-line

- *Then*, rotate the SealedSecrets that were encrypted with the compromised key

  (generate new Secrets, then encrypt them with the new key)

.debug[[k8s/sealed-secrets.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/sealed-secrets.md)]
---

## Discussion (the good)

- The footprint of the operator is rather small:

  - only one CRD

  - one Deployment, one Service

  - a few RBAC-related objects

.debug[[k8s/sealed-secrets.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/sealed-secrets.md)]
---

## Discussion (the less good)

- Events could be improved

  - `no key to decrypt secret` when there is a name/namespace mismatch

  - no event indicating that a SealedSecret was successfully unsealed

- Key rotation could be improved (how to find secrets corresponding to a key?)

- If the sealing keys are lost, it's impossible to unseal the SealedSecrets

  (e.g. cluster reinstall)

- ... Which means that we need to back up the sealing keys

- ... Which means that we need to be super careful with these backups!
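
Concretely, backing up the sealing keys means backing up the TLS Secrets mentioned in the "Key rotation" slide. Here is a sketch of one of them, assuming the default installation in `kube-system`. The name suffix and data values are placeholders, and the label is the one the Sealed Secrets documentation uses to select these Secrets; double-check it on your own cluster (for instance with `kubectl get secrets -n kube-system --show-labels`) before building a backup procedure on it.

```yaml
# Sketch of a Sealed Secrets key pair as stored by the controller
# (default installation). Suffix and data values are placeholders.
apiVersion: v1
kind: Secret
type: kubernetes.io/tls
metadata:
  name: sealed-secrets-keyXXXXX   # suffix generated by the controller
  namespace: kube-system
  labels:
    # Label used to find the keys; selecting on it (kubectl -l ...)
    # is a convenient way to export all of them at once.
    sealedsecrets.bitnami.com/sealed-secrets-key: active
data:
  tls.crt: "<base64 certificate>"  # public part, used to seal
  tls.key: "<base64 private key>"  # private part, needed to unseal
```

Anyone holding a copy of `tls.key` can unseal every SealedSecret encrypted with the matching certificate, so the backup needs the same level of protection as the cluster itself.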

.debug[[k8s/sealed-secrets.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/sealed-secrets.md)]
---

## Other approaches

- [Kamus](https://kamus.soluto.io/) ([git](https://github.com/Soluto/kamus)) offers "zero-trust" secrets

  (the cluster cannot decrypt secrets; only the application can decrypt them)

- [Vault](https://learn.hashicorp.com/tutorials/vault/kubernetes-sidecar?in=vault/kubernetes) can do ... a lot

  - dynamic secrets (generated on the fly for a consumer)

  - certificate management

  - integration outside of Kubernetes

  - and much more!

???

:EN:- The Sealed Secrets Operator
:FR:- L'opérateur *Sealed Secrets*

.debug[[k8s/sealed-secrets.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/sealed-secrets.md)]
---

class: pic

.interstitial[]

---

name: toc-cert-manager
class: title

cert-manager

.nav[
[Previous part](#toc-sealed-secrets)
|
[Back to table of contents](#toc-part-1)
|
[Next part](#toc-ingress-and-tls-certificates)
]

.debug[(automatically generated title slide)]

---

# cert-manager

- cert-manager¹ facilitates certificate signing through the Kubernetes API:

  - we create a Certificate object (that's a CRD)

  - cert-manager creates a private key

  - it signs that key ...

  - ... or interacts with a certificate authority to obtain the signature

  - it stores the resulting key+cert in a Secret resource

- These Secret resources can be used in many places (Ingress, mTLS, ...)
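
The Secret that cert-manager writes at the end of this process is an ordinary TLS Secret. A sketch, assuming a Certificate whose `secretName` is `example-tls` (a hypothetical name) in the `default` Namespace; the data values are placeholders, and depending on the issuer, cert-manager may also store a `ca.crt` entry.

```yaml
# Sketch of the Secret produced by cert-manager for a Certificate
# with secretName: example-tls (hypothetical name).
apiVersion: v1
kind: Secret
type: kubernetes.io/tls          # standard type for TLS key pairs
metadata:
  name: example-tls              # taken from the Certificate's secretName
  namespace: default             # same Namespace as the Certificate
data:
  tls.crt: "<base64 PEM certificate>"  # placeholder: signed certificate
  tls.key: "<base64 PEM private key>"  # placeholder: key created by cert-manager
```

Because it follows the standard `tls.crt` / `tls.key` layout, such a Secret can be referenced directly from an Ingress `tls` section.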

.footnote[.red[¹]Always lower case, words separated with a dash; see the [style guide](https://cert-manager.io/docs/faq/style/).]

.debug[[k8s/cert-manager.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/cert-manager.md)]
---

## Getting signatures

- cert-manager can use multiple *Issuers* (another CRD), including:

  - self-signed

  - cert-manager acting as a CA

  - the [ACME protocol](https://en.wikipedia.org/wiki/Automated_Certificate_Management_Environment) (notably used by Let's Encrypt)

  - [HashiCorp Vault](https://www.vaultproject.io/)

- Multiple issuers can be configured simultaneously

- Issuers can be available in a single namespace, or in the whole cluster

  (then we use the *ClusterIssuer* CRD)

.debug[[k8s/cert-manager.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/cert-manager.md)]
---

## cert-manager in action

- We will install cert-manager

- We will create a ClusterIssuer to obtain certificates with Let's Encrypt

  (this will involve setting up an Ingress Controller)

- We will create a Certificate request

- cert-manager will honor that request and create a TLS Secret

.debug[[k8s/cert-manager.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/cert-manager.md)]
---

## Installing cert-manager

- It can be installed with a YAML manifest, or with Helm

.lab[

- Let's install the cert-manager Helm chart with this one-liner:
  ```bash
  helm install cert-manager cert-manager \
      --repo https://charts.jetstack.io \
      --create-namespace --namespace cert-manager \
      --set installCRDs=true
  ```

]

- If you prefer to install with a single YAML file, that's fine too!

  (see [the documentation](https://cert-manager.io/docs/installation/kubernetes/#installing-with-regular-manifests) for instructions)

.debug[[k8s/cert-manager.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/cert-manager.md)]
---

## ClusterIssuer manifest

```yaml
apiVersion: cert-manager.io/v1
kind: ClusterIssuer
metadata:
  name: letsencrypt-staging
spec:
  acme:
    # Remember to update this if you use this manifest to obtain real certificates :)
    email: hello@example.com
    server: https://acme-staging-v02.api.letsencrypt.org/directory
    # To use the production environment, use the following line instead:
    #server: https://acme-v02.api.letsencrypt.org/directory
    privateKeySecretRef:
      name: issuer-letsencrypt-staging
    solvers:
    - http01:
        ingress:
          class: traefik
```

.debug[[k8s/cert-manager.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/cert-manager.md)]
---

## Creating the ClusterIssuer

- The manifest shown on the previous slide is in
  [k8s/cm-clusterissuer.yaml](https://github.com/jpetazzo/container.training/tree/master/k8s/cm-clusterissuer.yaml)

.lab[

- Create the ClusterIssuer:
  ```bash
  kubectl apply -f ~/container.training/k8s/cm-clusterissuer.yaml
  ```

]

.debug[[k8s/cert-manager.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/cert-manager.md)]
---

## Certificate manifest

```yaml
apiVersion: cert-manager.io/v1
kind: Certificate
metadata:
  name: xyz.A.B.C.D.nip.io
spec:
  secretName: xyz.A.B.C.D.nip.io
  dnsNames:
  - xyz.A.B.C.D.nip.io
  issuerRef:
    name: letsencrypt-staging
    kind: ClusterIssuer
```

- The `name`, `secretName`, and `dnsNames` don't have to match

- There can be multiple `dnsNames`

- The `issuerRef` must match the ClusterIssuer that we created earlier

.debug[[k8s/cert-manager.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/cert-manager.md)]
---

## Creating the Certificate

- The manifest shown on the previous slide is in
  [k8s/cm-certificate.yaml](https://github.com/jpetazzo/container.training/tree/master/k8s/cm-certificate.yaml)

.lab[

- Edit the Certificate to update the domain name

  (make sure to replace A.B.C.D with the IP address of one of your nodes!)

- Create the Certificate:
  ```bash
  kubectl apply -f ~/container.training/k8s/cm-certificate.yaml
  ```

]

.debug[[k8s/cert-manager.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/cert-manager.md)]
---

## What's happening?

- cert-manager will create:

  - the private key

  - a Pod, a Service, and an Ingress to complete the HTTP challenge

- then it waits for the challenge to complete

.lab[

- View the resources created by cert-manager:
  ```bash
  kubectl get pods,services,ingresses \
          --selector=acme.cert-manager.io/http01-solver=true
  ```

]

.debug[[k8s/cert-manager.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/cert-manager.md)]
---

## HTTP challenge

- The CA (in this case, Let's Encrypt) will fetch a particular URL:

  `http://<our-domain>/.well-known/acme-challenge/<token>`

.lab[

- Check the *path* of the Ingress in particular:
  ```bash
  kubectl describe ingress --selector=acme.cert-manager.io/http01-solver=true
  ```

]

.debug[[k8s/cert-manager.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/cert-manager.md)]
---

## What's missing?

--

An Ingress Controller! 😅

.lab[

- Install an Ingress Controller:
  ```bash
  kubectl apply -f ~/container.training/k8s/traefik-v2.yaml
  ```

- Wait a little bit, and check that we now have a `kubernetes.io/tls` Secret:
  ```bash
  kubectl get secrets
  ```

]

.debug[[k8s/cert-manager.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/cert-manager.md)]
---

class: extra-details

## Using the secret

- For bonus points, try to use the secret in an Ingress!

- This is what the manifest would look like:
  ```yaml
  apiVersion: networking.k8s.io/v1
  kind: Ingress
  metadata:
    name: xyz
  spec:
    tls:
    - secretName: xyz.A.B.C.D.nip.io
      hosts:
      - xyz.A.B.C.D.nip.io
    rules:
    ...
  ```

.debug[[k8s/cert-manager.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/cert-manager.md)]
---

class: extra-details

## Automatic TLS Ingress with annotations

- It is also possible to annotate Ingress resources for cert-manager

- If we annotate an Ingress resource with `cert-manager.io/cluster-issuer=xxx`:

  - cert-manager will detect that annotation

  - it will obtain a certificate using the specified ClusterIssuer (`xxx`)

  - it will store the key and certificate in the Secret named in the Ingress `tls` section

- Note: the Ingress still needs the `tls` section with `secretName` and `hosts`

.debug[[k8s/cert-manager.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/cert-manager.md)]
---

class: extra-details

## Let's Encrypt and nip.io

- Let's Encrypt has [rate limits](https://letsencrypt.org/docs/rate-limits/) per domain

  (the staging environment has much higher limits than the production environment)

- There is a limit of 50 certificates per registered domain per week

- If we try to use the production environment, we will probably hit the limit

- It's fine to use the staging environment for these experiments

  (our certs won't validate in a browser, but we can always check
  the details of the cert to verify that it was issued by Let's Encrypt!)

???

:EN:- Obtaining certificates with cert-manager
:FR:- Obtenir des certificats avec cert-manager
:T: Obtaining TLS certificates with cert-manager

.debug[[k8s/cert-manager.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/cert-manager.md)]
---

class: pic

.interstitial[]

---

name: toc-ingress-and-tls-certificates
class: title

Ingress and TLS certificates

.nav[
[Previous part](#toc-cert-manager)
|
[Back to table of contents](#toc-part-1)
|
[Next part](#toc-exercise--sealed-secrets)
]

.debug[(automatically generated title slide)]

---

# Ingress and TLS certificates

- Most ingress controllers support TLS connections

  (in a way that is standard across controllers)

- The TLS key and certificate are stored in a Secret

- The Secret is then referenced in the Ingress resource:
  ```yaml
  spec:
    tls:
    - secretName: XXX
      hosts:
      - YYY
    rules:
    - ZZZ
  ```

.debug[[k8s/ingress-tls.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/ingress-tls.md)]
---

## Obtaining a certificate

- In the next section, we will need a TLS key and certificate

- These usually come in [PEM](https://en.wikipedia.org/wiki/Privacy-Enhanced_Mail) format:
  ```
  -----BEGIN CERTIFICATE-----
  MIIDATCCAemg...
  ...
  -----END CERTIFICATE-----
  ```

- We will see how to generate a self-signed certificate

  (easy, fast, but won't be recognized by web browsers)

- We will also see how to obtain a certificate from [Let's Encrypt](https://letsencrypt.org/)

  (requires the cluster to be reachable through a domain name)

.debug[[k8s/ingress-tls.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/ingress-tls.md)]
---

class: extra-details

## In production ...

- A very popular option is to use the [cert-manager](https://cert-manager.io/docs/) operator

- It's a flexible, modular approach to automated certificate management

- For simplicity, in this section, we will use [certbot](https://certbot.eff.org/)

- The method shown here works well for one-time certs, but lacks:

  - automation

  - renewal

.debug[[k8s/ingress-tls.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/ingress-tls.md)]
---

## Which domain to use

- If you're doing this in a training:

  *the instructor will tell you what to use*

- If you're doing this on your own Kubernetes cluster:

  *you should use a domain that points to your cluster*

- More precisely:

  *you should use a domain that points to your ingress controller*

- If you don't have a domain name, you can use [nip.io](https://nip.io/)

  (if your ingress controller is on 1.2.3.4, you can use `whatever.1.2.3.4.nip.io`)

.debug[[k8s/ingress-tls.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/ingress-tls.md)]
---

## Setting `$DOMAIN`

- We will use `$DOMAIN` in the following section

- Let's set it now

.lab[

- Set the `DOMAIN` environment variable:
  ```bash
  export DOMAIN=...
  ```

]

.debug[[k8s/ingress-tls.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/ingress-tls.md)]
---

## Choose your adventure!

- We present 3 methods to obtain a certificate

- We suggest using method 1 (self-signed certificate)

  - it's the simplest and fastest method

  - it doesn't rely on other components

- You're welcome to try methods 2 and 3 (leveraging certbot)

  - they're great if you want to understand "how the sausage is made"

  - they require some hacks (make sure port 80 is available)

  - they wouldn't be used in production (cert-manager is better)

.debug[[k8s/ingress-tls.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/ingress-tls.md)]
---

## Method 1, self-signed certificate

- Thanks to `openssl`, generating a self-signed cert is just one command away!

.lab[

- Generate a key and certificate:
  ```bash
  openssl req \
     -newkey rsa -nodes -keyout privkey.pem \
     -x509 -days 30 -subj /CN=$DOMAIN/ -out cert.pem
  ```

]

This will create two files, `privkey.pem` and `cert.pem`.

.debug[[k8s/ingress-tls.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/ingress-tls.md)]
---

## Method 2, Let's Encrypt with certbot

- `certbot` is an [ACME](https://tools.ietf.org/html/rfc8555) client

  (Automatic Certificate Management Environment)

- We can use it to obtain certificates from Let's Encrypt

- It needs to listen on port 80

  (to complete the [HTTP-01 challenge](https://letsencrypt.org/docs/challenge-types/))

- If port 80 is already taken by our ingress controller, see method 3

.debug[[k8s/ingress-tls.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/ingress-tls.md)]
---

class: extra-details

## HTTP-01 challenge

- `certbot` contacts Let's Encrypt, asking for a cert for `$DOMAIN`

- Let's Encrypt gives a token to `certbot`

- Let's Encrypt then tries to access the following URL:

  `http://$DOMAIN/.well-known/acme-challenge/<token>`

- That URL needs to be routed to `certbot`

- Once Let's Encrypt gets the response from `certbot`, it issues the certificate

.debug[[k8s/ingress-tls.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/ingress-tls.md)]
---

## Running certbot

- There is a very convenient container image, `certbot/certbot`

- Let's use a volume to get easy access to the generated key and certificate

.lab[

- Obtain a certificate from Let's Encrypt:
  ```bash
  EMAIL=your.address@example.com
  docker run --rm -p 80:80 -v $PWD/letsencrypt:/etc/letsencrypt \
     certbot/certbot certonly \
     -m $EMAIL \
     --standalone --agree-tos -n \
     --domain $DOMAIN \
     --test-cert
  ```

]

This will get us a "staging" certificate.
Remove `--test-cert` to obtain a *real* certificate.

.debug[[k8s/ingress-tls.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/ingress-tls.md)]
---

## Copying the key and certificate

- If everything went fine:

  - the key and certificate files are in `letsencrypt/live/$DOMAIN`

  - they are owned by `root`

.lab[

- Grant ourselves permissions on these files:
  ```bash
  sudo chown -R $USER letsencrypt
  ```

- Copy the certificate and key to the current directory:
  ```bash
  cp letsencrypt/live/$DOMAIN/{cert,privkey}.pem .
  ```

]

.debug[[k8s/ingress-tls.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/ingress-tls.md)]
---

## Method 3, certbot with Ingress

- Sometimes, we can't simply listen on port 80:

  - we might already have an ingress controller there

  - our nodes might be on an internal network

- But we can define an Ingress to route the HTTP-01 challenge to `certbot`!

- Our Ingress needs to route all requests to `/.well-known/acme-challenge` to `certbot`

- There are at least two ways to do that:

  - run `certbot` in a Pod (and extract the cert+key when it's done)

  - run `certbot` in a container on a node (and manually route traffic to it)

- We're going to use the second option

  (mostly because it will give us an excuse to tinker with Endpoints resources!)
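
Routing traffic to a container that Kubernetes doesn't manage relies on a Service *without* a selector, paired with an Endpoints resource written by hand. A minimal sketch, assuming a Service named `certbot` (a hypothetical name) and a `certbot` container published on port 8000 of the node at `A.B.C.D`; the `certbot.yaml` file used in this section may differ in its details.

```yaml
# Service without a selector: Kubernetes won't compute its endpoints,
# so we provide them ourselves. The name "certbot" is hypothetical.
apiVersion: v1
kind: Service
metadata:
  name: certbot
spec:
  ports:
  - port: 80            # port used by clients (e.g. the Ingress)
---
# An Endpoints resource must have the same name as its Service.
apiVersion: v1
kind: Endpoints
metadata:
  name: certbot
subsets:
- addresses:
  - ip: A.B.C.D         # replace with the node's IP address
  ports:
  - port: 8000          # host port where the certbot container is published
```

Since the Service has no selector, Kubernetes leaves our hand-written Endpoints alone, and connections to the Service on port 80 are forwarded to `A.B.C.D:8000`.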

.debug[[k8s/ingress-tls.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/ingress-tls.md)]
---

## The plan

- We need the following resources:

  - an Endpoints¹ listing a hard-coded IP address and port
    <br/>(where our `certbot` container will be listening)

  - a Service corresponding to that Endpoints

  - an Ingress sending requests to `/.well-known/acme-challenge/*` to that Service
    <br/>(we don't even need to include a domain name in it)

- Then we need to start `certbot` so that it's listening on the right address+port

.footnote[¹Endpoints is always plural, because even a single resource is a list of endpoints.]

.debug[[k8s/ingress-tls.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/ingress-tls.md)]
---

## Creating resources

- We prepared a YAML file to create the three resources

- However, the Endpoints needs to be adapted to use the current node's address

.lab[

- Edit `~/container.training/k8s/certbot.yaml`

  (replace `A.B.C.D` with the current node's address)

- Create the resources:
  ```bash
  kubectl apply -f ~/container.training/k8s/certbot.yaml
  ```

]

.debug[[k8s/ingress-tls.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/ingress-tls.md)]
---

## Obtaining the certificate

- Now we can run `certbot`, listening on the port listed in the Endpoints

  (i.e. 8000)

.lab[

- Run `certbot`:
  ```bash
  EMAIL=your.address@example.com
  docker run --rm -p 8000:80 -v $PWD/letsencrypt:/etc/letsencrypt \
     certbot/certbot certonly \
     -m $EMAIL \
     --standalone --agree-tos -n \
     --domain $DOMAIN \
     --test-cert
  ```

]

This is using the staging environment.
Remove `--test-cert` to get a production certificate.
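
The third resource of the plan, the Ingress, could look like the following sketch. It assumes a Service named `certbot` exposing port 80 (both hypothetical) and an ingress controller that handles Ingresses without an explicit `ingressClassName`; adjust these to match `certbot.yaml` and your controller.

```yaml
# Ingress sending ACME HTTP-01 challenge requests to certbot.
# Names are hypothetical. There is no "host" field, so the rule
# applies to any domain name that reaches the ingress controller.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: certbot
spec:
  rules:
  - http:
      paths:
      - path: /.well-known/acme-challenge/
        pathType: Prefix        # matches every token under this path
        backend:
          service:
            name: certbot       # Service pointing to the certbot container
            port:
              number: 80
```

Leaving out `host` is what allows the plan to skip the domain name: the challenge path is routed to `certbot` whatever name Let's Encrypt uses to reach the cluster.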

.debug[[k8s/ingress-tls.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/ingress-tls.md)]
---

## Copying the certificate

- Just like in the previous method, the certificate is in `letsencrypt/live/$DOMAIN`

  (and owned by root)

.lab[

- Grant ourselves permissions on these files:
  ```bash
  sudo chown -R $USER letsencrypt
  ```

- Copy the certificate and key to the current directory:
  ```bash
  cp letsencrypt/live/$DOMAIN/{cert,privkey}.pem .
  ```

]

.debug[[k8s/ingress-tls.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/ingress-tls.md)]
---

## Creating the Secret

- We now have two files:

  - `privkey.pem` (the private key)

  - `cert.pem` (the certificate)

- We can create a Secret to hold them

.lab[

- Create the Secret:
  ```bash
  kubectl create secret tls $DOMAIN --cert=cert.pem --key=privkey.pem
  ```

]

.debug[[k8s/ingress-tls.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/ingress-tls.md)]
---

## Ingress with TLS

- To enable TLS for an Ingress, we need to add a `tls` section to the Ingress:
  ```yaml
  spec:
    tls:
    - secretName: DOMAIN
      hosts:
      - DOMAIN
    rules:
    ...
  ```

- The list of hosts will be used by the ingress controller

  (to know which certificate to use with [SNI](https://en.wikipedia.org/wiki/Server_Name_Indication))

- Of course, the name of the secret can be different

  (here, for clarity and convenience, we set it to match the domain)

.debug[[k8s/ingress-tls.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/ingress-tls.md)]
---

## `kubectl create ingress`

- We can also create an Ingress using TLS directly

- To do it, add `,tls=secret-name` to an Ingress rule

- Example:
  ```bash
  kubectl create ingress hello \
          --rule=hello.example.com/*=hello:80,tls=hello
  ```

- The domain will automatically be inferred from the rule

.debug[[k8s/ingress-tls.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/ingress-tls.md)]
---

class: extra-details

## About the ingress controller

- Many ingress controllers can use different "stores" for keys and certificates

- Our ingress controller needs to be configured to use Secrets

  (as opposed to, e.g., obtaining certificates directly with Let's Encrypt)

.debug[[k8s/ingress-tls.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/ingress-tls.md)]
---

## Using the certificate

.lab[

- Add the `tls` section to an existing Ingress

- If you need to see what the `tls` section should look like, you can:

  - `kubectl explain ingress.spec.tls`

  - `kubectl create ingress --dry-run=client -o yaml ...`

  - check `~/container.training/k8s/ingress.yaml` for inspiration

  - read the docs

- Check that the URL now works over `https`

  (it might take a minute to be picked up by the ingress controller)

]

.debug[[k8s/ingress-tls.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/ingress-tls.md)]
---

## Discussion

*To repeat something mentioned earlier ...*

- The methods presented here are for *educational purposes only*

- In most production scenarios, the certificates will be obtained automatically

- A very popular
  option is to use the [cert-manager](https://cert-manager.io/docs/) operator

.debug[[k8s/ingress-tls.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/ingress-tls.md)]
---

## Security

- Since TLS certificates are stored in Secrets...

- ...It means that our Ingress controller must be able to read Secrets

- A vulnerability in the Ingress controller can have dramatic consequences

- See [CVE-2021-25742](https://github.com/kubernetes/ingress-nginx/issues/7837) for an example

- This can be mitigated by limiting which Secrets the controller can access

  (RBAC rules can specify resource names)

- Downside: each TLS secret must explicitly be listed in RBAC

  (but that's better than a full cluster compromise, isn't it?)

???

:EN:- Ingress and TLS
:FR:- Certificats TLS et *ingress*

.debug[[k8s/ingress-tls.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/ingress-tls.md)]
---

class: pic

.interstitial[]

---

name: toc-exercise--sealed-secrets
class: title

Exercise — Sealed Secrets

.nav[
[Previous part](#toc-ingress-and-tls-certificates)
|
[Back to table of contents](#toc-part-1)
|
[Next part](#toc-extending-the-kubernetes-api)
]

.debug[(automatically generated title slide)]

---

# Exercise — Sealed Secrets

This is a "combo exercise" to practice the following concepts:

- Secrets (exposing them in containers)

- RBAC (granting specific permissions to specific users)

- Operators (specifically, sealed secrets)

- Migrations (copying/transferring resources from a cluster to another)

For this exercise, you will need two clusters.

(It can be two local clusters.)

We will call them "dev cluster" and "prod cluster".

.debug[[exercises/sealed-secrets-details.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/exercises/sealed-secrets-details.md)]
---

## Overview

- For simplicity, our application will be NGINX (or `jpetazzo/color`)

- Our application needs two secrets:

  - a *logging API token* (not too sensitive; same in dev and prod)

  - a *database password* (sensitive; different in dev and prod)

- Secrets can be exposed as env vars, or mounted in volumes

  (it doesn't matter for this exercise)

- We want to prepare and deploy the application in the dev cluster

- ...Then deploy it to the prod cluster

.debug[[exercises/sealed-secrets-details.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/exercises/sealed-secrets-details.md)]
---

## Step 1 (easy)

- On the dev cluster, create a Namespace called `dev`

- Create the two secrets, `logging-api-token` and `database-password`

  (the content doesn't matter; put a random string of your choice)

- Create a Deployment called `app` using both secrets

  (use a mount or environment variables; whatever you prefer!)

- Verify that the secrets are available to the Deployment

  (e.g.
with `kubectl exec`) - Generate YAML manifests for the application (Deployment+Secrets) .debug[[exercises/sealed-secrets-details.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/exercises/sealed-secrets-details.md)] --- ## Step 2 (medium) - Deploy the sealed secrets operator on the dev cluster - In the YAML, replace the Secrets with SealedSecrets - Delete the `dev` Namespace, recreate it, redeploy the app (to make sure everything works fine) - Create a `staging` Namespace and try to deploy the app - If something doesn't work, fix it -- - Hint: set the *scope* of the sealed secrets .debug[[exercises/sealed-secrets-details.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/exercises/sealed-secrets-details.md)] --- ## Step 3 (hard) - On the prod cluster, create a Namespace called `prod` - Try to deploy the application using the YAML manifests - It won't work (the cluster needs the sealing key) - Fix it! (check the next slides if you need hints) -- - You will have to copy the Sealed Secret private key -- - And restart the operator so that it picks up the key .debug[[exercises/sealed-secrets-details.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/exercises/sealed-secrets-details.md)] --- ## Step 4 (medium) Let's say that we have a user called `alice` on the prod cluster. (You can use `kubectl --as=alice` to impersonate her.) 
We want Alice to be able to: - deploy the whole application in the `prod` namespace - access the *logging API token* secret - but *not* the *database password* secret - view the logs of the app .debug[[exercises/sealed-secrets-details.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/exercises/sealed-secrets-details.md)] --- class: pic .interstitial[] --- name: toc-extending-the-kubernetes-api class: title Extending the Kubernetes API .nav[ [Previous part](#toc-exercise--sealed-secrets) | [Back to table of contents](#toc-part-2) | [Next part](#toc-custom-resource-definitions) ] .debug[(automatically generated title slide)] --- # Extending the Kubernetes API There are multiple ways to extend the Kubernetes API. We are going to cover: - Controllers - Dynamic Admission Webhooks - Custom Resource Definitions (CRDs) - The Aggregation Layer But first, let's re(re)visit the API server ... .debug[[k8s/extending-api.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/extending-api.md)] --- ## Revisiting the API server - The Kubernetes API server is a central point of the control plane - Everything connects to the API server: - users (that's us, but also automation like CI/CD) - kubelets - network components (e.g. `kube-proxy`, pod network, NPC) - controllers; lots of controllers .debug[[k8s/extending-api.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/extending-api.md)] --- ## Some controllers - `kube-controller-manager` runs built-in controllers (watching Deployments, Nodes, ReplicaSets, and much more) - `kube-scheduler` runs the scheduler (conceptually, it is just another controller) - `cloud-controller-manager` takes care of "cloud stuff" (e.g. provisioning load balancers, persistent volumes...) - Some components mentioned above are also controllers (e.g.
Network Policy Controller) .debug[[k8s/extending-api.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/extending-api.md)] --- ## More controllers - Cloud resources can also be managed by additional controllers (e.g. the [AWS Load Balancer Controller](https://github.com/kubernetes-sigs/aws-load-balancer-controller)) - Leveraging Ingress resources requires an Ingress Controller (many options available here; we can even install multiple ones!) - Many add-ons (including CRDs and operators) have controllers as well 🤔 *What's even a controller?!?* .debug[[k8s/extending-api.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/extending-api.md)] --- ## What's a controller? According to the [documentation](https://kubernetes.io/docs/concepts/architecture/controller/): *Controllers are **control loops** that<br/> **watch** the state of your cluster,<br/> then make or request changes where needed.* *Each controller tries to move the current cluster state closer to the desired state.* .debug[[k8s/extending-api.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/extending-api.md)] --- ## What controllers do - Watch resources - Make changes: - purely at the API level (e.g. Deployment, ReplicaSet controllers) - and/or configure resources (e.g. `kube-proxy`) - and/or provision resources (e.g. load balancer controller) .debug[[k8s/extending-api.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/extending-api.md)] --- ## Extending Kubernetes with controllers - Random example: - watch resources like Deployments, Services ... - read annotations to configure monitoring - Technically, this is not extending the API (but it can still be very useful!)
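To make the "random example" above concrete, here is the kind of object such a monitoring controller could watch. This is a sketch: the annotation keys are hypothetical (annotation keys are free-form, so each controller defines its own), and nothing happens with them unless a controller that reads them is actually running.

```yaml
# A regular Service, with annotations meant to be read by a
# hypothetical monitoring controller (not part of Kubernetes itself).
apiVersion: v1
kind: Service
metadata:
  name: web
  annotations:
    monitoring.example.com/scrape: "true"   # annotation values must be strings
    monitoring.example.com/path: /metrics
    monitoring.example.com/port: "9100"
spec:
  selector:
    app: web
  ports:
  - name: http
    port: 80
    targetPort: 8080
```

The controller's job would be to notice when such Services are created, updated, or deleted, and to update the monitoring system's configuration accordingly. The Kubernetes API itself is unchanged: these are ordinary Services.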
.debug[[k8s/extending-api.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/extending-api.md)] --- ## Other ways to extend Kubernetes - Prevent or alter API requests before resources are committed to storage: *Admission Control* - Create new resource types leveraging Kubernetes storage facilities: *Custom Resource Definitions* - Create new resource types with different storage or different semantics: *Aggregation Layer* - Spoiler alert: often, we will combine multiple techniques (and involve controllers as well!) .debug[[k8s/extending-api.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/extending-api.md)] --- ## Admission controllers - Admission controllers can vet or transform API requests - The diagram on the next slide shows the path of an API request (courtesy of Banzai Cloud) .debug[[k8s/extending-api.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/extending-api.md)] --- class: pic  .debug[[k8s/extending-api.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/extending-api.md)] --- ## Types of admission controllers - *Validating* admission controllers can accept/reject the API call - *Mutating* admission controllers can modify the API request payload - Both types can also trigger additional actions (e.g. 
automatically create a Namespace if it doesn't exist) - There are a number of built-in admission controllers (see [documentation](https://kubernetes.io/docs/reference/access-authn-authz/admission-controllers/#what-does-each-admission-controller-do) for a list) - We can also dynamically define and register our own .debug[[k8s/extending-api.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/extending-api.md)] --- class: extra-details ## Some built-in admission controllers - ServiceAccount: automatically adds a ServiceAccount to Pods that don't explicitly specify one - LimitRanger: applies resource constraints specified by LimitRange objects when Pods are created - NamespaceAutoProvision: automatically creates namespaces when an object is created in a non-existent namespace *Note: #1 and #2 are enabled by default; #3 is not.* .debug[[k8s/extending-api.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/extending-api.md)] --- ## Dynamic Admission Control - We can set up *admission webhooks* to extend the behavior of the API server - The API server will submit incoming API requests to these webhooks - These webhooks can be *validating* or *mutating* - Webhooks can be set up dynamically (without restarting the API server) - To set up a dynamic admission webhook, we create a special resource: a `ValidatingWebhookConfiguration` or a `MutatingWebhookConfiguration` - These resources are created and managed like other resources (i.e. `kubectl create`, `kubectl get`...) .debug[[k8s/extending-api.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/extending-api.md)] --- ## Webhook Configuration - A ValidatingWebhookConfiguration or MutatingWebhookConfiguration contains: - the address of the webhook - the authentication information to use with the webhook - a list of rules - The rules indicate for which objects and actions the webhook is triggered (to avoid e.g.
triggering webhooks when setting up webhooks) - The webhook server can be hosted in or out of the cluster .debug[[k8s/extending-api.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/extending-api.md)] --- ## Dynamic Admission Examples - Policy control ([Kyverno](https://kyverno.io/), [Open Policy Agent](https://www.openpolicyagent.org/docs/latest/)) - Sidecar injection (used by some service meshes) - Type validation (more on this later, in the CRD section) .debug[[k8s/extending-api.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/extending-api.md)] --- ## Kubernetes API types - Almost everything in Kubernetes is represented by a resource - Resources have a type (or "kind") (similar to strongly typed languages) - We can see existing types with `kubectl api-resources` - We can list resources of a given type with `kubectl get <type>` .debug[[k8s/extending-api.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/extending-api.md)] --- ## Creating new types - We can create new types with Custom Resource Definitions (CRDs) - CRDs are created dynamically (without recompiling or restarting the API server) - CRDs themselves are resources: - we can create a new type with `kubectl create` and some YAML - we can see all our custom types with `kubectl get crds` - After we create a CRD, the new type works just like built-in types .debug[[k8s/extending-api.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/extending-api.md)] --- ## Examples - Representing composite resources (e.g. clusters like databases, message queues ...) - Representing external resources (e.g. virtual machines, object store buckets, domain names ...) - Representing configuration for controllers and operators (e.g. custom Ingress resources, certificate issuers, backups ...) - Alternate representations of other objects; services and service instances (e.g. encrypted secrets, git endpoints ...)
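For instance, an external resource like a domain name could be represented by a custom resource such as the one below. This is a hypothetical sketch. It assumes that a CRD named `dnsrecords.example.com` (kind `DNSRecord`, group `example.com`) has already been created. It also assumes that a controller (not shown) creates the matching record with a DNS provider. The `spec` fields are made up for illustration.

```yaml
# Hypothetical custom resource representing an external resource.
# Requires a CRD for kind DNSRecord (group example.com) to exist first;
# without a controller watching it, this is just data stored in etcd.
apiVersion: example.com/v1alpha1
kind: DNSRecord
metadata:
  name: www-example-com
spec:
  name: www.example.com
  type: A
  ttl: 300
  values:
  - 203.0.113.10    # address from a documentation-only range (RFC 5737)
```

If the CRD declares `dnsrecords` as its plural name, `kubectl get dnsrecords` would then list these objects like any built-in type.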
.debug[[k8s/extending-api.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/extending-api.md)] --- ## The aggregation layer - We can delegate entire parts of the Kubernetes API to external servers - This is done by creating APIService resources (check them with `kubectl get apiservices`!) - The APIService resource maps an API group and version (e.g. `v1beta1.metrics.k8s.io`) to an external service - All requests concerning that group and version are sent (proxied) to the external service - This lets us have resources that behave like CRDs, but aren't stored in etcd - Example: `metrics-server` .debug[[k8s/extending-api.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/extending-api.md)] --- ## Why? - Using a CRD for live metrics would be extremely inefficient (etcd **is not** a metrics store; write performance is way too slow) - Instead, `metrics-server`: - collects metrics from kubelets - stores them in memory - exposes them as PodMetrics and NodeMetrics (in API group metrics.k8s.io) - is registered as an APIService .debug[[k8s/extending-api.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/extending-api.md)] --- ## Drawbacks - Requires a server - ... that implements a non-trivial API (aka the Kubernetes API semantics) - If we need REST semantics, CRDs are probably way simpler - *Sometimes* synchronizing external state with CRDs might do the trick (unless we want the external state to be our single source of truth) .debug[[k8s/extending-api.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/extending-api.md)] --- ## Service catalog - *Service catalog* is another extension mechanism - It's not extending the Kubernetes API strictly speaking (but it still provides new features!)
- It doesn't let us define arbitrary new types; instead, it uses a fixed set: - ClusterServiceBroker - ClusterServiceClass - ClusterServicePlan - ServiceInstance - ServiceBinding - It uses the Open Service Broker API .debug[[k8s/extending-api.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/extending-api.md)] --- ## Documentation - [Custom Resource Definitions: when to use them](https://kubernetes.io/docs/concepts/extend-kubernetes/api-extension/custom-resources/) - [Custom Resource Definitions: how to use them](https://kubernetes.io/docs/tasks/access-kubernetes-api/custom-resources/custom-resource-definitions/) - [Service Catalog](https://kubernetes.io/docs/concepts/extend-kubernetes/service-catalog/) - [Built-in Admission Controllers](https://kubernetes.io/docs/reference/access-authn-authz/admission-controllers/) - [Dynamic Admission Controllers](https://kubernetes.io/docs/reference/access-authn-authz/extensible-admission-controllers/) - [Aggregation Layer](https://kubernetes.io/docs/concepts/extend-kubernetes/api-extension/apiserver-aggregation/) ???
:EN:- Overview of Kubernetes API extensions :FR:- Comment étendre l'API Kubernetes .debug[[k8s/extending-api.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/extending-api.md)] --- class: pic .interstitial[] --- name: toc-custom-resource-definitions class: title Custom Resource Definitions .nav[ [Previous part](#toc-extending-the-kubernetes-api) | [Back to table of contents](#toc-part-2) | [Next part](#toc-operators) ] .debug[(automatically generated title slide)] --- # Custom Resource Definitions - CRDs are one of the (many) ways to extend the API - CRDs can be defined dynamically (no need to recompile or reload the API server) - A CRD is defined with a CustomResourceDefinition resource (CustomResourceDefinition is conceptually similar to a *metaclass*) .debug[[k8s/crd.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/crd.md)] --- ## Creating a CRD - We will create a CRD to represent the different species of coffee (arabica, liberica, and robusta) - We will be able to run `kubectl get coffees` and it will list the species - Then we can label, edit, etc. the species to attach some information (e.g. the taste profile of the coffee, or whatever we want) .debug[[k8s/crd.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/crd.md)] --- ## First shot of coffee
```yaml
# Note: apiextensions.k8s.io/v1beta1 is deprecated, and won't be served
# in Kubernetes 1.22 and later versions. This YAML manifest is here just
# for reference, but it's not intended to be used in modern trainings.
apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  name: coffees.container.training
spec:
  group: container.training
  version: v1alpha1
  scope: Namespaced
  names:
    plural: coffees
    singular: coffee
    kind: Coffee
    shortNames:
    - cof
```
.debug[[k8s/crd.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/crd.md)] --- ## The joys of API deprecation - Unfortunately, the CRD manifest on the previous slide is deprecated! - It is using `apiextensions.k8s.io/v1beta1`, which was removed in Kubernetes 1.22 - We need to use `apiextensions.k8s.io/v1`, which is a little bit more complex (a few optional fields become mandatory, see [this guide](https://kubernetes.io/docs/reference/using-api/deprecation-guide/#customresourcedefinition-v122) for details) - `apiextensions.k8s.io/v1` is available since Kubernetes 1.16 .debug[[k8s/crd.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/crd.md)] --- ## Second shot of coffee - The next slide will show file [k8s/coffee-2.yaml](https://github.com/jpetazzo/container.training/tree/master/k8s/coffee-2.yaml) - Note the `spec.versions` list - we need exactly one version with `storage: true` - we can have multiple versions with `served: true` - `spec.versions[].schema.openAPIV3Schema` is required (and must be a valid OpenAPI schema; here it's a trivial one) .debug[[k8s/crd.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/crd.md)] ---
```yaml
apiVersion: apiextensions.k8s.io/v1
kind: CustomResourceDefinition
metadata:
  name: coffees.container.training
spec:
  group: container.training
  versions:
    - name: v1alpha1
      served: true
      storage: true
      schema:
        openAPIV3Schema:
          type: object
  scope: Namespaced
  names:
    plural: coffees
    singular: coffee
    kind: Coffee
    shortNames:
    - cof
```
.debug[[k8s/crd.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/crd.md)] --- ## Creating our Coffee CRD - Let's create the Custom Resource
Definition for our Coffee resource .lab[ - Load the CRD:
```bash
kubectl apply -f ~/container.training/k8s/coffee-2.yaml
```
- Confirm that it shows up:
```bash
kubectl get crds
```
] .debug[[k8s/crd.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/crd.md)] --- ## Creating custom resources The YAML below defines a resource using the CRD that we just created:
```yaml
kind: Coffee
apiVersion: container.training/v1alpha1
metadata:
  name: arabica
spec:
  taste: strong
```
.lab[ - Create a few types of coffee beans:
```bash
kubectl apply -f ~/container.training/k8s/coffees.yaml
```
] .debug[[k8s/crd.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/crd.md)] --- ## Viewing custom resources - By default, `kubectl get` only shows the name and age of custom resources .lab[ - View the coffee beans that we just created:
```bash
kubectl get coffees
```
] - We'll see in a bit how to improve that .debug[[k8s/crd.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/crd.md)] --- ## What can we do with CRDs? There are many possibilities! - *Operators* encapsulate complex sets of resources (e.g. a replicated PostgreSQL cluster; an etcd cluster... <br/> see [awesome operators](https://github.com/operator-framework/awesome-operators) and [OperatorHub](https://operatorhub.io/) to find more) - Custom use-cases like [gitkube](https://gitkube.sh/) - creates a new custom type, `Remote`, exposing a git+ssh server - deploy by pushing YAML or Helm charts to that remote - Replacing built-in types with CRDs (see [this lightning talk by Tim Hockin](https://www.youtube.com/watch?v=ji0FWzFwNhA)) .debug[[k8s/crd.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/crd.md)] --- ## What's next?
- Creating a basic CRD is quick and easy - But there is a lot more that we can (and probably should) do: - improve input with *data validation* - improve output with *custom columns* - And of course, we probably need a *controller* to go with our CRD! (otherwise, we're just using the Kubernetes API as a fancy data store) .debug[[k8s/crd.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/crd.md)] --- ## Additional printer columns - We can specify `additionalPrinterColumns` in the CRD (with `apiextensions.k8s.io/v1`, this goes in each entry of `spec.versions`) - This is similar to `-o custom-columns` (map a column name to a path in the object, e.g. `.spec.taste`)
```yaml
additionalPrinterColumns:
- jsonPath: .spec.taste
  description: Subjective taste of that kind of coffee bean
  name: Taste
  type: string
- jsonPath: .metadata.creationTimestamp
  name: Age
  type: date
```
.debug[[k8s/crd.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/crd.md)] --- ## Using additional printer columns - Let's update our CRD using [k8s/coffee-3.yaml](https://github.com/jpetazzo/container.training/tree/master/k8s/coffee-3.yaml) .lab[ - Update the CRD:
```bash
kubectl apply -f ~/container.training/k8s/coffee-3.yaml
```
- Look at our Coffee resources:
```bash
kubectl get coffees
```
] Note: we can update a CRD without having to re-create the corresponding resources. (Good news, right?) .debug[[k8s/crd.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/crd.md)] --- ## Data validation - CRDs are validated with the OpenAPI v3 schema that we specify (with older versions of the API, when the schema was optional, <br/> no schema = no validation at all) - Without a schema, we could put anything we want in the `spec` - More advanced validation can also be done with admission webhooks, e.g.: - consistency between parameters - advanced integer filters (e.g.
odd number of replicas) - things that can change in one direction but not the other .debug[[k8s/crd.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/crd.md)] --- ## OpenAPI v3 schema example This is what we have in [k8s/coffee-3.yaml](https://github.com/jpetazzo/container.training/tree/master/k8s/coffee-3.yaml):
```yaml
schema:
  openAPIV3Schema:
    type: object
    required: [ spec ]
    properties:
      spec:
        type: object
        properties:
          taste:
            description: Subjective taste of that kind of coffee bean
            type: string
        required: [ taste ]
```
.debug[[k8s/crd.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/crd.md)] --- ## Validation *a posteriori* - Some of the "coffees" that we defined earlier *do not* pass validation - How is that possible? -- - Validation happens at *admission* (when resources get written into the database) - Therefore, we can have "invalid" resources in etcd (they are invalid from the CRD perspective, but the CRD can be changed) 🤔 How should we handle that? .debug[[k8s/crd.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/crd.md)] --- ## Versions - If the data format changes, we can roll out a new version of the CRD (e.g. go from `v1alpha1` to `v1alpha2`) - In a CRD we can specify the versions that exist, that are *served*, and *stored* - multiple versions can be *served* - only one can be *stored* - Kubernetes doesn't automatically migrate the content of the database - However, it can convert between versions when resources are read/written .debug[[k8s/crd.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/crd.md)] --- ## Conversion - When *creating* a new resource, the *stored* version is used (if we create it with another version, it gets converted) - When *getting* or *watching* resources, the *requested* version is used (if it is stored with another version, it gets converted) - By default, "conversion" only changes the `apiVersion` field - ...
But we can register *conversion webhooks* (see [that doc page](https://kubernetes.io/docs/tasks/extend-kubernetes/custom-resources/custom-resource-definition-versioning/#webhook-conversion) for details) .debug[[k8s/crd.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/crd.md)] --- ## Migrating database content - We need to *serve* a version as long as we *store* objects in that version (=as long as the database has at least one object with that version) - If we want to "retire" a version, we need to migrate these objects first - All we have to do is to read and re-write them (the [kube-storage-version-migrator](https://github.com/kubernetes-sigs/kube-storage-version-migrator) tool can help) .debug[[k8s/crd.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/crd.md)] --- ## What's next? - Generally, when creating a CRD, we also want to run a *controller* (otherwise nothing will happen when we create resources of that type) - The controller will typically *watch* our custom resources (and take action when they are created/updated) .debug[[k8s/crd.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/crd.md)] --- ## CRDs in the wild - [gitkube](https://storage.googleapis.com/gitkube/gitkube-setup-stable.yaml) - [A redis operator](https://github.com/amaizfinance/redis-operator/blob/master/deploy/crds/k8s_v1alpha1_redis_crd.yaml) - [cert-manager](https://github.com/jetstack/cert-manager/releases/download/v1.0.4/cert-manager.yaml) *How big are these YAML files?* *What's the size (e.g. 
in lines) of each resource?* .debug[[k8s/crd.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/crd.md)] --- ## CRDs in practice - Production-grade CRDs can be extremely verbose (because of the OpenAPI schema validation) - This can (and usually will) be managed by a framework .debug[[k8s/crd.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/crd.md)] --- ## (Ab)using the API server - If we need to store something "safely" (as in: in etcd), we can use CRDs - This gives us primitives to read/write/list objects (and optionally validate them) - The Kubernetes API server can run on its own (without the scheduler, controller manager, and kubelets) - By loading CRDs, we can have it manage totally different objects (unrelated to containers, clusters, etc.) ??? :EN:- Custom Resource Definitions (CRDs) :FR:- Les CRDs *(Custom Resource Definitions)* .debug[[k8s/crd.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/crd.md)] --- class: pic .interstitial[] --- name: toc-operators class: title Operators .nav[ [Previous part](#toc-custom-resource-definitions) | [Back to table of contents](#toc-part-2) | [Next part](#toc-dynamic-admission-control) ] .debug[(automatically generated title slide)] --- # Operators *An operator represents **human operational knowledge in software,** <br/> to reliably manage an application. — [CoreOS](https://coreos.com/blog/introducing-operators.html)* Examples: - Deploying and configuring replication with MySQL, PostgreSQL ... - Setting up Elasticsearch, Kafka, RabbitMQ, Zookeeper ... - Reacting to failures when intervention is needed - Scaling up and down these systems .debug[[k8s/operators.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/operators.md)] --- ## What are they made from?
- Operators combine two things: - Custom Resource Definitions - controller code watching the corresponding resources and acting upon them - A given operator can define one or multiple CRDs - The controller code (control loop) typically runs within the cluster (running as a Deployment with 1 replica is a common scenario) - But it could also run elsewhere (nothing mandates that the code run on the cluster, as long as it has API access) .debug[[k8s/operators.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/operators.md)] --- ## Why use operators? - Kubernetes gives us Deployments, StatefulSets, Services ... - These mechanisms give us building blocks to deploy applications - They work great for services that are made of *N* identical containers (like stateless ones) - They also work great for some stateful applications like Consul, etcd ... (with the help of highly persistent volumes) - They're not enough for complex services: - where different containers have different roles - where extra steps have to be taken when scaling or replacing containers .debug[[k8s/operators.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/operators.md)] --- ## Use-cases for operators - Systems with primary/secondary replication Examples: MariaDB, MySQL, PostgreSQL, Redis ... - Systems where different groups of nodes have different roles Examples: ElasticSearch, MongoDB ... 
- Systems with complex dependencies (that are themselves managed with operators) Examples: Flink or Kafka, which both depend on Zookeeper .debug[[k8s/operators.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/operators.md)] --- ## More use-cases - Representing and managing external resources (Example: [AWS S3 Operator](https://operatorhub.io/operator/awss3-operator-registry)) - Managing complex cluster add-ons (Example: [Istio operator](https://operatorhub.io/operator/istio)) - Deploying and managing our applications' lifecycles (more on that later) .debug[[k8s/operators.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/operators.md)] --- ## How operators work - An operator creates one or more CRDs (i.e., it creates new "Kinds" of resources on our cluster) - The operator also runs a *controller* that will watch its resources - Each time we create/update/delete a resource, the controller is notified (we could write our own cheap controller with `kubectl get --watch`) .debug[[k8s/operators.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/operators.md)] --- ## Deploying our apps with operators - It is very simple to deploy with `kubectl create deployment` / `kubectl expose` - We can unlock more features by writing YAML and using `kubectl apply` - Kustomize or Helm let us deploy in multiple environments (and adjust/tweak parameters in each environment) - We can also use an operator to deploy our application .debug[[k8s/operators.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/operators.md)] --- ## Pros and cons of deploying with operators - The app definition and configuration are persisted in the Kubernetes API - Multiple instances of the app can be listed with `kubectl get` - We can add labels and annotations to the app instances - Our controller can execute custom code for any lifecycle event - However, we need to write this controller - We
need to be careful about changes (what happens when the resource `spec` is updated?) .debug[[k8s/operators.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/operators.md)] --- ## Operators are not magic - Look at this ElasticSearch resource definition: [k8s/eck-elasticsearch.yaml](https://github.com/jpetazzo/container.training/tree/master/k8s/eck-elasticsearch.yaml) - What should happen if we flip the TLS flag? Twice? - What should happen if we add another group of nodes? - What if we want different images or parameters for the different nodes? *Operators can be very powerful. <br/> But we need to know exactly the scenarios that they can handle.* ??? :EN:- Kubernetes operators :FR:- Les opérateurs .debug[[k8s/operators.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/operators.md)] --- class: pic .interstitial[] --- name: toc-dynamic-admission-control class: title Dynamic Admission Control .nav[ [Previous part](#toc-operators) | [Back to table of contents](#toc-part-2) | [Next part](#toc-policy-management-with-kyverno) ] .debug[(automatically generated title slide)] --- # Dynamic Admission Control - This is one of the many ways to extend the Kubernetes API - High-level summary: dynamic admission control relies on webhooks that are ... - dynamic (can be added/removed on the fly) - running inside or outside the cluster - *validating* (yay/nay) or *mutating* (can change objects that are created/updated) - selective (can be configured to apply only to some kinds, some selectors...) - mandatory or optional (should it block operations when the webhook is down?) - Used on their own (e.g. policy enforcement) or as part of operators .debug[[k8s/admission.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/admission.md)] --- ## Use cases Some examples ... - Stand-alone admission controllers *validating:* policy enforcement (e.g. quotas, naming conventions ...)
*mutating:* inject or provide default values (e.g. pod presets) - Admission controllers that are part of a larger system *validating:* advanced typing for operators *mutating:* inject sidecars for service meshes .debug[[k8s/admission.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/admission.md)] --- ## You said *dynamic?* - Some admission controllers are built into the API server - They are enabled/disabled through Kubernetes API server configuration (e.g. `--enable-admission-plugins`/`--disable-admission-plugins` flags) - Here, we're talking about *dynamic* admission controllers - They can be added/removed while the API server is running (without touching the configuration files or even having access to them) - This is done through two kinds of cluster-scoped resources: ValidatingWebhookConfiguration and MutatingWebhookConfiguration .debug[[k8s/admission.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/admission.md)] --- ## You said *webhooks?* - A ValidatingWebhookConfiguration or MutatingWebhookConfiguration contains: - a resource filter <br/> (e.g. "all pods", "deployments in namespace xyz", "everything"...) - an operations filter <br/> (e.g. CREATE, UPDATE, DELETE) - the address of the webhook server - Each time an operation matches the filters, it is sent to the webhook server .debug[[k8s/admission.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/admission.md)] --- ## What gets sent exactly?
- The API server will `POST` a JSON object to the webhook - That object will be a Kubernetes API message with `kind` `AdmissionReview` - It will contain a `request` field, with, notably: - `request.uid` (to be used when replying) - `request.object` (the object being created or changed) - `request.oldObject` (the existing object, when it is modified or deleted) - `request.userInfo` (who was making the request to the API in the first place) (See [the documentation](https://kubernetes.io/docs/reference/access-authn-authz/extensible-admission-controllers/#request) for a detailed example showing more fields.) .debug[[k8s/admission.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/admission.md)] --- ## How should the webhook respond? - By replying with another `AdmissionReview` in JSON - It should have a `response` field, with, notably: - `response.uid` (matching the `request.uid`) - `response.allowed` (`true`/`false`) - `response.status.message` (optional string; useful when denying requests) - `response.patchType` (when a mutating webhook changes the object; currently always `JSONPatch`) - `response.patch` (the JSON Patch, encoded in base64) .debug[[k8s/admission.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/admission.md)] --- ## What if the webhook *does not* respond? - If "something bad" happens, the API server follows the `failurePolicy` option - this is a per-webhook option (specified in the webhook configuration) - it can be `Fail` (the default) or `Ignore` ("allow all, unmodified") - What's "something bad"? - webhook responds with something invalid - webhook takes more than 10 seconds to respond <br/> (this can be changed with the `timeoutSeconds` field in the webhook config) - webhook is down or has invalid certificates <br/> (TLS! It's not just a good idea; for admission control, it's the law!) .debug[[k8s/admission.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/admission.md)] --- ## What did you say about TLS?
- The webhook configuration can indicate: - either the `url` of the webhook server (has to begin with `https://`) - or the `service.name` and `service.namespace` of a Service on the cluster - In the latter case, the Service has to accept TLS connections on port 443 (the default; `service.port` can override it) - It has to use a certificate with CN `<name>.<namespace>.svc` (**and** a `subjectAltName` extension with `DNS:<name>.<namespace>.svc`) - The certificate needs to be valid (signed by a CA trusted by the API server) ... alternatively, we can pass a `caBundle` in the webhook configuration .debug[[k8s/admission.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/admission.md)] --- ## Webhook server inside or outside - "Outside" webhook server is defined with the `url` option - convenient for external webhooks (e.g. tamper-resistant audit trail) - also great for initial development (e.g. with ngrok) - requires outbound connectivity (duh) and can become a SPOF - "Inside" webhook server is defined with the `service` option - convenient when the webhook needs to be deployed and managed on the cluster - also great for air-gapped clusters - development can be harder (but tools like [Tilt](https://tilt.dev) can help) .debug[[k8s/admission.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/admission.md)] --- ## Developing a simple admission webhook - We're going to register a custom webhook!
- First, we'll just dump the `AdmissionRequest` object (using a little Node app) - Then, we'll implement a strict policy on a specific label (using a little Flask app) - Development will happen in local containers, plumbed with ngrok - Then, we will deploy to the cluster 🔥 .debug[[k8s/admission.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/admission.md)] --- ## Running the webhook locally - We prepared a Docker Compose file to start the whole stack (the Node "echo" app, the Flask app, and one ngrok tunnel for each of them) .lab[ - Go to the webhook directory: ```bash cd ~/container.training/webhooks/admission ``` - Start the webhooks in Docker containers: ```bash docker-compose up ``` ] *Note the URL in `ngrok-echo_1` looking like `url=https://xxxx.ngrok.io`.* .debug[[k8s/admission.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/admission.md)] --- class: extra-details ## What's ngrok? - Ngrok provides secure tunnels to access local services - Example: run `ngrok http 1234` - `ngrok` will display a publicly-available URL (e.g. https://xxxxyyyyzzzz.ngrok.io) - Connections to https://xxxxyyyyzzzz.ngrok.io will be forwarded to `localhost:1234` - Basic product is free; extra features (vanity domains, end-to-end TLS...) for $$$ - Perfect to develop our webhook!
- Probably not for production, though (webhook requests and responses now pass through the ngrok platform) .debug[[k8s/admission.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/admission.md)] --- ## Update the webhook configuration - We have a webhook configuration in `k8s/webhook-configuration.yaml` - We need to update the configuration with the correct `url` .lab[ - Edit the webhook configuration manifest: ```bash vim k8s/webhook-configuration.yaml ``` - **Uncomment** the `url:` line - **Update** the `.ngrok.io` URL with the URL shown by Compose - Save and quit ] .debug[[k8s/admission.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/admission.md)] --- ## Register the webhook configuration - Just after we register the webhook, it will be called for each matching request (CREATE and UPDATE on Pods in all namespaces) - The `failurePolicy` is `Ignore` (so if the webhook server is down, we can still create pods) .lab[ - Register the webhook: ```bash kubectl apply -f k8s/webhook-configuration.yaml ``` ] It is strongly recommended to tail the logs of the API server while doing that. .debug[[k8s/admission.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/admission.md)] --- ## Create a pod - Let's create a pod and try to set a `color` label .lab[ - Create a pod named `chroma`: ```bash kubectl run --restart=Never chroma --image=nginx ``` - Add a label `color` set to `pink`: ```bash kubectl label pod chroma color=pink ``` ] We should see the `AdmissionReview` objects in the Compose logs. Note: the webhook doesn't do anything (other than printing the request payload). 
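The echo webhook only logs the payload and lets everything through. As a rough sketch of what such a handler has to return, here is a Python version (not the Node app used in this lab; `build_review_response` and `echo_webhook` are hypothetical names, and the HTTP/TLS plumbing is left out). It echoes `request.uid`, sets `allowed`, and, for a mutating webhook, base64-encodes a JSON Patch with `patchType: JSONPatch`.

```python
import base64
import json


def build_review_response(review, allowed=True, message=None, patch_ops=None):
    """Build the AdmissionReview reply for an incoming AdmissionReview `review`."""
    request = review["request"]
    response = {
        "uid": request["uid"],  # must match the uid of the request
        "allowed": allowed,
    }
    if message is not None:
        # Shown to the user when the request is denied
        response["status"] = {"message": message}
    if patch_ops:
        # Mutating webhooks return a JSON Patch (list of operations), base64-encoded
        response["patchType"] = "JSONPatch"
        response["patch"] = base64.b64encode(json.dumps(patch_ops).encode()).decode()
    return {
        # Reply with the same apiVersion as the request (e.g. admission.k8s.io/v1)
        "apiVersion": review.get("apiVersion", "admission.k8s.io/v1"),
        "kind": "AdmissionReview",
        "response": response,
    }


def echo_webhook(review):
    """Print the incoming request, then allow it unmodified (like the echo app)."""
    print(json.dumps(review["request"], indent=2))
    return build_review_response(review, allowed=True)
```

A validating webhook would call `build_review_response(review, allowed=False, message="...")` to deny; only a mutating webhook should pass `patch_ops`.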
.debug[[k8s/admission.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/admission.md)] --- ## Use the "real" admission webhook - We have a small Flask app implementing a particular policy on pod labels: - if a pod sets a label `color`, it must be `blue`, `green`, or `red` - once that `color` label is set, it cannot be removed or changed - That Flask app was started when we did `docker-compose up` earlier - It is exposed through its own ngrok tunnel - We are going to use that webhook instead of the other one (by changing only the `url` field in the ValidatingWebhookConfiguration) .debug[[k8s/admission.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/admission.md)] --- ## Update the webhook configuration .lab[ - First, check the ngrok URL of the tunnel for the Flask app: ```bash docker-compose logs ngrok-flask ``` - Then, edit the webhook configuration: ```bash kubectl edit validatingwebhookconfiguration admission.container.training ``` - Find the `url:` field with the `.ngrok.io` URL and update it - Save and quit; the new configuration is applied immediately ] .debug[[k8s/admission.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/admission.md)] --- ## Verify the behavior of the webhook - Try to create a few pods and/or change labels on existing pods - What happens if we try to make changes to the earlier pod? (the one that has `color=pink`) .debug[[k8s/admission.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/admission.md)] --- ## Deploying the webhook on the cluster - Let's see what's needed to self-host the webhook server!
- The webhook needs to be reachable through a Service on our cluster - The Service needs to accept TLS connections on port 443 - We need a proper TLS certificate: - with the right `CN` and `subjectAltName` (`<servicename>.<namespace>.svc`) - signed by a trusted CA - We can either use a "real" CA, or use the `caBundle` option to specify the CA cert (the latter makes it easy to use self-signed certs) .debug[[k8s/admission.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/admission.md)] --- ## In practice - We're going to generate a key pair and a self-signed certificate - We will store them in a Secret - We will run the webhook in a Deployment, exposed with a Service - We will update the webhook configuration to use that Service - The Service will be named `admission`, in Namespace `webhooks` (keep in mind that the ValidatingWebhookConfiguration itself is at cluster scope) .debug[[k8s/admission.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/admission.md)] --- ## Let's get to work! .lab[ - Make sure we're in the right directory: ```bash cd ~/container.training/webhooks/admission ``` - Create the namespace: ```bash kubectl create namespace webhooks ``` - Switch to the namespace: ```bash kubectl config set-context --current --namespace=webhooks ``` ] .debug[[k8s/admission.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/admission.md)] --- ## Deploying the webhook - *Normally,* we would author an image for this - Since our webhook is just *one* Python source file ... ... 
we'll store it in a ConfigMap, and install dependencies on the fly .lab[ - Load the webhook source into a ConfigMap: ```bash kubectl create configmap admission --from-file=flask/webhook.py ``` - Create the Deployment and Service: ```bash kubectl apply -f k8s/webhook-server.yaml ``` ] .debug[[k8s/admission.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/admission.md)] --- ## Generating the key pair and certificate - Let's call OpenSSL to the rescue! (of course, there are plenty of other options; e.g. `cfssl`) .lab[ - Generate a self-signed certificate: ```bash NAMESPACE=webhooks SERVICE=admission CN=$SERVICE.$NAMESPACE.svc openssl req -x509 -newkey rsa:4096 -nodes -keyout key.pem -out cert.pem \ -days 30 -subj /CN=$CN -addext subjectAltName=DNS:$CN ``` - Load the key and cert into a Secret: ```bash kubectl create secret tls admission --cert=cert.pem --key=key.pem ``` ] .debug[[k8s/admission.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/admission.md)] --- ## Update the webhook configuration - Let's reconfigure the webhook to use our Service instead of ngrok .lab[ - Edit the webhook configuration manifest: ```bash vim k8s/webhook-configuration.yaml ``` - Comment out the `url:` line - Uncomment the `service:` section - Save, quit - Update the webhook configuration: ```bash kubectl apply -f k8s/webhook-configuration.yaml ``` ] .debug[[k8s/admission.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/admission.md)] --- ## Add our self-signed cert to the `caBundle` - The API server won't trust our self-signed certificate by default - We need to add it to the `caBundle` field in the webhook configuration - The `caBundle` will be our `cert.pem` file, encoded in base64 .debug[[k8s/admission.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/admission.md)] --- Shell to the rescue!
.lab[ - Load up our cert and encode it in base64: ```bash CA=$(base64 -w0 < cert.pem) ``` - Define a patch operation to update the `caBundle`: ```bash PATCH='[{ "op": "replace", "path": "/webhooks/0/clientConfig/caBundle", "value":"'$CA'" }]' ``` - Patch the webhook configuration: ```bash kubectl patch validatingwebhookconfiguration \ admission.webhook.container.training \ --type='json' -p="$PATCH" ``` ] .debug[[k8s/admission.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/admission.md)] --- ## Try it out! - Keep an eye on the API server logs - Tail the logs of the pod running the webhook server - Create a few pods; we should see requests in the webhook server logs - Check that the label `color` is enforced correctly (it should only allow values of `red`, `green`, `blue`) ??? :EN:- Dynamic admission control with webhooks :FR:- Contrôle d'admission dynamique (webhooks) .debug[[k8s/admission.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/admission.md)] --- class: pic .interstitial[] --- name: toc-policy-management-with-kyverno class: title Policy Management with Kyverno .nav[ [Previous part](#toc-dynamic-admission-control) | [Back to table of contents](#toc-part-2) | [Next part](#toc-exercise--generating-ingress-with-kyverno) ] .debug[(automatically generated title slide)] --- # Policy Management with Kyverno - The Kubernetes permission management system is very flexible ... - ... But it can't express *everything!* - Examples: - forbid using `:latest` image tag - enforce that each Deployment, Service, etc. has an `owner` label <br/>(except in e.g. 
`kube-system`) - enforce that each container has at least a `readinessProbe` healthcheck - How can we address that, and express these more complex *policies?* .debug[[k8s/kyverno.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/kyverno.md)] --- ## Admission control - The Kubernetes API server provides a generic mechanism called *admission control* - Admission controllers will examine each write request, and can: - approve/deny it (for *validating* admission controllers) - additionally *update* the object (for *mutating* admission controllers) - These admission controllers can be: - plug-ins built into the Kubernetes API server <br/>(selectively enabled/disabled by e.g. command-line flags) - webhooks registered dynamically with the Kubernetes API server .debug[[k8s/kyverno.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/kyverno.md)] --- ## What's Kyverno? - Policy management solution for Kubernetes - Open source (https://github.com/kyverno/kyverno/) - Compatible with all clusters (doesn't require reconfiguring the control plane, enabling feature gates...) - We don't endorse / support it in a particular way, but we think it's cool - It's not the only solution! (see e.g. [Open Policy Agent](https://www.openpolicyagent.org/docs/v0.12.2/kubernetes-admission-control/)) .debug[[k8s/kyverno.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/kyverno.md)] --- ## What can Kyverno do? - *Validate* resource manifests (accept/deny depending on whether they conform to our policies) - *Mutate* resources when they get created or updated (to add/remove/change fields on the fly) - *Generate* additional resources when a resource gets created (e.g.
when a namespace is created, automatically add quotas and limits) - *Audit* existing resources (warn about resources that violate certain policies) .debug[[k8s/kyverno.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/kyverno.md)] --- ## How does it do it? - Kyverno is implemented as a *controller* or *operator* - It typically runs as a Deployment on our cluster - Policies are defined as *custom resources* - They are implemented with a set of *dynamic admission control webhooks* -- 🤔 -- - Let's unpack that! .debug[[k8s/kyverno.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/kyverno.md)] --- ## Custom resource definitions - When we install Kyverno, it will register new resource types: - Policy and ClusterPolicy (per-namespace and cluster-scope policies) - PolicyReport and ClusterPolicyReport (used in audit mode) - GenerateRequest (used internally when generating resources asynchronously) - We will be able to do e.g. `kubectl get policyreports --all-namespaces` (to see policy violations across all namespaces) - Policies will be defined in YAML and registered/updated with e.g.
`kubectl apply` .debug[[k8s/kyverno.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/kyverno.md)] --- ## Dynamic admission control webhooks - When we install Kyverno, it will register a few webhooks for its use (by creating ValidatingWebhookConfiguration and MutatingWebhookConfiguration resources) - All subsequent resource modifications are submitted to these webhooks (creations, updates, deletions) .debug[[k8s/kyverno.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/kyverno.md)] --- ## Controller - When we install Kyverno, it creates a Deployment (and therefore, a Pod) - That Pod runs the server used by the webhooks - It also runs a controller that will: - run checks in the background (and generate PolicyReport objects) - process GenerateRequest objects asynchronously .debug[[k8s/kyverno.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/kyverno.md)] --- ## Kyverno in action - We're going to install Kyverno on our cluster - Then, we will use it to implement a few policies .debug[[k8s/kyverno.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/kyverno.md)] --- ## Installing Kyverno - Kyverno can be installed with a (big) YAML manifest - ... or with a Helm chart (which lets us customize a few things) .lab[ - Install Kyverno: ```bash kubectl create -f https://raw.githubusercontent.com/kyverno/kyverno/release-1.5/definitions/release/install.yaml ``` ] .debug[[k8s/kyverno.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/kyverno.md)] --- ## Kyverno policies in a nutshell - Which resources does it *select?* - can specify resources to *match* and/or *exclude* - can specify *kinds* and/or *selector* and/or users/roles doing the action - Which operation should be done?
- validate, mutate, or generate - For validation, whether it should *enforce* or *audit* failures - Operation details (what exactly to validate, mutate, or generate) .debug[[k8s/kyverno.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/kyverno.md)] --- ## Painting pods - As an example, we'll implement a policy regarding "Pod color" - The color of a Pod is the value of the label `color` - Example: `kubectl label pod hello color=yellow` to paint a Pod yellow - We want to implement the following policies: - color is optional (i.e. the label is not required) - if color is set, it *must* be `red`, `green`, or `blue` - once the color has been set, it cannot be changed - once the color has been set, it cannot be removed .debug[[k8s/kyverno.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/kyverno.md)] --- ## Immutable primary colors, take 1 - First, we will add a policy to block forbidden colors (i.e. only allow `red`, `green`, or `blue`) - One possible approach: - *match* all pods that have a `color` label that is not `red`, `green`, or `blue` - *deny* these pods - We could also *match* all pods, then *deny* with a condition .debug[[k8s/kyverno.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/kyverno.md)] --- .small[ ```yaml apiVersion: kyverno.io/v1 kind: ClusterPolicy metadata: name: pod-color-policy-1 spec: validationFailureAction: enforce rules: - name: ensure-pod-color-is-valid match: resources: kinds: - Pod selector: matchExpressions: - key: color operator: Exists - key: color operator: NotIn values: [ red, green, blue ] validate: message: "If it exists, the label color must be red, green, or blue." deny: {} ``` ] .debug[[k8s/kyverno.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/kyverno.md)] --- ## Testing without the policy - First, let's create a pod with an "invalid" label (while we still can!)
- We will use this later .lab[ - Create a pod: ```bash kubectl run test-color-0 --image=nginx ``` - Apply a color label: ```bash kubectl label pod test-color-0 color=purple ``` ] .debug[[k8s/kyverno.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/kyverno.md)] --- ## Load and try the policy .lab[ - Load the policy: ```bash kubectl apply -f ~/container.training/k8s/kyverno-pod-color-1.yaml ``` - Create a pod: ```bash kubectl run test-color-1 --image=nginx ``` - Try to apply a few color labels: ```bash kubectl label pod test-color-1 color=purple kubectl label pod test-color-1 color=red kubectl label pod test-color-1 color- ``` ] .debug[[k8s/kyverno.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/kyverno.md)] --- ## Immutable primary colors, take 2 - Next rule: once a `color` label has been added, it cannot be changed (i.e. if `color=red`, we can't change it to `color=blue`) - Our approach: - *match* all pods - add a *precondition* matching pods that have a `color` label <br/> (both in their "before" and "after" states) - *deny* these pods if their `color` label has changed - Again, other approaches are possible! .debug[[k8s/kyverno.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/kyverno.md)] --- .small[ ```yaml apiVersion: kyverno.io/v1 kind: ClusterPolicy metadata: name: pod-color-policy-2 spec: validationFailureAction: enforce background: false rules: - name: prevent-color-change match: resources: kinds: - Pod preconditions: - key: "{{ request.operation }}" operator: Equals value: UPDATE - key: "{{ request.oldObject.metadata.labels.color }}" operator: NotEquals value: "" - key: "{{ request.object.metadata.labels.color }}" operator: NotEquals value: "" validate: message: "Once label color has been added, it cannot be changed." 
deny: conditions: - key: "{{ request.object.metadata.labels.color }}" operator: NotEquals value: "{{ request.oldObject.metadata.labels.color }}" ``` ] .debug[[k8s/kyverno.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/kyverno.md)] --- ## Invalid references - We can access the `color` label through `{{ request.object.metadata.labels.color }}` - If we reference a label (or any field) that doesn't exist, the policy fails - Except in *preconditions*: it then evaluates to an empty string - We use a *precondition* to make sure the label exists in both "old" and "new" objects - Then, in the *deny* block, we can compare the old and new values (and reject changes) - "Old" and "new" versions of the pod can be referenced through `{{ request.oldObject }}` and `{{ request.object }}` .debug[[k8s/kyverno.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/kyverno.md)] --- ## Load and try the policy .lab[ - Load the policy: ```bash kubectl apply -f ~/container.training/k8s/kyverno-pod-color-2.yaml ``` - Create a pod: ```bash kubectl run test-color-2 --image=nginx ``` - Try to apply a few color labels: ```bash kubectl label pod test-color-2 color=purple kubectl label pod test-color-2 color=red kubectl label pod test-color-2 color=blue --overwrite ``` ] .debug[[k8s/kyverno.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/kyverno.md)] --- ## `background` - What is this `background: false` option, and why do we need it? -- - Admission controllers are only invoked when we change an object - Existing objects are not affected (e.g. if we have a pod with `color=pink` *before* installing our policy) - Kyverno can also run checks in the background, and report violations (we'll see later how they are reported) - `background: false` disables that -- - Alright, but ... *why* do we need it?
.debug[[k8s/kyverno.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/kyverno.md)] --- ## Accessing `AdmissionRequest` context - In this specific policy, we want to prevent an *update* (as opposed to a mere *create* operation) - We want to compare the *old* and *new* version (to check if a specific label was changed or removed) - The `AdmissionRequest` object has `object` and `oldObject` fields (the `AdmissionRequest` object is the thing that gets submitted to the webhook) - We access the `AdmissionRequest` object through `{{ request }}` -- - Alright, but ... what's the link with `background: false`? .debug[[k8s/kyverno.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/kyverno.md)] --- ## `{{ request }}` - The `{{ request }}` context is only available when there is an `AdmissionRequest` - When a resource is "at rest", there is no `{{ request }}` (and no old/new) - Therefore, a policy that uses `{{ request }}` cannot validate existing objects (it can only be used when an object is actually created/updated/deleted) .debug[[k8s/kyverno.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/kyverno.md)] --- ## Immutable primary colors, take 3 - Last rule: once a `color` label has been added, it cannot be removed - Our approach is to match all pods that: - *had* a `color` label (in `request.oldObject`) - *don't have* a `color` label (in `request.object`) - And *deny* these pods - Again, other approaches are possible!
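Taken together, the three "immutable primary colors" rules describe the same policy that the Flask webhook enforces. As an illustration only (plain Python rather than Kyverno syntax; `check_color` is a hypothetical helper), here is that policy written as one function over the labels before and after the request, with `None` standing for "no old object" on a CREATE:

```python
ALLOWED_COLORS = {"red", "green", "blue"}


def check_color(old_labels, new_labels):
    """Return (allowed, message) for a Pod label change.

    old_labels is None on CREATE; otherwise it holds the labels before the update.
    """
    old_color = (old_labels or {}).get("color")
    new_color = (new_labels or {}).get("color")

    # Take 1: if the label is set, it must be a primary color
    if new_color is not None and new_color not in ALLOWED_COLORS:
        return False, "If it exists, the label color must be red, green, or blue."
    if old_color is not None:
        # Take 3: once added, the label cannot be removed
        if new_color is None:
            return False, "Once label color has been added, it cannot be removed."
        # Take 2: once added, the label cannot be changed
        if new_color != old_color:
            return False, "Once label color has been added, it cannot be changed."
    return True, None
```

The Kyverno version splits these checks into three separate policies so that each one can be loaded and tested on its own; a single function like this evaluates them in one pass, with the same outcomes for these cases.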
.debug[[k8s/kyverno.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/kyverno.md)] --- .small[ ```yaml apiVersion: kyverno.io/v1 kind: ClusterPolicy metadata: name: pod-color-policy-3 spec: validationFailureAction: enforce background: false rules: - name: prevent-color-change match: resources: kinds: - Pod preconditions: - key: "{{ request.operation }}" operator: Equals value: UPDATE - key: "{{ request.oldObject.metadata.labels.color }}" operator: NotEquals value: "" - key: "{{ request.object.metadata.labels.color }}" operator: Equals value: "" validate: message: "Once label color has been added, it cannot be removed." deny: conditions: ``` ] .debug[[k8s/kyverno.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/kyverno.md)] --- ## Load and try the policy .lab[ - Load the policy: ```bash kubectl apply -f ~/container.training/k8s/kyverno-pod-color-3.yaml ``` - Create a pod: ```bash kubectl run test-color-3 --image=nginx ``` - Try to apply a few color labels: ```bash kubectl label pod test-color-3 color=purple kubectl label pod test-color-3 color=red kubectl label pod test-color-3 color- ``` ] .debug[[k8s/kyverno.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/kyverno.md)] --- ## Background checks - What about the `test-color-0` pod that we created initially? (remember: we did set `color=purple`) - We can see the infringing Pod in a PolicyReport .lab[ - Check that the pod still has an "invalid" color: ```bash kubectl get pods -L color ``` - List PolicyReports: ```bash kubectl get policyreports kubectl get polr ``` ] (Sometimes it takes a little while for the infringement to show up, though.)
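When there are many reports, it can help to extract only the failures from `kubectl get policyreports -o json`. A minimal sketch, assuming the `wgpolicyk8s.io` PolicyReport layout used by Kyverno (a `results` list whose entries carry `policy`, `rule`, `result`, and `resources`); these field names should be double-checked against the CRD installed on the cluster, and `failing_results` is a hypothetical helper:

```python
def failing_results(report_list):
    """Yield (namespace/name, policy, rule) for every failed result.

    report_list is the parsed JSON of `kubectl get policyreports -o json`
    (assumed shape: {"items": [{"results": [...]}, ...]}).
    """
    for report in report_list.get("items", []):
        for result in report.get("results", []):
            if result.get("result") != "fail":
                continue  # skip "pass", "warn", "error", "skip"
            for resource in result.get("resources", []):
                target = f'{resource.get("namespace", "")}/{resource.get("name", "")}'
                yield target, result.get("policy"), result.get("rule")
```

For example, after `json.load()`-ing the `kubectl` output, `list(failing_results(data))` should list `test-color-0` once the background scan has caught up.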
.debug[[k8s/kyverno.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/kyverno.md)] --- ## Generating objects - When we create a Namespace, we also want to automatically create: - a LimitRange (to set default CPU and RAM requests and limits) - a ResourceQuota (to limit the resources used by the namespace) - a NetworkPolicy (to isolate the namespace) - We can do that with a Kyverno policy with a *generate* action (it is mutually exclusive with the *validate* action) .debug[[k8s/kyverno.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/kyverno.md)] --- ## Overview - The *generate* action must specify: - the `kind` of resource to generate - the `name` of the resource to generate - its `namespace`, when applicable - *either* a `data` structure, to be used to populate the resource - *or* a `clone` reference, to copy an existing resource Note: the `apiVersion` field appears to be optional. .debug[[k8s/kyverno.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/kyverno.md)] --- ## In practice - We will use the policy [k8s/kyverno-namespace-setup.yaml](https://github.com/jpetazzo/container.training/tree/master/k8s/kyverno-namespace-setup.yaml) - We need to generate 3 resources, so we have 3 rules in the policy - Excerpt: ```yaml generate: kind: LimitRange name: default-limitrange namespace: "{{request.object.metadata.name}}" data: spec: limits: ``` - Note that we have to specify the `namespace` (and we infer it from the name of the resource being created, i.e. the Namespace) .debug[[k8s/kyverno.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/kyverno.md)] --- ## Lifecycle - After generated objects have been created, we can change them (Kyverno won't update them) - Except if we use `clone` together with the `synchronize` flag (in that case, Kyverno will watch the cloned resource) - This is convenient for e.g. 
ConfigMaps shared between Namespaces - Objects are generated only at *creation* (not when updating an old object) .debug[[k8s/kyverno.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/kyverno.md)] --- ## Asynchronous creation - Kyverno creates resources asynchronously (by creating a GenerateRequest resource first) - This is useful when the resource cannot be created right away (because of permissions or dependency issues) - Kyverno will periodically loop through the pending GenerateRequests - Once the resource is created, the GenerateRequest is marked as Completed .debug[[k8s/kyverno.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/kyverno.md)] --- ## Footprint - 7 CRDs - 5 webhooks - 2 Services, 1 Deployment, 2 ConfigMaps - Internal resources (GenerateRequest) "parked" in a Namespace - Kyverno packs a lot of features into a small footprint .debug[[k8s/kyverno.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/kyverno.md)] --- ## Strengths - Kyverno is very easy to install (it's hard to get any easier than a single `kubectl create -f`) - The setup of the webhooks is fully automated (including certificate generation) - It offers both namespaced and cluster-scope policies - The policy language leverages existing constructs (e.g. `matchExpressions`) .debug[[k8s/kyverno.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/kyverno.md)] --- ## Caveats - The `{{ request }}` context is powerful, but difficult to validate (Kyverno can't know ahead of time how it will be populated) - Advanced policies (with conditionals) have unique, exotic syntax: ```yaml spec: =(volumes): =(hostPath): path: "!/var/run/docker.sock" ``` - Writing and validating policies can be difficult .debug[[k8s/kyverno.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/kyverno.md)] --- class: extra-details ## Pods created by controllers - When e.g.
a ReplicaSet or DaemonSet creates a pod, it "owns" it (the ReplicaSet or DaemonSet is listed in the Pod's `.metadata.ownerReferences`) - Kyverno treats these Pods differently - If my understanding of the code is correct (big *if*): - it skips validation for "owned" Pods - instead, it validates their controllers - this way, Kyverno can report errors on the controller instead of the pod - This can be a bit confusing when testing policies on such pods! ??? :EN:- Policy Management with Kyverno :FR:- Gestion de *policies* avec Kyverno .debug[[k8s/kyverno.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/kyverno.md)] --- class: pic .interstitial[] --- name: toc-exercise--generating-ingress-with-kyverno class: title Exercise — Generating Ingress With Kyverno .nav[ [Previous part](#toc-policy-management-with-kyverno) | [Back to table of contents](#toc-part-2) | [Next part](#toc-resource-limits) ] .debug[(automatically generated title slide)] --- # Exercise — Generating Ingress With Kyverno When a Service gets created... *(for instance, Service `blue` in Namespace `rainbow`)* ...Automatically generate an Ingress. *(for instance, with host name `blue.rainbow.MYDOMAIN.COM`)* .debug[[exercises/kyverno-ingress-domain-name-details.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/exercises/kyverno-ingress-domain-name-details.md)] --- ## Goals - Step 1: expose all services with a hard-coded domain name - Step 2: only expose services that have a port named `http` - Step 3: configure the domain name with a per-namespace ConfigMap (e.g. 
`kubectl create configmap ingress-domain-name --from-literal=domain=1.2.3.4.nip.io`)

.debug[[exercises/kyverno-ingress-domain-name-details.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/exercises/kyverno-ingress-domain-name-details.md)]

---

## Hints

- We want to use a Kyverno `generate` ClusterPolicy

- For step 1, check [Generate Resources](https://kyverno.io/docs/writing-policies/generate/) documentation

- For step 2, check [Preconditions](https://kyverno.io/docs/writing-policies/preconditions/) documentation

- For step 3, check [External Data Sources](https://kyverno.io/docs/writing-policies/external-data-sources/) documentation

.debug[[exercises/kyverno-ingress-domain-name-details.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/exercises/kyverno-ingress-domain-name-details.md)]

---

class: pic

.interstitial[]

---

name: toc-resource-limits
class: title

Resource Limits

.nav[
[Previous part](#toc-exercise--generating-ingress-with-kyverno)
|
[Back to table of contents](#toc-part-3)
|
[Next part](#toc-defining-min-max-and-default-resources)
]

.debug[(automatically generated title slide)]

---

# Resource Limits

- We can attach resource indications to our pods

  (or rather: to the *containers* in our pods)

- We can specify *limits* and/or *requests*

- We can specify quantities of CPU and/or memory

.debug[[k8s/resource-limits.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/resource-limits.md)]

---

## CPU vs memory

- CPU is a *compressible resource*

  (it can be preempted immediately without adverse effect)

- Memory is an *incompressible resource*

  (it needs to be swapped out to be reclaimed; and this is costly)

- As a result, exceeding limits will have different consequences for CPU and memory

.debug[[k8s/resource-limits.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/resource-limits.md)]

---

## Exceeding CPU limits

- CPU can be reclaimed instantaneously

  (in
fact, it is preempted hundreds of times per second, at each context switch)

- If a container uses too much CPU, it can be throttled

  (it will be scheduled less often)

- The processes in that container will run slower

  (or rather: they will not run faster)

.debug[[k8s/resource-limits.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/resource-limits.md)]

---

class: extra-details

## CPU limits implementation details

- A container with a CPU limit will be "rationed" by the kernel

- Every `cfs_period_us`, it will receive a CPU quota, like an "allowance"

  (that interval defaults to 100ms)

- Once it has used its quota, it will be stalled until the next period

- This can easily result in throttling for bursty workloads

  (see details on next slide)

.debug[[k8s/resource-limits.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/resource-limits.md)]

---

class: extra-details

## A bursty example

- Web service receives one request per minute

- Each request takes 1 second of CPU

- Average load: 1.67%

- Let's say we set a CPU limit of 10%

- This means CPU quotas of 10ms every 100ms

- Obtaining the quota for 1 second of CPU will take 10 seconds

- Observed latency will be 10 seconds (... actually 9.9s) instead of 1 second

  (real-life scenarios will of course be less extreme, but they do happen!)

.debug[[k8s/resource-limits.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/resource-limits.md)]

---

class: extra-details

## Multi-core scheduling details

- Each core gets a small share of the container's CPU quota

  (this avoids locking and contention on the "global" quota for the container)

- By default, the kernel distributes that quota to CPUs in 5ms increments

  (tunable with `kernel.sched_cfs_bandwidth_slice_us`)

- If a containerized process (or thread) uses up its local CPU quota:

  *it gets more from the "global" container quota (if there's some left)*

- If it "yields" (e.g.
sleeps for I/O) before using its local CPU quota:

  *the quota is **soon** returned to the "global" container quota, **minus** 1ms*

.debug[[k8s/resource-limits.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/resource-limits.md)]

---

class: extra-details

## Low quotas on machines with many cores

- The local CPU quota is not immediately returned to the global quota

  - this reduces locking and contention on the global quota

  - but this can cause starvation when many threads/processes become runnable

- That 1ms that "stays" on the local CPU quota is often useful

  - if the thread/process becomes runnable, it can be scheduled immediately

  - again, this reduces locking and contention on the global quota

  - but if the thread/process doesn't become runnable, it is wasted!

  - this can become a huge problem on machines with many cores

.debug[[k8s/resource-limits.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/resource-limits.md)]

---

class: extra-details

## CPU limits in a nutshell

- Beware if you run small bursty workloads on machines with many cores!

  ("highly-threaded, user-interactive, non-cpu bound applications")

- Check the `nr_throttled` and `throttled_time` metrics in `cpu.stat`

- Possible solutions/workarounds:

  - be generous with the limits

  - make sure your kernel has the [appropriate patch](https://lkml.org/lkml/2019/5/17/581)

  - use [static CPU manager policy](https://kubernetes.io/docs/tasks/administer-cluster/cpu-management-policies/#static-policy)

For more details, check [this blog post](https://erickhun.com/posts/kubernetes-faster-services-no-cpu-limits/) or these ones ([part 1](https://engineering.indeedblog.com/blog/2019/12/unthrottled-fixing-cpu-limits-in-the-cloud/), [part 2](https://engineering.indeedblog.com/blog/2019/12/cpu-throttling-regression-fix/)).
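The arithmetic behind the bursty example can be spelled out as follows. This is a sketch that assumes the default 100ms `cfs_period_us`, a single-threaded request handler, and the usual kubelet conversion of a CPU limit into a CFS quota (limit in millicores × period / 1000):

$$
\begin{aligned}
\text{cfs\_quota\_us} &= \frac{100\,\text{m} \times 100\,000\,\mu\text{s}}{1000} = 10\,000\,\mu\text{s} = 10\,\text{ms per period} \\
\text{periods needed for 1 s of CPU} &= \frac{1000\,\text{ms}}{10\,\text{ms}} = 100 \\
\text{observed latency} &\approx 99 \times 100\,\text{ms} + 10\,\text{ms} = 9.91\,\text{s}
\end{aligned}
$$

The last period only needs its first 10ms, which is why the latency lands just under 10 seconds instead of exactly 10.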
.debug[[k8s/resource-limits.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/resource-limits.md)]

---

## Exceeding memory limits

- Memory needs to be swapped out before being reclaimed

- "Swapping" means writing memory pages to disk, which is very slow

- On a classic system, a process that swaps can get 1000x slower

  (because disk I/O is 1000x slower than memory I/O)

- Exceeding the memory limit (even by a small amount) can reduce performance *a lot*

- Kubernetes *does not support swap* (more on that later!)

- Exceeding the memory limit will cause the container to be killed

.debug[[k8s/resource-limits.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/resource-limits.md)]

---

## Limits vs requests

- Limits are "hard limits" (they can't be exceeded)

  - a container exceeding its memory limit is killed

  - a container exceeding its CPU limit is throttled

- Requests are used for scheduling purposes

  - a container using *less* than what it requested will never be killed or throttled

  - the scheduler uses the requested sizes to determine placement

  - the resources requested by all pods on a node will never exceed the node size

.debug[[k8s/resource-limits.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/resource-limits.md)]

---

## Pod quality of service

Each pod is assigned a QoS class (visible in `status.qosClass`).
- If limits = requests:

  - as long as the container uses less than the limit, it won't be affected

  - if all containers in a pod have *(limits=requests)*, QoS is considered "Guaranteed"

- If requests < limits:

  - as long as the container uses less than the request, it won't be affected

  - otherwise, it might be killed/evicted if the node gets overloaded

  - if at least one container has *(requests<limits)*, QoS is considered "Burstable"

- If none of the containers in a pod has any requests or limits, QoS is considered "BestEffort"

.debug[[k8s/resource-limits.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/resource-limits.md)]

---

## Quality of service impact

- When a node is overloaded, BestEffort pods are killed first

- Then, Burstable pods that exceed their requests

- Burstable and Guaranteed pods below their requests are never killed

  (except if their node fails)

- If we only use Guaranteed pods, no pod should ever be killed

  (as long as they stay within their limits)

(Pod QoS is also explained in [this page](https://kubernetes.io/docs/tasks/configure-pod-container/quality-service-pod/) of the Kubernetes documentation and in [this blog post](https://medium.com/google-cloud/quality-of-service-class-qos-in-kubernetes-bb76a89eb2c6).)

.debug[[k8s/resource-limits.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/resource-limits.md)]

---

## Where is my swap?
- The semantics of memory and swap limits on Linux cgroups are complex

- With cgroups v1, it's not possible to disable swap for a cgroup

  (the closest option is to [reduce "swappiness"](https://unix.stackexchange.com/questions/77939/turning-off-swapping-for-only-one-process-with-cgroups))

- It is possible with cgroups v2 (see the [kernel docs](https://www.kernel.org/doc/html/latest/admin-guide/cgroup-v2.html) and the [fbatx docs](https://facebookmicrosites.github.io/cgroup2/docs/memory-controller.html#using-swap))

- Cgroups v2 aren't widely deployed yet

- The architects of Kubernetes wanted to ensure that Guaranteed pods never swap

- The simplest solution was to disable swap entirely

.debug[[k8s/resource-limits.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/resource-limits.md)]

---

## Alternative point of view

- Swap enables paging¹ of anonymous² memory

- Even when swap is disabled, Linux will still page memory for:

  - executables, libraries

  - mapped files

- Disabling swap *will reduce performance and available resources*

- For a good time, read [kubernetes/kubernetes#53533](https://github.com/kubernetes/kubernetes/issues/53533)

- Also read this [excellent blog post about swap](https://jvns.ca/blog/2017/02/17/mystery-swap/)

¹Paging: reading/writing memory pages from/to disk to reclaim physical memory

²Anonymous memory: memory that is not backed by files or blocks

.debug[[k8s/resource-limits.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/resource-limits.md)]

---

## Enabling swap anyway

- If you don't care that pods are swapping, you can enable swap

- You will need to add the flag `--fail-swap-on=false` to kubelet

  (otherwise, it won't start!)
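If kubelet is configured through a configuration file rather than command-line flags, the equivalent setting should be the `failSwapOn` field of `KubeletConfiguration`. Here is a minimal sketch, assuming the `kubelet.config.k8s.io/v1beta1` API version used by kubelet config files at the time of writing (check the version your kubelet expects):

```yaml
# Minimal kubelet configuration file (sketch).
# Equivalent to passing --fail-swap-on=false on the kubelet command line:
# kubelet will start even if swap is enabled on the node.
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
failSwapOn: false
```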
.debug[[k8s/resource-limits.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/resource-limits.md)]

---

## Specifying resources

- Resource requests are expressed at the *container* level

- CPU is expressed in "virtual CPUs"

  (corresponding to the virtual CPUs offered by some cloud providers)

- CPU can be expressed with a decimal value, or even a "milli" suffix

  (so 100m = 0.1)

- Memory is expressed in bytes

- Memory can be expressed with k, M, G, T, Ki, Mi, Gi, Ti suffixes

  (corresponding to 10^3, 10^6, 10^9, 10^12, 2^10, 2^20, 2^30, 2^40)

.debug[[k8s/resource-limits.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/resource-limits.md)]

---

## Specifying resources in practice

This is what the spec of a Pod with resources will look like:

```yaml
containers:
- name: httpenv
  image: jpetazzo/httpenv
  resources:
    limits:
      memory: "100Mi"
      cpu: "100m"
    requests:
      memory: "100Mi"
      cpu: "10m"
```

This set of resources makes sure that this service won't be killed (as long as it stays below 100 MiB of RAM), but allows its CPU usage to be throttled if necessary.
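For comparison, here is a sketch of the same container with requests equal to limits for both CPU and memory. Assuming every other container in the Pod is set up the same way, the Pod would get the "Guaranteed" QoS class instead of "Burstable":

```yaml
containers:
- name: httpenv
  image: jpetazzo/httpenv
  resources:
    # requests == limits for both CPU and memory:
    # if all containers in the Pod do this, QoS is "Guaranteed"
    limits:
      memory: "100Mi"
      cpu: "100m"
    requests:
      memory: "100Mi"
      cpu: "100m"
```

The trade-off: the scheduler now sets aside 100m of CPU for this container instead of 10m, so fewer of these Pods fit on each node.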
.debug[[k8s/resource-limits.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/resource-limits.md)]

---

## Default values

- If we specify a limit without a request:

  the request is set to the limit

- If we specify a request without a limit:

  there will be no limit

  (which means that the limit will be the size of the node)

- If we don't specify anything:

  the request is zero and the limit is the size of the node

*Unless there are default values defined for our namespace!*

.debug[[k8s/resource-limits.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/resource-limits.md)]

---

## We need default resource values

- If we do not set resource values at all:

  - the limit is "the size of the node"

  - the request is zero

- This is generally *not* what we want

  - a container without a limit can use up all the resources of a node

  - if the request is zero, the scheduler can't make a smart placement decision

- To address this, we can set default values for resources

- This is done with a LimitRange object

.debug[[k8s/resource-limits.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/resource-limits.md)]

---

class: pic

.interstitial[]

---

name: toc-defining-min-max-and-default-resources
class: title

Defining min, max, and default resources

.nav[
[Previous part](#toc-resource-limits)
|
[Back to table of contents](#toc-part-3)
|
[Next part](#toc-namespace-quotas)
]

.debug[(automatically generated title slide)]

---

# Defining min, max, and default resources

- We can create LimitRange objects to indicate any combination of:

  - min and/or max resources allowed per pod

  - default resource *limits*

  - default resource *requests*

  - maximal burst ratio (*limit/request*)

- LimitRange objects are namespaced

- They apply to their namespace only

.debug[[k8s/resource-limits.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/resource-limits.md)]

---

## LimitRange example

```yaml
apiVersion: v1
kind: LimitRange
metadata:
  name: my-very-detailed-limitrange
spec:
  limits:
  - type: Container
    min:
      cpu: "100m"
    max:
      cpu: "2000m"
      memory: "1Gi"
    default:
      cpu: "500m"
      memory: "250Mi"
    defaultRequest:
      cpu: "500m"
```

.debug[[k8s/resource-limits.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/resource-limits.md)]

---

## Example explanation

The YAML on the previous slide shows an example LimitRange object specifying very detailed limits on CPU usage, and providing defaults on RAM usage.

Note the `type: Container` line: in the future, it might also be possible to specify limits per Pod, but it's not [officially documented yet](https://github.com/kubernetes/website/issues/9585).

.debug[[k8s/resource-limits.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/resource-limits.md)]

---

## LimitRange details

- LimitRange restrictions are enforced only when a Pod is created

  (they don't apply retroactively)

- They don't prevent creation of e.g. an invalid Deployment or DaemonSet

  (but the pods will not be created as long as the LimitRange is in effect)

- If there are multiple LimitRange restrictions, they all apply together

  (which means that it's possible to specify conflicting LimitRanges,
  <br/>preventing any Pod from being created)

- If a LimitRange specifies a `max` for a resource but no `default`,
  <br/>that `max` value becomes the `default` limit too

.debug[[k8s/resource-limits.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/resource-limits.md)]

---

class: pic

.interstitial[]

---

name: toc-namespace-quotas
class: title

Namespace quotas

.nav[
[Previous part](#toc-defining-min-max-and-default-resources)
|
[Back to table of contents](#toc-part-3)
|
[Next part](#toc-limiting-resources-in-practice)
]

.debug[(automatically generated title slide)]

---

# Namespace quotas

- We can also set quotas per namespace

- Quotas apply to the total usage in a namespace

  (e.g.
total CPU limits of all pods in a given namespace)

- Quotas can apply to resource limits and/or requests

  (like the CPU and memory limits that we saw earlier)

- Quotas can also apply to other resources:

  - "extended" resources (like GPUs)

  - storage size

  - number of objects (number of pods, services...)

.debug[[k8s/resource-limits.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/resource-limits.md)]

---

## Creating a quota for a namespace

- Quotas are enforced by creating a ResourceQuota object

- ResourceQuota objects are namespaced, and apply to their namespace only

- We can have multiple ResourceQuota objects in the same namespace

- The most restrictive values are used

.debug[[k8s/resource-limits.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/resource-limits.md)]

---

## Limiting total CPU/memory usage

- The following YAML specifies an upper bound for *limits* and *requests*:

```yaml
apiVersion: v1
kind: ResourceQuota
metadata:
  name: a-little-bit-of-compute
spec:
  hard:
    requests.cpu: "10"
    requests.memory: 10Gi
    limits.cpu: "20"
    limits.memory: 20Gi
```

These quotas will apply to the namespace where the ResourceQuota is created.

.debug[[k8s/resource-limits.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/resource-limits.md)]

---

## Limiting number of objects

- The following YAML specifies how many objects of specific types can be created:

```yaml
apiVersion: v1
kind: ResourceQuota
metadata:
  name: quota-for-objects
spec:
  hard:
    pods: 100
    services: 10
    secrets: 10
    configmaps: 10
    persistentvolumeclaims: 20
    services.nodeports: 0
    services.loadbalancers: 0
    count/roles.rbac.authorization.k8s.io: 10
```

(The `count/` syntax allows limiting arbitrary objects, including CRDs.)
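The `count/` keys follow the `count/<resource>.<group>` pattern. As a sketch, a quota on Deployments and Jobs could look like the following; `widgets.example.com` is a hypothetical CRD name used only for illustration:

```yaml
apiVersion: v1
kind: ResourceQuota
metadata:
  name: quota-for-more-objects
spec:
  hard:
    # count/<resource>.<group> for resources in named API groups
    count/deployments.apps: 20
    count/jobs.batch: 50
    # hypothetical custom resource "widgets" in API group "example.com"
    count/widgets.example.com: 5
```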
.debug[[k8s/resource-limits.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/resource-limits.md)]

---

## YAML vs CLI

- Quotas can be created with a YAML definition

- ...Or with the `kubectl create quota` command

- Example:
  ```bash
  kubectl create quota my-resource-quota --hard=pods=300,limits.memory=300Gi
  ```

- With both YAML and CLI forms, the values are always under the `hard` section

  (there is no `soft` quota)

.debug[[k8s/resource-limits.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/resource-limits.md)]

---

## Viewing current usage

When a ResourceQuota is created, we can see how much of it is used:

```
kubectl describe resourcequota my-resource-quota

Name:                   my-resource-quota
Namespace:              default
Resource                Used  Hard
--------                ----  ----
pods                    12    100
services                1     5
services.loadbalancers  0     0
services.nodeports      0     0
```

.debug[[k8s/resource-limits.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/resource-limits.md)]

---

## Advanced quotas and PriorityClass

- Pods can have a *priority*

- The priority is a number from 0 to 1000000000

  (or even higher for system-defined priorities)

- High number = high priority = "more important" Pod

- Pods with a higher priority can *preempt* Pods with lower priority

  (= low priority pods will be *evicted* if needed)

- Useful when mixing workloads in resource-constrained environments

.debug[[k8s/resource-limits.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/resource-limits.md)]

---

## Setting the priority of a Pod

- Create a PriorityClass

  (or use an existing one)

- When creating the Pod, set the field `spec.priorityClassName`

- If the field is not set:

  - if there is a PriorityClass with `globalDefault`, it is used

  - otherwise, the default priority will be zero

.debug[[k8s/resource-limits.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/resource-limits.md)]

---

class: extra-details

## PriorityClass and ResourceQuotas

- A ResourceQuota can include a list of *scopes* or a *scope selector*

- In that case, the quota will only apply to the scoped resources

- Example: limit the resources allocated to "high priority" Pods

- In that case, make sure that the quota is created in every Namespace

  (or use *admission configuration* to enforce it)

- See the [resource quotas documentation][quotadocs] for details

[quotadocs]: https://kubernetes.io/docs/concepts/policy/resource-quotas/#resource-quota-per-priorityclass

.debug[[k8s/resource-limits.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/resource-limits.md)]

---

class: pic

.interstitial[]

---

name: toc-limiting-resources-in-practice
class: title

Limiting resources in practice

.nav[
[Previous part](#toc-namespace-quotas)
|
[Back to table of contents](#toc-part-3)
|
[Next part](#toc-checking-node-and-pod-resource-usage)
]

.debug[(automatically generated title slide)]

---

# Limiting resources in practice

- We have at least three mechanisms:

  - requests and limits per Pod

  - LimitRange per namespace

  - ResourceQuota per namespace

- Let's see a simple recommendation to get started with resource limits

.debug[[k8s/resource-limits.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/resource-limits.md)]

---

## Set a LimitRange

- In each namespace, create a LimitRange object

- Set a small default CPU request and CPU limit

  (e.g. "100m")

- Set a default memory request and limit depending on your most common workload

  - for Java, Ruby: start with "1G"

  - for Go, Python, PHP, Node: start with "250M"

- Set upper bounds slightly below your expected node size

  (80-90% of your node size, with at least a 500M memory buffer)

.debug[[k8s/resource-limits.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/resource-limits.md)]

---

## Set a ResourceQuota

- In each namespace, create a ResourceQuota object

- Set generous CPU and memory limits

  (e.g.
half the cluster size if the cluster hosts multiple apps)

- Set generous object limits

  - these limits should not be here to constrain your users

  - they should catch a runaway process creating many resources

  - example: a custom controller creating many pods

.debug[[k8s/resource-limits.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/resource-limits.md)]

---

## Observe, refine, iterate

- Observe the resource usage of your pods

  (we will see how in the next chapter)

- Adjust individual pod limits

- If you see trends: adjust the LimitRange

  (rather than adjusting every individual set of pod limits)

- Observe the resource usage of your namespaces

  (with `kubectl describe resourcequota ...`)

- Rinse and repeat regularly

.debug[[k8s/resource-limits.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/resource-limits.md)]

---

## Viewing a namespace's limits and quotas

- `kubectl describe namespace` will display resource limits and quotas

.lab[

- Try it out:
  ```bash
  kubectl describe namespace default
  ```

- View limits and quotas for *all* namespaces:
  ```bash
  kubectl describe namespace
  ```

]

.debug[[k8s/resource-limits.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/resource-limits.md)]

---

## Additional resources

- [A Practical Guide to Setting Kubernetes Requests and Limits](http://blog.kubecost.com/blog/requests-and-limits/)

  - explains what requests and limits are

  - provides guidelines to set requests and limits

  - gives PromQL expressions to compute good values
    <br/>(our app needs to be running for a while)

- [Kube Resource Report](https://github.com/hjacobs/kube-resource-report/)

  - generates web reports on resource usage

  - [static demo](https://hjacobs.github.io/kube-resource-report/sample-report/output/index.html)
    |
    [live demo](https://kube-resource-report.demo.j-serv.de/applications.html)

???
:EN:- Setting compute resource limits
:EN:- Defining default policies for resource usage
:EN:- Managing cluster allocation and quotas
:EN:- Resource management in practice

:FR:- Allouer et limiter les ressources des conteneurs
:FR:- Définir des ressources par défaut
:FR:- Gérer les quotas de ressources au niveau du cluster
:FR:- Conseils pratiques

.debug[[k8s/resource-limits.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/resource-limits.md)]

---

class: pic

.interstitial[]

---

name: toc-checking-node-and-pod-resource-usage
class: title

Checking Node and Pod resource usage

.nav[
[Previous part](#toc-limiting-resources-in-practice)
|
[Back to table of contents](#toc-part-3)
|
[Next part](#toc-cluster-sizing)
]

.debug[(automatically generated title slide)]

---

# Checking Node and Pod resource usage

- We've installed a few things on our cluster so far

- How many resources (CPU, RAM) are we using?

- We need metrics!

.lab[

- Let's try the following command:
  ```bash
  kubectl top nodes
  ```

]

.debug[[k8s/metrics-server.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/metrics-server.md)]

---

## Is metrics-server installed?
- If we see a list of nodes, with CPU and RAM usage:

  *great, metrics-server is installed!*

- If we see `error: Metrics API not available`:

  *metrics-server isn't installed, so we'll install it!*

.debug[[k8s/metrics-server.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/metrics-server.md)]

---

## The resource metrics pipeline

- The `kubectl top` command relies on the Metrics API

- The Metrics API is part of the "[resource metrics pipeline]"

- The Metrics API isn't served by (built into) the Kubernetes API server

- It is made available through the [aggregation layer]

- It is usually served by a component called metrics-server

- It is optional (Kubernetes can function without it)

- It is necessary for some features (like the Horizontal Pod Autoscaler)

[resource metrics pipeline]: https://kubernetes.io/docs/tasks/debug-application-cluster/resource-metrics-pipeline/
[aggregation layer]: https://kubernetes.io/docs/concepts/extend-kubernetes/api-extension/apiserver-aggregation/

.debug[[k8s/metrics-server.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/metrics-server.md)]

---

## Other ways to get metrics

- We could use a SaaS like Datadog, New Relic...

- We could use a self-hosted solution like Prometheus

- Or we could use metrics-server

- What's special about metrics-server?

.debug[[k8s/metrics-server.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/metrics-server.md)]

---

## Pros/cons

Cons:

- no data retention (no history data, just instant numbers)

- only CPU and RAM of nodes and pods (no disk or network usage or I/O...)

Pros:

- very lightweight

- doesn't require storage

- used by Kubernetes autoscaling

.debug[[k8s/metrics-server.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/metrics-server.md)]

---

## Why metrics-server

- We may install something fancier later

  (think: Prometheus with Grafana)

- But metrics-server will work in *minutes*

- It will barely use resources on our cluster

- It's required for autoscaling anyway

.debug[[k8s/metrics-server.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/metrics-server.md)]

---

## How metrics-server works

- It runs a single Pod

- That Pod will fetch metrics from all our Nodes

- It will expose them through the Kubernetes API aggregation layer

  (we won't say much more about that aggregation layer; that's fairly advanced stuff!)

.debug[[k8s/metrics-server.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/metrics-server.md)]

---

## Installing metrics-server

- In a lot of places, this is done with a little bit of custom YAML

  (derived from the [official installation instructions](https://github.com/kubernetes-sigs/metrics-server#installation))

- We're going to use Helm one more time:
  ```bash
  helm upgrade --install metrics-server bitnami/metrics-server \
    --create-namespace --namespace metrics-server \
    --set apiService.create=true \
    --set extraArgs.kubelet-insecure-tls=true \
    --set extraArgs.kubelet-preferred-address-types=InternalIP
  ```

- What are these options for?
.debug[[k8s/metrics-server.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/metrics-server.md)]

---

## Installation options

- `apiService.create=true`

  register `metrics-server` with the Kubernetes aggregation layer

  (create an entry that will show up in `kubectl get apiservices`)

- `extraArgs.kubelet-insecure-tls=true`

  when connecting to nodes to collect their metrics, don't check kubelet TLS certs

  (because most kubelet certs include the node name, but not its IP address)

- `extraArgs.kubelet-preferred-address-types=InternalIP`

  when connecting to nodes, use their internal IP address instead of node name

  (because the latter requires an internal DNS, which is rarely configured)

.debug[[k8s/metrics-server.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/metrics-server.md)]

---

## Testing metrics-server

- After a minute or two, metrics-server should be up

- We should now be able to check Node resource usage:
  ```bash
  kubectl top nodes
  ```

- And Pod resource usage, too:
  ```bash
  kubectl top pods --all-namespaces
  ```

.debug[[k8s/metrics-server.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/metrics-server.md)]

---

## Keep some padding

- The RAM usage that we see should correspond more or less to the Resident Set Size

- Our pods also need some extra space for buffers, caches...

- Do not aim for 100% memory usage!

- Some more realistic targets:

  50% (for workloads with disk I/O and leveraging caching)

  90% (on very big nodes with mostly CPU-bound workloads)

  75% (anywhere in between!)
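To turn a usage target into a node size, divide the memory we expect to use by the target. As a purely hypothetical illustration, pods adding up to about 6 GiB of RSS at the 75% target would call for roughly 8 GiB of node memory:

$$
\text{node memory} \approx \frac{\text{total RSS}}{\text{target}} = \frac{6\,\text{GiB}}{0.75} = 8\,\text{GiB}
$$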
.debug[[k8s/metrics-server.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/metrics-server.md)]

---

## Other tools

- kube-capacity is a great CLI tool to view resources

  (https://github.com/robscott/kube-capacity)

- It can show requests and limits, and compare them with usage

- It can show utilization per node, or per pod

- kube-resource-report can generate HTML reports

  (https://github.com/hjacobs/kube-resource-report)

???

:EN:- The resource metrics pipeline
:EN:- Installing metrics-server

:FR:- Le *resource metrics pipeline*
:FR:- Installation de metrics-server

.debug[[k8s/metrics-server.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/metrics-server.md)]

---

class: pic

.interstitial[]

---

name: toc-cluster-sizing
class: title

Cluster sizing

.nav[
[Previous part](#toc-checking-node-and-pod-resource-usage)
|
[Back to table of contents](#toc-part-3)
|
[Next part](#toc-the-horizontal-pod-autoscaler)
]

.debug[(automatically generated title slide)]

---

# Cluster sizing

- What happens when the cluster gets full?

- How can we scale up the cluster?

- Can we do it automatically?

- What are other methods to address capacity planning?

.debug[[k8s/cluster-sizing.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/cluster-sizing.md)]

---

## When are we out of resources?

- kubelet monitors node resources:

  - memory

  - node disk usage (typically the root filesystem of the node)

  - image disk usage (where container images and RW layers are stored)

- For each resource, we can provide two thresholds:

  - a hard threshold (if it's met, it provokes immediate action)

  - a soft threshold (provokes action only after a grace period)

- Resource thresholds and grace periods are configurable

  (by passing kubelet command-line flags)

.debug[[k8s/cluster-sizing.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/cluster-sizing.md)]

---

## What happens then?
- If disk usage is too high:

  - kubelet will try to remove terminated pods

  - then, it will try to *evict* pods

- If memory usage is too high:

  - it will try to evict pods

- The node is marked as "under pressure"

- This temporarily prevents new pods from being scheduled on the node

.debug[[k8s/cluster-sizing.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/cluster-sizing.md)]

---

## Which pods get evicted?

- kubelet looks at the pods' QoS and PriorityClass

- First, pods with BestEffort QoS are considered

- Then, pods with Burstable QoS exceeding their *requests*

  (but only if the exceeding resource is the one that is low on the node)

- Finally, pods with Guaranteed QoS, and Burstable pods within their requests

- Within each group, pods are sorted by PriorityClass

- If there are pods with the same PriorityClass, they are sorted by usage excess

  (i.e. the pods whose usage exceeds their requests the most are evicted first)

.debug[[k8s/cluster-sizing.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/cluster-sizing.md)]

---

class: extra-details

## Eviction of Guaranteed pods

- *Normally*, pods with Guaranteed QoS should not be evicted

- A chunk of resources is reserved for node processes (like kubelet)

- It is expected that these processes won't use more than this reservation

- If they do use more resources anyway, all bets are off!

- If this happens, kubelet must evict Guaranteed pods to preserve node stability

  (or Burstable pods that are still within their requested usage)

.debug[[k8s/cluster-sizing.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/cluster-sizing.md)]

---

## What happens to evicted pods?
- The pod is terminated - It is marked as `Failed` at the API level - If the pod was created by a controller, the controller will recreate it - The pod will be recreated on another node, *if there are resources available!* - For more details about the eviction process, see: - [this documentation page](https://kubernetes.io/docs/tasks/administer-cluster/out-of-resource/) about resource pressure and pod eviction, - [this other documentation page](https://kubernetes.io/docs/concepts/configuration/pod-priority-preemption/) about pod priority and preemption. .debug[[k8s/cluster-sizing.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/cluster-sizing.md)] --- ## What if there are no resources available? - Sometimes, a pod cannot be scheduled anywhere: - all the nodes are under pressure, - or the pod requests more resources than are available - The pod then remains in `Pending` state until the situation improves .debug[[k8s/cluster-sizing.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/cluster-sizing.md)] --- ## Cluster scaling - One way to improve the situation is to add new nodes - This can be done automatically with the [Cluster Autoscaler](https://github.com/kubernetes/autoscaler/tree/master/cluster-autoscaler) - The autoscaler will automatically scale up: - if there are pods that failed to be scheduled - The autoscaler will automatically scale down: - if nodes have a low utilization for an extended period of time .debug[[k8s/cluster-sizing.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/cluster-sizing.md)] --- ## Restrictions, gotchas ... 
- The Cluster Autoscaler only supports a few cloud infrastructures (see [here](https://github.com/kubernetes/autoscaler/tree/master/cluster-autoscaler/cloudprovider) for a list) - The Cluster Autoscaler cannot scale down nodes that have pods using: - local storage - affinity/anti-affinity rules preventing them from being rescheduled - a restrictive PodDisruptionBudget .debug[[k8s/cluster-sizing.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/cluster-sizing.md)] --- ## Other ways to do capacity planning - "Running Kubernetes without nodes" - Systems like [Virtual Kubelet](https://virtual-kubelet.io/) or [Kiyot](https://static.elotl.co/docs/latest/kiyot/kiyot.html) can run pods using on-demand resources - Virtual Kubelet can leverage e.g. ACI or Fargate to run pods - Kiyot runs pods in ad-hoc EC2 instances (1 instance per pod) - Economic advantage (no wasted capacity) - Security advantage (stronger isolation between pods) Check [this blog post](http://jpetazzo.github.io/2019/02/13/running-kubernetes-without-nodes-with-kiyot/) for more details. ??? :EN:- What happens when the cluster is at, or over, capacity :EN:- Cluster sizing and scaling :FR:- Ce qui se passe quand il n'y a plus assez de ressources :FR:- Dimensionner et redimensionner ses clusters .debug[[k8s/cluster-sizing.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/cluster-sizing.md)] --- class: pic .interstitial[] --- name: toc-the-horizontal-pod-autoscaler class: title The Horizontal Pod Autoscaler .nav[ [Previous part](#toc-cluster-sizing) | [Back to table of contents](#toc-part-3) | [Next part](#toc-api-server-internals) ] .debug[(automatically generated title slide)] --- # The Horizontal Pod Autoscaler - What is the Horizontal Pod Autoscaler, or HPA?
- It is a controller that can perform *horizontal* scaling automatically - Horizontal scaling = changing the number of replicas (adding/removing pods) - Vertical scaling = changing the size of individual replicas (increasing/reducing CPU and RAM per pod) - Cluster scaling = changing the size of the cluster (adding/removing nodes) .debug[[k8s/horizontal-pod-autoscaler.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/horizontal-pod-autoscaler.md)] --- ## Principle of operation - Each HPA resource (or "policy") specifies: - which object to monitor and scale (e.g. a Deployment, ReplicaSet...) - min/max scaling ranges (the max is a safety limit!) - a target resource usage (e.g. CPU=80%, which is the default) - The HPA continuously monitors the CPU usage for the related object - It computes how many pods should be running: `TargetNumOfPods = ceil(sum(CurrentPodsCPUUtilization) / Target)` - It scales the related object up/down to this target number of pods .debug[[k8s/horizontal-pod-autoscaler.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/horizontal-pod-autoscaler.md)] --- ## Prerequisites - The metrics server needs to be running (i.e. we need to be able to see pod metrics with `kubectl top pods`) - The pods that we want to autoscale need to have resource requests (because the target CPU% is not absolute, but relative to the request) - The latter actually makes a lot of sense: - if a Pod doesn't have a CPU request, it might be using 10% of CPU... - ...but only because there is no CPU time available!
- this makes sure that we won't add pods to nodes that are already resource-starved .debug[[k8s/horizontal-pod-autoscaler.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/horizontal-pod-autoscaler.md)] --- ## Testing the HPA - We will start a CPU-intensive web service - We will send some traffic to that service - We will create an HPA policy - The HPA will automatically scale up the service for us .debug[[k8s/horizontal-pod-autoscaler.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/horizontal-pod-autoscaler.md)] --- ## A CPU-intensive web service - Let's use `jpetazzo/busyhttp` (it is a web server that will use 1s of CPU for each HTTP request) .lab[ - Deploy the web server: ```bash kubectl create deployment busyhttp --image=jpetazzo/busyhttp ``` - Expose it with a ClusterIP service: ```bash kubectl expose deployment busyhttp --port=80 ``` - Get the ClusterIP allocated to the service: ```bash kubectl get svc busyhttp ``` ] .debug[[k8s/horizontal-pod-autoscaler.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/horizontal-pod-autoscaler.md)] --- ## Monitor what's going on - Let's start a bunch of commands to watch what is happening .lab[ - Monitor pod CPU usage: ```bash watch kubectl top pods -l app=busyhttp ``` <!-- ```wait NAME``` ```tmux split-pane -v``` ```bash CLUSTERIP=$(kubectl get svc busyhttp -o jsonpath={.spec.clusterIP})``` --> - Monitor service latency: ```bash httping http://`$CLUSTERIP`/ ``` <!-- ```wait connected to``` ```tmux split-pane -v``` --> - Monitor cluster events: ```bash kubectl get events -w ``` <!-- ```wait Normal``` ```tmux split-pane -v``` ```bash CLUSTERIP=$(kubectl get svc busyhttp -o jsonpath={.spec.clusterIP})``` --> ] .debug[[k8s/horizontal-pod-autoscaler.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/horizontal-pod-autoscaler.md)] --- ## Send traffic to the service - We will use `ab` (Apache Bench) to send 
traffic .lab[ - Send a lot of requests to the service, with a concurrency level of 3: ```bash ab -c 3 -n 100000 http://`$CLUSTERIP`/ ``` <!-- ```wait be patient``` ```tmux split-pane -v``` ```tmux selectl even-vertical``` --> ] The latency (reported by `httping`) should increase above 3s. The CPU utilization should increase to 100%. (The server is single-threaded and won't go above 100%.) .debug[[k8s/horizontal-pod-autoscaler.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/horizontal-pod-autoscaler.md)] --- ## Create an HPA policy - There is a helper command to do that for us: `kubectl autoscale` .lab[ - Create the HPA policy for the `busyhttp` deployment: ```bash kubectl autoscale deployment busyhttp --max=10 ``` ] By default, it will assume a target of 80% CPU usage. This can also be set with `--cpu-percent=`. -- *The autoscaler doesn't seem to work. Why?* .debug[[k8s/horizontal-pod-autoscaler.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/horizontal-pod-autoscaler.md)] --- ## What did we miss? - The events stream gives us a hint, but to be honest, it's not very clear: `missing request for cpu` - We forgot to specify a resource request for our Deployment! 
- The HPA target is not an absolute CPU% - It is relative to the CPU requested by the pod .debug[[k8s/horizontal-pod-autoscaler.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/horizontal-pod-autoscaler.md)] --- ## Adding a CPU request - Let's edit the deployment and add a CPU request - Since our server can use up to 1 core, let's request 1 core .lab[ - Edit the Deployment definition: ```bash kubectl edit deployment busyhttp ``` <!-- ```wait Please edit``` ```keys /resources``` ```key ^J``` ```keys $xxxo requests:``` ```key ^J``` ```key Space``` ```key Space``` ```keys cpu: "1"``` ```key Escape``` ```keys :wq``` ```key ^J``` --> - In the `busyhttp` container (in the `containers` list), replace `resources: {}` with the following block: ```yaml resources: requests: cpu: "1" ``` ] .debug[[k8s/horizontal-pod-autoscaler.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/horizontal-pod-autoscaler.md)] --- ## Results - After saving and quitting, a rolling update happens (if `ab` or `httping` exits, make sure to restart it) - It will take a minute or two for the HPA to kick in: - the HPA runs every 15 seconds by default - it needs to gather metrics from the metrics server first - Further scaling is asymmetric: - by default, the HPA can scale up again as soon as the metrics justify it - it won't scale down until the lower recommendation has held for 5 minutes (the default downscale stabilization window) .debug[[k8s/horizontal-pod-autoscaler.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/horizontal-pod-autoscaler.md)] --- ## What about other metrics?
- The HPA in API group `autoscaling/v1` only supports CPU scaling - The HPA in API group `autoscaling/v2beta2` supports metrics from various API groups: - metrics.k8s.io, aka metrics server (per-Pod CPU and RAM) - custom.metrics.k8s.io, custom metrics per Pod - external.metrics.k8s.io, external metrics (not associated with Pods) - Kubernetes doesn't implement any of these API groups - Using these metrics requires [registering additional APIs](https://kubernetes.io/docs/tasks/run-application/horizontal-pod-autoscale/#support-for-metrics-apis) - The metrics provided by metrics server are standard; everything else is custom - For more details, see [this great blog post](https://medium.com/uptime-99/kubernetes-hpa-autoscaling-with-custom-and-external-metrics-da7f41ff7846) or [this talk](https://www.youtube.com/watch?v=gSiGFH4ZnS8) .debug[[k8s/horizontal-pod-autoscaler.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/horizontal-pod-autoscaler.md)] --- ## Cleanup - Since `busyhttp` uses CPU cycles, let's stop it before moving on .lab[ - Delete the `busyhttp` Deployment: ```bash kubectl delete deployment busyhttp ``` <!-- ```key ^D``` ```key ^C``` ```key ^D``` ```key ^C``` ```key ^D``` ```key ^C``` ```key ^D``` ```key ^C``` --> ] ??? :EN:- Auto-scaling resources :FR:- *Auto-scaling* (dimensionnement automatique) des ressources .debug[[k8s/horizontal-pod-autoscaler.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/horizontal-pod-autoscaler.md)] --- class: pic .interstitial[] --- name: toc-api-server-internals class: title API server internals .nav[ [Previous part](#toc-the-horizontal-pod-autoscaler) | [Back to table of contents](#toc-part-3) | [Next part](#toc-the-aggregation-layer) ] .debug[(automatically generated title slide)] --- # API server internals - Understanding the internals of the API server is useful.red[¹]: - when extending the Kubernetes API server (CRDs, webhooks...)
- when running Kubernetes at scale - Let's dive into a bit of code! .footnote[.red[¹]And by *useful*, we mean *strongly recommended or else...*] .debug[[k8s/apiserver-deepdive.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/apiserver-deepdive.md)] --- ## The main handler - The API server parses its configuration and builds a `GenericAPIServer` - ... which contains an `APIServerHandler` ([src](https://github.com/kubernetes/apiserver/blob/release-1.19/pkg/server/handler.go#L37)) - ... which contains a couple of `http.Handler` fields - Requests go through: - `FullHandlerChain` (a series of HTTP filters, see next slide) - `Director` (switches the request to `GoRestfulContainer` or `NonGoRestfulMux`) - `GoRestfulContainer` is for "normal" APIs and integrates nicely with OpenAPI - `NonGoRestfulMux` is for everything else (e.g. proxy, delegation) .debug[[k8s/apiserver-deepdive.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/apiserver-deepdive.md)] --- ## The chain of handlers - API requests go through a complex chain of filters ([src](https://github.com/kubernetes/apiserver/blob/release-1.19/pkg/server/config.go#L671)) (note when reading that code: requests start at the bottom and go up) - This is where authentication and authorization happen, among a few other things (admission happens later, in the REST handlers) - Let's review an arbitrary selection of some of these handlers!
*In the following slides, the handlers are in chronological order.* *Note: handlers are nested, so they can act at both the beginning and the end of a request.* .debug[[k8s/apiserver-deepdive.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/apiserver-deepdive.md)] --- ## `WithPanicRecovery` - Reminder about Go: there is no exception handling in Go; instead: - functions typically return multiple values, e.g. `(SomeType, error)` - when things go really bad, the code can call `panic()` - `panic()` can be caught with `recover()` <br/> (but this is almost never used like an exception handler!) - The API server code is not supposed to `panic()` - But just in case, we have that handler to prevent (some) crashes .debug[[k8s/apiserver-deepdive.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/apiserver-deepdive.md)] --- ## `WithRequestInfo` ([src](https://github.com/kubernetes/apiserver/blob/release-1.19/pkg/endpoints/request/requestinfo.go#L163)) - Parses out essential information: API group, version, namespace, resource, subresource, verb ... - Maps HTTP verbs (GET, PUT, ...) to Kubernetes verbs (list, get, watch, ...)
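As a rough illustration, the HTTP-to-Kubernetes verb mapping (detailed in the next slide) can be sketched as a small bash function. This is not the API server's code (the real logic is written in Go, in `requestinfo.go`); `k8s_verb` and its arguments are hypothetical, and the sketch ignores subresources, the legacy `/watch/` path prefix, and non-resource URLs. Based on our reading of `requestinfo.go`, `?watch=true` only turns a GET into a `watch` when no resource name is given.

```bash
#!/usr/bin/env bash
# Hypothetical helper: map an HTTP request to a Kubernetes verb (simplified sketch).
# Usage: k8s_verb METHOD HAS_NAME WATCH
#   METHOD    HTTP method: GET, HEAD, POST, PUT, PATCH, or DELETE
#   HAS_NAME  "yes" if the URL ends with a resource name, "no" otherwise
#   WATCH     "true" if the query string contains watch=true
k8s_verb() {
  local method=$1 has_name=$2 watch=$3
  case "$method" in
    POST)  echo create ;;
    PUT)   echo update ;;
    PATCH) echo patch ;;
    DELETE)
      # Without a name, DELETE targets the whole collection.
      if [ "$has_name" = yes ]; then echo delete; else echo deletecollection; fi
      ;;
    GET|HEAD)
      # With a name: get. Without a name: watch if requested, list otherwise.
      if [ "$has_name" = yes ]; then
        echo get
      elif [ "$watch" = true ]; then
        echo watch
      else
        echo list
      fi
      ;;
    *)
      echo "unsupported HTTP method: $method" >&2
      return 1
      ;;
  esac
}

k8s_verb GET no true      # prints: watch
k8s_verb DELETE no false  # prints: deletecollection
```

The resulting verb is what authorization later checks: for instance, an RBAC rule granting `list` on pods does not grant `watch` on pods.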
.debug[[k8s/apiserver-deepdive.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/apiserver-deepdive.md)] --- class: extra-details ## HTTP verb mapping - POST → create - PUT → update - PATCH → patch - DELETE <br/> → delete (if a resource name is specified) <br/> → deletecollection (otherwise) - GET, HEAD <br/> → get (if a resource name is specified) <br/> → list (otherwise) <br/> → watch (if the `?watch=true` option is specified) .debug[[k8s/apiserver-deepdive.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/apiserver-deepdive.md)] --- ## `WithWaitGroup` - When the server shuts down, tells clients (with in-flight requests) to retry - only for "short" requests - for long-running requests, the client needs to do more - Long-running requests include the `watch` verb and the `proxy` subresource (See also `WithTimeoutForNonLongRunningRequests`) .debug[[k8s/apiserver-deepdive.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/apiserver-deepdive.md)] --- ## AuthN and AuthZ - `WithAuthentication`: the request goes through a *chain* of authenticators ([src](https://github.com/kubernetes/apiserver/blob/release-1.19/pkg/endpoints/filters/authentication.go#L38)) - WithAudit - WithImpersonation: used for e.g. `kubectl ... --as another.user` - WithPriorityAndFairness or WithMaxInFlightLimit (`system:masters` can bypass these) - WithAuthorization
- We get to the "director" mentioned above - API groups get installed into the "gorestfulhandler" ([src](https://github.com/kubernetes/apiserver/blob/release-1.19/pkg/server/genericapiserver.go#L423)) - REST-ish resources are managed by various handlers (in [this directory](https://github.com/kubernetes/apiserver/blob/release-1.19/pkg/endpoints/handlers/)) - These files show us the code path for each type of request .debug[[k8s/apiserver-deepdive.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/apiserver-deepdive.md)] --- class: extra-details ## Request code path - [create.go](https://github.com/kubernetes/apiserver/blob/release-1.19/pkg/endpoints/handlers/create.go): decode to HubGroupVersion; admission; mutating admission; store - [delete.go](https://github.com/kubernetes/apiserver/blob/release-1.19/pkg/endpoints/handlers/delete.go): validating admission only; deletion - [get.go](https://github.com/kubernetes/apiserver/blob/release-1.19/pkg/endpoints/handlers/get.go) (get, list): fetch directly from the REST storage abstraction - [patch.go](https://github.com/kubernetes/apiserver/blob/release-1.19/pkg/endpoints/handlers/patch.go): admission; mutating admission; patch - [update.go](https://github.com/kubernetes/apiserver/blob/release-1.19/pkg/endpoints/handlers/update.go): decode to HubGroupVersion; admission; mutating admission; store - [watch.go](https://github.com/kubernetes/apiserver/blob/release-1.19/pkg/endpoints/handlers/watch.go): similar to get.go, but with watch logic (HubGroupVersion = in-memory, "canonical" version.) ???
:EN:- Kubernetes API server internals :FR:- Fonctionnement interne du serveur API .debug[[k8s/apiserver-deepdive.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/apiserver-deepdive.md)] --- class: pic .interstitial[] --- name: toc-the-aggregation-layer class: title The Aggregation Layer .nav[ [Previous part](#toc-api-server-internals) | [Back to table of contents](#toc-part-3) | [Next part](#toc-scaling-with-custom-metrics) ] .debug[(automatically generated title slide)] --- # The Aggregation Layer - The aggregation layer is a way to extend the Kubernetes API - It is similar to CRDs - it lets us define new resource types - these resources can then be used with `kubectl` and other clients - The implementation is very different - CRDs are handled within the API server - the aggregation layer offloads requests to another process - They are designed for very different use cases .debug[[k8s/aggregation-layer.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/aggregation-layer.md)] --- ## CRDs vs aggregation layer - The Kubernetes API is a REST-ish API with a hierarchical structure - It can be extended with Custom Resource Definitions (CRDs) - Custom resources are managed by the Kubernetes API server - we don't need to write code - the API server does all the heavy lifting - these resources are persisted in Kubernetes' "standard" database <br/> (for most installations, that's `etcd`) - We can also define resources that are *not* managed by the API server (the API server merely proxies the requests to another server) .debug[[k8s/aggregation-layer.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/aggregation-layer.md)] --- ## Which one is best?
- For things that "map" well to objects stored in a traditional database: *probably CRDs* - For things that "exist" only in Kubernetes and don't represent external resources: *probably CRDs* - For things that are read-only, at least from Kubernetes' perspective: *probably aggregation layer* - For things that can't be stored in etcd because of size or access patterns: *probably aggregation layer* .debug[[k8s/aggregation-layer.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/aggregation-layer.md)] --- ## How are resources organized? - Let's have a look at the Kubernetes API hierarchical structure - We'll ask `kubectl` to show us the exact requests that it's making .lab[ - Check the URI for a cluster-scoped, "core" resource, e.g. a Node: ```bash kubectl -v6 get node node1 ``` - Check the URI for a cluster-scoped, "non-core" resource, e.g. a ClusterRole: ```bash kubectl -v6 get clusterrole view ``` ] .debug[[k8s/aggregation-layer.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/aggregation-layer.md)] --- ## Core vs non-core - This is the structure of the URIs that we just checked: ``` /api/v1/nodes/node1 ↑ ↑ ↑ `version` `kind` `name` /apis/rbac.authorization.k8s.io/v1/clusterroles/view ↑ ↑ ↑ ↑ `group` `version` `kind` `name` ``` - There is no group for "core" resources - Or, we could say that the group, `core`, is implied .debug[[k8s/aggregation-layer.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/aggregation-layer.md)] --- ## Group-Version-Kind - In the API server, the Group-Version-Kind triple maps to a Go type (look for all the "GVK" occurrences in the source code!) - In the API server URI router, the GVK is parsed "relatively early" (so that the server can know which resource we're talking about) - "Well, actually ..." Things are a bit more complicated, see next slides!
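To make the URI layouts more concrete (including the namespaced variants in the next slides), here is a hedged bash sketch that splits an API path into its components. `parse_api_path` is a hypothetical helper, not part of `kubectl` or the API server. It prints `core` for the implied group (internally, the API server represents the core group as an empty string), drops the query string, and ignores special cases such as namespace subresources (e.g. `/api/v1/namespaces/NAME/status`). Note that the URI segment is the lowercase, plural *resource* name (e.g. `daemonsets`), which the server then maps to a Kind (e.g. `DaemonSet`).

```bash
#!/usr/bin/env bash
# Hypothetical helper: split a Kubernetes API path into its components.
# Handles /api/v1/... (core group) and /apis/GROUP/VERSION/... paths, with an
# optional namespaces/NAMESPACE/ prefix, an optional name, and an optional subresource.
parse_api_path() {
  local path=${1%%\?*}   # drop the query string, if any
  local IFS=/            # split on slashes (only inside this function)
  local -a parts
  read -r -a parts <<< "${path#/}"
  local group version i namespace=""
  case "${parts[0]:-}" in
    api)  group=core;          version=${parts[1]:-}; i=2 ;;
    apis) group=${parts[1]:-}; version=${parts[2]:-}; i=3 ;;
    *)    echo "not a resource API path: $1" >&2; return 1 ;;
  esac
  # "namespaces/NS/..." is a namespace prefix only if a resource follows it;
  # otherwise (e.g. /api/v1/namespaces/default) we are addressing a Namespace.
  if [ "${parts[i]:-}" = namespaces ] && [ -n "${parts[i+2]:-}" ]; then
    namespace=${parts[i+1]}
    i=$((i + 2))
  fi
  echo "group=$group version=$version namespace=$namespace" \
       "resource=${parts[i]:-} name=${parts[i+1]:-} subresource=${parts[i+2]:-}"
}

# prints: group=apps version=v1 namespace=kube-system resource=daemonsets name=kube-proxy subresource=
parse_api_path /apis/apps/v1/namespaces/kube-system/daemonsets/kube-proxy
```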
.debug[[k8s/aggregation-layer.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/aggregation-layer.md)] --- class: extra-details ## Namespaced resources - What about namespaced resources? .lab[ - Check the URI for a namespaced, "core" resource, e.g. a Service: ```bash kubectl -v6 get service kubernetes --namespace default ``` ] - Here is what namespaced resource URIs look like: ``` /api/v1/namespaces/default/services/kubernetes ↑ ↑ ↑ ↑ `version` `namespace` `kind` `name` /apis/apps/v1/namespaces/kube-system/daemonsets/kube-proxy ↑ ↑ ↑ ↑ ↑ `group` `version` `namespace` `kind` `name` ``` .debug[[k8s/aggregation-layer.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/aggregation-layer.md)] --- class: extra-details ## Subresources - Many resources have *subresources*, for instance: - `/status` (decouples status updates from other updates) - `/scale` (exposes a consistent interface for autoscalers) - `/proxy` (allows access to HTTP resources) - `/portforward` (used by `kubectl port-forward`) - `/log` (access pod logs) - These are added at the end of the URI .debug[[k8s/aggregation-layer.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/aggregation-layer.md)] --- class: extra-details ## Accessing a subresource .lab[ - List `kube-proxy` pods: ```bash kubectl get pods --namespace=kube-system --selector=k8s-app=kube-proxy PODNAME=$( kubectl get pods --namespace=kube-system --selector=k8s-app=kube-proxy \ -o json | jq -r '.items[0].metadata.name') ``` - Execute a command in a pod, showing the API requests: ```bash kubectl -v6 exec --namespace=kube-system $PODNAME -- echo hello world ``` ] -- The full request looks like: ``` POST https://.../api/v1/namespaces/kube-system/pods/kube-proxy-c7rlw/exec?
command=echo&command=hello&command=world&container=kube-proxy&stderr=true&stdout=true ``` .debug[[k8s/aggregation-layer.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/aggregation-layer.md)] --- ## Listing what's supported on the server - There are at least three useful commands to introspect the API server .lab[ - List resource types, with their group, kind, short names, and scope: ```bash kubectl api-resources ``` - List API groups + versions: ```bash kubectl api-versions ``` - List APIServices: ```bash kubectl get apiservices ``` ] -- 🤔 What's the difference between the last two? .debug[[k8s/aggregation-layer.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/aggregation-layer.md)] --- ## API registration - `kubectl api-versions` shows all API groups, including `apiregistration.k8s.io` - `kubectl get apiservices` shows the "routing table" for API requests - The latter doesn't show `apiregistration.k8s.io` (APIServices belong to `apiregistration.k8s.io`) - Most API groups are `Local` (handled internally by the API server) - If we're running the `metrics-server`, it should handle `metrics.k8s.io` - This is an API group handled *outside* of the API server - This is the *aggregation layer!* .debug[[k8s/aggregation-layer.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/aggregation-layer.md)] --- ## Finding resources The following assumes that `metrics-server` is deployed on your cluster. .lab[ - Check that the `metrics.k8s.io` API group is registered with `metrics-server`: ```bash kubectl get apiservices | grep metrics.k8s.io ``` - Check the resource kinds registered in the `metrics.k8s.io` group: ```bash kubectl api-resources --api-group=metrics.k8s.io ``` ] (If the output of either command is empty, install `metrics-server` first.)
.debug[[k8s/aggregation-layer.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/aggregation-layer.md)] --- ## `nodes` vs `nodes` - We can have multiple resources with the same name .lab[ - Look for resources named `nodes`: ```bash kubectl api-resources | grep -w nodes ``` - Compare the output of both commands: ```bash kubectl get nodes kubectl get nodes.metrics.k8s.io ``` ] -- 🤔 What is this second kind of nodes? How can we see what's really in them? .debug[[k8s/aggregation-layer.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/aggregation-layer.md)] --- ## Node vs NodeMetrics - `nodes.metrics.k8s.io` (aka NodeMetrics) don't have fancy *printer columns* - But we can look at the raw data (with `-o json` or `-o yaml`) .lab[ - Look at NodeMetrics objects with one of these commands: ```bash kubectl get -o yaml nodes.metrics.k8s.io kubectl get -o yaml NodeMetrics ``` ] -- 💡 Alright, these are the live metrics (CPU, RAM) for our nodes. .debug[[k8s/aggregation-layer.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/aggregation-layer.md)] --- ## An easier way to consume metrics - We might have seen these metrics before ... With an easier command! -- .lab[ - Display node metrics: ```bash kubectl top nodes ``` - Check which API requests happen behind the scenes: ```bash kubectl top nodes -v6 ``` ] .debug[[k8s/aggregation-layer.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/aggregation-layer.md)] --- ## Aggregation layer in practice - We can write an API server to handle a subset of the Kubernetes API - Then we can register that server by creating an APIService resource .lab[ - Check the definition used for the `metrics-server`: ```bash kubectl describe apiservices v1beta1.metrics.k8s.io ``` ] - Group priority is used when multiple API groups provide similar kinds (e.g.
`nodes` and `nodes.metrics.k8s.io` as seen earlier) .debug[[k8s/aggregation-layer.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/aggregation-layer.md)] --- ## Authentication flow - We have two Kubernetes API servers: - "aggregator" (the main one; clients connect to it) - "aggregated" (the one providing the extra API; aggregator connects to it) - Aggregator deals with client authentication - Aggregator authenticates with aggregated using mutual TLS - Aggregator passes (/forwards/proxies/...) requests to aggregated - Aggregated performs authorization by calling back aggregator ("can subject X perform action Y on resource Z?") [This doc page](https://kubernetes.io/docs/tasks/extend-kubernetes/configure-aggregation-layer/#authentication-flow) has very nice swim lanes showing that flow. .debug[[k8s/aggregation-layer.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/aggregation-layer.md)] --- ## Discussion - Aggregation layer is great for metrics (fast-changing, ephemeral data, that would be outrageously bad for etcd) - It *could* be a good fit to expose other REST APIs as a pass-thru (but it's more common to see CRDs instead) ??? :EN:- The aggregation layer :FR:- Étendre l'API avec le *aggregation layer* .debug[[k8s/aggregation-layer.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/aggregation-layer.md)] --- class: pic .interstitial[] --- name: toc-scaling-with-custom-metrics class: title Scaling with custom metrics .nav[ [Previous part](#toc-the-aggregation-layer) | [Back to table of contents](#toc-part-3) | [Next part](#toc-stateful-sets) ] .debug[(automatically generated title slide)] --- # Scaling with custom metrics - The HorizontalPodAutoscaler v1 can only scale on Pod CPU usage - Sometimes, we need to scale using other metrics: - memory - requests per second - latency - active sessions - items in a work queue - ... - The HorizontalPodAutoscaler v2 can do it! 
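Whichever metric we use, the HPA relies on the same basic calculation. The Kubernetes documentation describes it as `desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue)`, which is equivalent to the CPU formula shown earlier; with multiple metrics, one proposal is computed per metric and the largest one wins. Here is a simplified bash sketch of that arithmetic. The function names are hypothetical, values must be integers (e.g. percentages or milliseconds), and it leaves out what the real controller also does (the default 10% tolerance, min/max clamping, missing metrics, stabilization windows).

```bash
#!/usr/bin/env bash
# Hypothetical helper: ceil(replicas * current / target), using integer arithmetic.
# Usage: desired_replicas CURRENT_REPLICAS CURRENT_VALUE TARGET_VALUE
desired_replicas() {
  local replicas=$1 current=$2 target=$3
  # Adding (target - 1) before the integer division rounds up.
  echo $(( (replicas * current + target - 1) / target ))
}

# Hypothetical helper: one proposal per metric, keep the largest.
# Usage: desired_replicas_multi CURRENT_REPLICAS CURRENT1 TARGET1 [CURRENT2 TARGET2 ...]
desired_replicas_multi() {
  local replicas=$1 best=0 proposal
  shift
  while [ "$#" -ge 2 ]; do
    proposal=$(desired_replicas "$replicas" "$1" "$2")
    if [ "$proposal" -gt "$best" ]; then best=$proposal; fi
    shift 2
  done
  echo "$best"
}

# 4 pods; CPU at 90% (target 80%) and latency at 450ms (target 300ms)
desired_replicas_multi 4 90 80 450 300   # prints: 6
```

Here, CPU alone would ask for 5 pods and latency for 6. Keeping the maximum is the conservative choice: no metric is left above its target just because another metric looks fine.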
.debug[[k8s/hpa-v2.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/hpa-v2.md)] --- ## Requirements ⚠️ Autoscaling on custom metrics is fairly complex! - We need some metrics system (Prometheus is a popular option, but others are possible too) - We need our metrics (latency, traffic...) to be fed into the system (with Prometheus, this might require a custom exporter) - We need to expose these metrics to Kubernetes (Kubernetes doesn't "speak" the Prometheus API) - Then we can set up autoscaling! .debug[[k8s/hpa-v2.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/hpa-v2.md)] --- ## The plan - We will deploy the DockerCoins demo app (one of its components has a bottleneck; its latency will increase under load) - We will use Prometheus to collect and store metrics - We will deploy a tiny HTTP latency monitor (a Prometheus *exporter*) - We will deploy the "Prometheus adapter" (mapping Prometheus metrics to Kubernetes-compatible metrics) - We will create a HorizontalPodAutoscaler 🎉 .debug[[k8s/hpa-v2.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/hpa-v2.md)] --- ## Deploying DockerCoins - That's the easy part! .lab[ - Create a new namespace and switch to it: ```bash kubectl create namespace customscaling kns customscaling ``` - Deploy DockerCoins, and scale up the `worker` Deployment: ```bash kubectl apply -f ~/container.training/k8s/dockercoins.yaml kubectl scale deployment worker --replicas=10 ``` ] .debug[[k8s/hpa-v2.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/hpa-v2.md)] --- ## Current state of affairs - The `rng` service is a bottleneck (it cannot handle more than 10 requests/second) - With enough traffic, its latency increases (by about 100ms per `worker` Pod after the 3rd worker) .lab[ - Check the `webui` port and open it in your browser: ```bash kubectl get service webui ``` - Check the `rng` ClusterIP and test it with e.g.
`httping`: ```bash kubectl get service rng ``` ] .debug[[k8s/hpa-v2.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/hpa-v2.md)] --- ## Measuring latency - We will use a tiny custom Prometheus exporter, [httplat](https://github.com/jpetazzo/httplat) - `httplat` exposes Prometheus metrics on port 9080 (by default) - It monitors exactly one URL, which must be passed as a command-line argument .lab[ - Deploy `httplat`: ```bash kubectl create deployment httplat --image=jpetazzo/httplat -- httplat http://rng/ ``` - Expose it: ```bash kubectl expose deployment httplat --port=9080 ``` ] .debug[[k8s/hpa-v2.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/hpa-v2.md)] --- class: extra-details ## Measuring latency in the real world - We are using this tiny custom exporter for simplicity - A more common method to collect latency is to use a service mesh - A service mesh can usually collect latency for *all* services automatically .debug[[k8s/hpa-v2.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/hpa-v2.md)] --- ## Install Prometheus - We will use the Prometheus community Helm chart (because we can configure it dynamically with annotations) .lab[ - If it's not installed yet on the cluster, install Prometheus: ```bash helm upgrade --install prometheus prometheus \ --repo https://prometheus-community.github.io/helm-charts \ --namespace prometheus --create-namespace \ --set server.service.type=NodePort \ --set server.service.nodePort=30090 \ --set server.persistentVolume.enabled=false \ --set alertmanager.enabled=false ``` ] .debug[[k8s/hpa-v2.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/hpa-v2.md)] --- ## Configure Prometheus - We can use annotations to tell Prometheus to collect the metrics .lab[ - Tell Prometheus to "scrape" our latency exporter: ```bash kubectl annotate service httplat \ prometheus.io/scrape=true \ prometheus.io/port=9080 \
prometheus.io/path=/metrics ``` ] If you deployed Prometheus differently, you might have to configure it manually. You'll need to instruct it to scrape http://httplat.customscaling.svc:9080/metrics. .debug[[k8s/hpa-v2.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/hpa-v2.md)] --- ## Make sure that metrics get collected - Before moving on, confirm that Prometheus has our metrics .lab[ - Connect to Prometheus (if you installed it as instructed above, it is exposed as a NodePort on port 30090) - Check that `httplat` metrics are available - You can try to graph the following PromQL expression: ``` rate(httplat_latency_seconds_sum[2m])/rate(httplat_latency_seconds_count[2m]) ``` ] .debug[[k8s/hpa-v2.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/hpa-v2.md)] --- ## Troubleshooting - Make sure that the exporter works: - get the ClusterIP of the exporter with `kubectl get svc httplat` - `curl http://<ClusterIP>:9080/metrics` - check that the result includes the `httplat` histogram - Make sure that Prometheus is scraping the exporter: - go to `Status` / `Targets` in Prometheus - make sure that `httplat` shows up in there .debug[[k8s/hpa-v2.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/hpa-v2.md)] --- ## Creating the autoscaling policy - We need custom YAML (we can't use the `kubectl autoscale` command) - It must specify `scaleTargetRef`, the resource to scale - any resource with a `scale` sub-resource will do - this includes Deployment, ReplicaSet, StatefulSet...
- It must specify one or more `metrics` to look at - if multiple metrics are given, the autoscaler will "do the math" for each one - it will then keep the largest result .debug[[k8s/hpa-v2.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/hpa-v2.md)] --- ## Details about the `metrics` list - Each item will look like this: ```yaml - type: <TYPE-OF-METRIC> <TYPE-OF-METRIC>: metric: name: <NAME-OF-METRIC> <...optional selector (mandatory for External metrics)...> target: type: <TYPE-OF-TARGET> <TYPE-OF-TARGET>: <VALUE> <describedObject field, for Object metrics> ``` `<TYPE-OF-METRIC>` can be `Resource`, `Pods`, `Object`, or `External`. `<TYPE-OF-TARGET>` can be `Utilization`, `Value`, or `AverageValue`. Let's explain the 4 different `<TYPE-OF-METRIC>` values! .debug[[k8s/hpa-v2.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/hpa-v2.md)] --- ## `Resource` Use "classic" metrics served by `metrics-server` (`cpu` and `memory`). ```yaml - type: Resource resource: name: cpu target: type: Utilization averageUtilization: 50 ``` Compute average *utilization* (usage/requests) across pods. It's also possible to specify `AverageValue` instead of `Utilization`. (To scale according to "raw" CPU or memory usage.) .debug[[k8s/hpa-v2.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/hpa-v2.md)] --- ## `Pods` Use custom metrics. These are still "per-Pod" metrics. ```yaml - type: Pods pods: metric: name: packets-per-second target: type: AverageValue averageValue: 1k ``` `type:` *must* be `AverageValue`. (It cannot be `Utilization`, since custom metrics can't be declared in Pod `requests`, so there is nothing to compute a percentage against.) .debug[[k8s/hpa-v2.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/hpa-v2.md)] --- ## `Object` Use custom metrics. These metrics are "linked" to any arbitrary resource. (E.g. a Deployment, Service, Ingress, ...)
```yaml - type: Object object: metric: name: requests-per-second describedObject: apiVersion: networking.k8s.io/v1 kind: Ingress name: main-route target: type: Value value: 100 ``` `type:` can be `Value` or `AverageValue` (see next slide for details). .debug[[k8s/hpa-v2.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/hpa-v2.md)] --- ## `Value` vs `AverageValue` - `Value` - use the value as-is - useful to pace a client or producer - "target a specific total load on a specific endpoint or queue" - `AverageValue` - divide the value by the number of pods - useful to scale a server or consumer - "scale our systems to meet a given SLA/SLO" .debug[[k8s/hpa-v2.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/hpa-v2.md)] --- ## `External` Use arbitrary metrics. The series to use is specified with a label selector. ```yaml - type: External external: metric: name: queue_messages_ready selector: matchLabels: queue: worker_tasks target: type: AverageValue averageValue: 30 ``` The `selector` will be passed along when querying the metrics API. Its meaning is implementation-dependent. It may or may not correspond to Kubernetes labels. .debug[[k8s/hpa-v2.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/hpa-v2.md)] --- ## One more thing ... - We can give a `behavior` set of options - Indicates: - how much to scale up/down in a single step - a *stabilization window* to avoid flapping (the replica count going up and down too often) - By default, there is no stabilization window for `scaleUp` (0 seconds) and a 300-second window for `scaleDown` (we might want to change that!)
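As a sketch of these options, here is a `behavior` section (placed under the HorizontalPodAutoscaler `spec`) combining stabilization windows with scaling *policies*, which cap how much the replica count can change per period. The numbers are illustrative assumptions, not recommendations:

```yaml
# Illustrative values only; goes under the HPA "spec:".
behavior:
  scaleUp:
    stabilizationWindowSeconds: 60
    policies:
    - type: Pods          # add at most 4 Pods...
      value: 4
      periodSeconds: 60   # ...per 60-second period
    - type: Percent       # or at most double the replica count per period
      value: 100
      periodSeconds: 60
    selectPolicy: Max     # use whichever policy allows the bigger change
  scaleDown:
    stabilizationWindowSeconds: 180
    policies:
    - type: Percent       # remove at most 10% of the Pods...
      value: 10
      periodSeconds: 60   # ...per 60-second period
```

Slow, capped scale-down combined with faster scale-up is a common way to reduce oscillations without making the autoscaler sluggish when traffic grows.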
.debug[[k8s/hpa-v2.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/hpa-v2.md)] --- Putting it together [k8s/hpa-v2-pa-httplat.yaml](https://github.com/jpetazzo/container.training/tree/master/k8s/hpa-v2-pa-httplat.yaml): .small[ ```yaml kind: HorizontalPodAutoscaler apiVersion: autoscaling/v2beta2 metadata: name: rng spec: scaleTargetRef: apiVersion: apps/v1 kind: Deployment name: rng minReplicas: 1 maxReplicas: 20 behavior: scaleUp: stabilizationWindowSeconds: 60 scaleDown: stabilizationWindowSeconds: 180 metrics: - type: Object object: describedObject: apiVersion: v1 kind: Service name: httplat metric: name: httplat_latency_seconds target: type: Value value: 0.1 ``` ] .debug[[k8s/hpa-v2.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/hpa-v2.md)] --- ## Creating the autoscaling policy - We will register the policy - Of course, it won't quite work yet (we're missing the *Prometheus adapter*) .lab[ - Create the HorizontalPodAutoscaler: ```bash kubectl apply -f ~/container.training/k8s/hpa-v2-pa-httplat.yaml ``` - Check the logs of the `controller-manager`: ```bash stern --namespace=kube-system --tail=10 controller-manager ``` ] After a little while we should see messages like this: ``` no custom metrics API (custom.metrics.k8s.io) registered ``` .debug[[k8s/hpa-v2.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/hpa-v2.md)] --- ## `custom.metrics.k8s.io` - The HorizontalPodAutoscaler will get the metrics *from the Kubernetes API itself* - In our specific case, it will access a resource like this one: .small[ ``` /apis/custom.metrics.k8s.io/v1beta1/namespaces/customscaling/services/httplat/httplat_latency_seconds ``` ] - By default, the Kubernetes API server doesn't implement `custom.metrics.k8s.io` (we can have a look at `kubectl get apiservices`) - We need to: - start an API service implementing this API group - register it with our API server
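For reference, the registration step is done with an `APIService` object. Here is a minimal sketch; the Service name and namespace are assumptions matching the `prometheus-adapter` Helm release used in this section (the chart normally creates this object for us):

```yaml
# Sketch: asks the API server to forward requests for
# /apis/custom.metrics.k8s.io/v1beta1/... to the adapter's Service.
apiVersion: apiregistration.k8s.io/v1
kind: APIService
metadata:
  name: v1beta1.custom.metrics.k8s.io   # must be <version>.<group>
spec:
  group: custom.metrics.k8s.io
  version: v1beta1
  service:
    name: prometheus-adapter            # assumed Service name
    namespace: prometheus-adapter       # assumed namespace
  groupPriorityMinimum: 100
  versionPriority: 100
  insecureSkipTLSVerify: true           # demo only; prefer caBundle
```

Once such an object exists and the Service answers, `kubectl get apiservices` should show `v1beta1.custom.metrics.k8s.io` as available.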
.debug[[k8s/hpa-v2.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/hpa-v2.md)] --- ## The Prometheus adapter - The Prometheus adapter is an open source project: https://github.com/DirectXMan12/k8s-prometheus-adapter - It's a Kubernetes API service implementing API group `custom.metrics.k8s.io` - It maps the requests it receives to Prometheus metrics - Exactly what we need! .debug[[k8s/hpa-v2.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/hpa-v2.md)] --- ## Deploying the Prometheus adapter - There is ~~an app~~ a Helm chart for that .lab[ - Install the Prometheus adapter: ```bash helm upgrade --install prometheus-adapter prometheus-adapter \ --repo https://prometheus-community.github.io/helm-charts \ --namespace=prometheus-adapter --create-namespace \ --set prometheus.url=http://prometheus-server.prometheus.svc \ --set prometheus.port=80 ``` ] - It comes with some default mappings - But we will need to add `httplat` to these mappings .debug[[k8s/hpa-v2.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/hpa-v2.md)] --- ## Configuring the Prometheus adapter - The Prometheus adapter can be configured/customized through a ConfigMap - We are going to edit that ConfigMap, then restart the adapter - We need to add a rule that will say: - all the metrics series named `httplat_latency_seconds_sum` ... - ... belong to *Services* ... - ... the name of the Service and its Namespace are indicated by the `service` and `namespace` Prometheus labels respectively ... - ...
and the exact value to use should be the following PromQL expression .debug[[k8s/hpa-v2.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/hpa-v2.md)] --- ## The mapping rule Here is the rule that we need to add to the configuration: ```yaml - seriesQuery: 'httplat_latency_seconds_sum{namespace!="",service!=""}' resources: overrides: namespace: resource: namespace service: resource: service name: matches: "httplat_latency_seconds_sum" as: "httplat_latency_seconds" metricsQuery: | rate(httplat_latency_seconds_sum{<<.LabelMatchers>>}[2m])/rate(httplat_latency_seconds_count{<<.LabelMatchers>>}[2m]) ``` (I built it following the [walkthrough](https://github.com/DirectXMan12/k8s-prometheus-adapter/blob/master/docs/config-walkthrough.md ) in the Prometheus adapter documentation.) .debug[[k8s/hpa-v2.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/hpa-v2.md)] --- ## Editing the adapter's configuration .lab[ - Edit the adapter's ConfigMap: ```bash kubectl edit configmap prometheus-adapter --namespace=prometheus-adapter ``` - Add the new rule in the `rules` section, at the end of the configuration file - Save, quit - Restart the Prometheus adapter: ```bash kubectl rollout restart deployment --namespace=prometheus-adapter prometheus-adapter ``` ] .debug[[k8s/hpa-v2.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/hpa-v2.md)] --- ## Witness the marvel of custom autoscaling (Sort of) - After a short while, the `rng` Deployment will scale up - It should scale up until the latency drops below 100ms (and continue to scale up a little bit more after that) - Then, since the latency will be well below 100ms, it will scale down - ... and back up again, etc. (See pictures on next slides!) 
.debug[[k8s/hpa-v2.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/hpa-v2.md)] --- class: pic  .debug[[k8s/hpa-v2.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/hpa-v2.md)] --- class: pic  .debug[[k8s/hpa-v2.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/hpa-v2.md)] --- ## What's going on? - The autoscaler's information is slightly out of date (not by much; probably between 1 and 2 minutes) - It's enough to cause the oscillations to happen - One possible fix is to tell the autoscaler to wait a bit after each action - It will reduce oscillations, but will also make the autoscaler slower to react (e.g. to a sudden peak of traffic) .debug[[k8s/hpa-v2.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/hpa-v2.md)] --- ## What's going on? Take 2 - As soon as the measured latency is *significantly* below our target (100ms) ... the autoscaler tries to scale down - If the latency is measured at 20ms ... the autoscaler will try to *divide the number of pods by five!* - One possible solution: apply a formula to the measured latency, so that values between e.g. 10 and 100ms get very close to 100ms. - Another solution: instead of targeting a specific latency, target a 95th percentile latency or something similar, using a more advanced PromQL expression (and leveraging the fact that we have histograms instead of raw values).
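Here is a sketch of the second solution, as an extra Prometheus adapter rule. It assumes that `httplat` exposes the standard Prometheus histogram buckets (`httplat_latency_seconds_bucket`) with the same `namespace` and `service` labels as the mapping rule shown earlier; the exposed metric name `httplat_latency_seconds_p95` is hypothetical:

```yaml
# Hypothetical rule: p95 latency computed from histogram buckets.
- seriesQuery: 'httplat_latency_seconds_bucket{namespace!="",service!=""}'
  resources:
    overrides:
      namespace:
        resource: namespace
      service:
        resource: service
  name:
    matches: "httplat_latency_seconds_bucket"
    as: "httplat_latency_seconds_p95"
  metricsQuery: |
    histogram_quantile(0.95, sum(rate(httplat_latency_seconds_bucket{<<.LabelMatchers>>}[2m])) by (le, <<.GroupBy>>))
```

The HorizontalPodAutoscaler would then reference `httplat_latency_seconds_p95` instead of `httplat_latency_seconds`. A percentile reacts to the slowest requests, so it drops less abruptly than the mean when load decreases.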
.debug[[k8s/hpa-v2.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/hpa-v2.md)] --- ## Troubleshooting Check that the adapter registered itself correctly: ```bash kubectl get apiservices | grep metrics ``` Check that the adapter correctly serves metrics: ```bash kubectl get --raw /apis/custom.metrics.k8s.io/v1beta1 ``` Check that our `httplat` metrics are available: ```bash kubectl get --raw /apis/custom.metrics.k8s.io/v1beta1\ /namespaces/customscaling/services/httplat/httplat_latency_seconds ``` Also check the logs of the `prometheus-adapter` and the `kube-controller-manager`. .debug[[k8s/hpa-v2.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/hpa-v2.md)] --- ## Useful links - [Horizontal Pod Autoscaler walkthrough](https://kubernetes.io/docs/tasks/run-application/horizontal-pod-autoscale-walkthrough/) in the Kubernetes documentation - [Autoscaling design proposal](https://github.com/kubernetes/community/tree/master/contributors/design-proposals/autoscaling) - [Kubernetes custom metrics API alternative implementations](https://github.com/kubernetes/metrics/blob/master/IMPLEMENTATIONS.md) - [Prometheus adapter configuration walkthrough](https://github.com/DirectXMan12/k8s-prometheus-adapter/blob/master/docs/config-walkthrough.md) .debug[[k8s/hpa-v2.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/hpa-v2.md)] --- ## Discussion - This system works great if we have a single, centralized metrics system (and the corresponding "adapter" to expose these metrics through the Kubernetes API) - If we have metrics in multiple places, we must aggregate them (good news: Prometheus has exporters for almost everything!) - It is complex and has a steep learning curve - Another approach is [KEDA](https://keda.sh/) ??? 
:EN:- Autoscaling with custom metrics :FR:- Suivi de charge avancé (HPAv2) .debug[[k8s/hpa-v2.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/hpa-v2.md)] --- class: pic .interstitial[] --- name: toc-stateful-sets class: title Stateful sets .nav[ [Previous part](#toc-scaling-with-custom-metrics) | [Back to table of contents](#toc-part-4) | [Next part](#toc-running-a-consul-cluster) ] .debug[(automatically generated title slide)] --- # Stateful sets - Stateful sets are a type of resource in the Kubernetes API (like pods, deployments, services...) - They offer mechanisms to deploy scaled stateful applications - At first glance, they look like Deployments: - a stateful set defines a pod spec and a number of replicas *R* - it will make sure that *R* copies of the pod are running - that number can be changed while the stateful set is running - updating the pod spec will cause a rolling update to happen - But they also have some significant differences .debug[[k8s/statefulsets.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/statefulsets.md)] --- ## Stateful sets unique features - Pods in a stateful set are numbered (from 0 to *R-1*) and ordered - They are started in order (from 0 to *R-1*) - A pod is started only when the previous one is ready - They are stopped in reverse order (from *R-1* to 0) - Rolling updates also proceed in reverse order (from *R-1* to 0), one pod at a time - Each pod knows its identity (i.e. which number it is in the set) - Each pod can discover the IP address of the others easily - The pods can persist data on attached volumes 🤔 Wait a minute ... Can't we already attach volumes to pods and deployments?
.debug[[k8s/statefulsets.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/statefulsets.md)] --- ## Revisiting volumes - [Volumes](https://kubernetes.io/docs/concepts/storage/volumes/) are used for many purposes: - sharing data between containers in a pod - exposing configuration information and secrets to containers - accessing storage systems - Let's see examples of the latter usage .debug[[k8s/statefulsets.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/statefulsets.md)] --- ## Volume types - There are many [types of volumes](https://kubernetes.io/docs/concepts/storage/volumes/#types-of-volumes) available: - public cloud storage (GCEPersistentDisk, AWSElasticBlockStore, AzureDisk...) - private cloud storage (Cinder, VsphereVolume...) - traditional storage systems (NFS, iSCSI, FC...) - distributed storage (Ceph, Glusterfs, Portworx...) - Using a persistent volume requires: - creating the volume out-of-band (outside of the Kubernetes API) - referencing the volume in the pod description, with all its parameters .debug[[k8s/statefulsets.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/statefulsets.md)] --- ## Using a cloud volume Here is a pod definition using an AWS EBS volume (which has to be created first): ```yaml apiVersion: v1 kind: Pod metadata: name: pod-using-my-ebs-volume spec: containers: - image: ... name: container-using-my-ebs-volume volumeMounts: - mountPath: /my-ebs name: my-ebs-volume volumes: - name: my-ebs-volume awsElasticBlockStore: volumeID: vol-049df61146c4d7901 fsType: ext4 ``` .debug[[k8s/statefulsets.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/statefulsets.md)] --- ## Using an NFS volume Here is another example using a volume on an NFS server: ```yaml apiVersion: v1 kind: Pod metadata: name: pod-using-my-nfs-volume spec: containers: - image: ...
name: container-using-my-nfs-volume volumeMounts: - mountPath: /my-nfs name: my-nfs-volume volumes: - name: my-nfs-volume nfs: server: 192.168.0.55 path: "/exports/assets" ``` .debug[[k8s/statefulsets.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/statefulsets.md)] --- ## Shortcomings of volumes - Their lifecycle (creation, deletion...) is managed outside of the Kubernetes API (we can't just use `kubectl apply/create/delete/...` to manage them) - If a Deployment uses a volume, all replicas end up using the same volume - That volume must then support concurrent access - some volumes do (e.g. NFS servers support concurrent read/write access from multiple clients) - some volumes support concurrent reads - some volumes support concurrent access for colocated pods - What we really need is a way for each replica to have its own volume .debug[[k8s/statefulsets.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/statefulsets.md)] --- ## Individual volumes - The Pods of a Stateful set can have individual volumes (i.e. in a Stateful set with 3 replicas, there will be 3 volumes) - These volumes can be either: - allocated from a pool of pre-existing volumes (disks, partitions ...) - created dynamically using a storage system - This introduces a bunch of new Kubernetes resource types: Persistent Volumes, Persistent Volume Claims, Storage Classes (and also `volumeClaimTemplates`, which appear within Stateful Set manifests!)
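To make this concrete, here is a minimal sketch of a Stateful set with a headless Service and a `volumeClaimTemplates` section. The names (`web`, `www`), the image, and the storage size are placeholders, and it assumes a default Storage Class that can provision volumes dynamically:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: web
spec:
  clusterIP: None            # headless: one DNS record per pod
  selector:
    app: web
  ports:
  - port: 80
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: web
spec:
  serviceName: web           # the headless Service above
  replicas: 3
  selector:
    matchLabels:
      app: web
  template:
    metadata:
      labels:
        app: web
    spec:
      containers:
      - name: nginx
        image: nginx:1.21    # placeholder image
        volumeMounts:
        - name: www
          mountPath: /usr/share/nginx/html
  volumeClaimTemplates:      # one PVC per pod: www-web-0, www-web-1, www-web-2
  - metadata:
      name: www
    spec:
      accessModes: [ "ReadWriteOnce" ]
      resources:
        requests:
          storage: 1Gi
```

The PVCs are not deleted when the Stateful set is scaled down, so a pod that comes back with the same number gets its previous volume again.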
.debug[[k8s/statefulsets.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/statefulsets.md)] --- ## Stateful set recap - A Stateful set manages a number of identical pods (like a Deployment) - These pods are numbered, and started/upgraded/stopped in a specific order - These pods are aware of their number (e.g., #0 can decide to be the primary, and #1 can be secondary) - These pods can find the IP addresses of the other pods in the set (through a *headless service*) - These pods can each have their own persistent storage .debug[[k8s/statefulsets.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/statefulsets.md)] --- ## Obtaining per-pod storage - Stateful Sets can have *persistent volume claim templates* (declared in `spec.volumeClaimTemplates` in the Stateful set manifest) - A claim template will create one Persistent Volume Claim per pod (the PVC will be named `<claim-name>-<stateful-set-name>-<pod-index>`) - Persistent Volume Claims are matched 1-to-1 with Persistent Volumes - Persistent Volume provisioning can be done: - automatically (by leveraging *dynamic provisioning* with a Storage Class) - manually (a human operator creates the volumes ahead of time, or when needed) ??? :EN:- Deploying apps with Stateful Sets :EN:- Understanding Persistent Volume Claims and Storage Classes :FR:- Déployer une application avec un *Stateful Set* :FR:- Comprendre les *Persistent Volume Claims* et *Storage Classes* .debug[[k8s/statefulsets.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/statefulsets.md)] --- class: pic .interstitial[] --- name: toc-running-a-consul-cluster class: title Running a Consul cluster .nav[ [Previous part](#toc-stateful-sets) | [Back to table of contents](#toc-part-4) | [Next part](#toc-pv-pvc-and-storage-classes) ] .debug[(automatically generated title slide)] --- # Running a Consul cluster - Here is a good use-case for Stateful sets!
- We are going to deploy a Consul cluster with 3 nodes - Consul is a highly-available key/value store (like etcd or ZooKeeper) - One easy way to bootstrap a cluster is to tell each node: - the addresses of other nodes - how many nodes are expected (to know when quorum is reached) .debug[[k8s/consul.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/consul.md)] --- ## Bootstrapping a Consul cluster *After reading the Consul documentation carefully (and/or asking around), we figure out the minimal command-line to run our Consul cluster.* ``` consul agent -data-dir=/consul/data -client=0.0.0.0 -server -ui \ -bootstrap-expect=3 \ -retry-join=X.X.X.X \ -retry-join=Y.Y.Y.Y ``` - Replace X.X.X.X and Y.Y.Y.Y with the addresses of other nodes - A node can include its own address in the list (it will work fine) - ... Which means that we can use the same command-line on all nodes (convenient!) .debug[[k8s/consul.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/consul.md)] --- ## Cloud Auto-join - Since version 1.4.0, Consul can use the Kubernetes API to find its peers - This is called [Cloud Auto-join] - Instead of passing an IP address, we need to pass a parameter like this: ``` consul agent -retry-join "provider=k8s label_selector=\"app=consul\"" ``` - Consul needs to be able to talk to the Kubernetes API - We can provide a `kubeconfig` file - If Consul runs in a pod, it will use the *service account* of the pod [Cloud Auto-join]: https://www.consul.io/docs/agent/cloud-auto-join.html#kubernetes-k8s- .debug[[k8s/consul.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/consul.md)] --- ## Setting up Cloud auto-join - We need to create a service account for Consul - We need to create a role that can `list` and `get` pods - We need to bind that role to the service account - And of course, we need to make sure that Consul pods use that service account
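A minimal sketch of these three resources follows. The `consul` names are assumptions (the provided `k8s/consul-1.yaml` may use different ones), and the `default` namespace in the binding should be replaced by the namespace where Consul actually runs:

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: consul
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: consul
rules:
- apiGroups: [ "" ]          # "" is the core API group (where pods live)
  resources: [ "pods" ]
  verbs: [ "get", "list" ]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: consul
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: consul
subjects:
- kind: ServiceAccount
  name: consul
  namespace: default         # assumption: adjust to Consul's namespace
```

The pod template of the Stateful set then needs `serviceAccountName: consul` so that the Consul agents run with these permissions.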
.debug[[k8s/consul.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/consul.md)] --- ## Putting it all together - The file `k8s/consul-1.yaml` defines the required resources (service account, role, role binding, service, stateful set) - Inspired by this [excellent tutorial](https://github.com/kelseyhightower/consul-on-kubernetes) by Kelsey Hightower (many features from the original tutorial were removed for simplicity) .debug[[k8s/consul.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/consul.md)] --- ## Running our Consul cluster - We'll use the provided YAML file .lab[ - Create the stateful set and associated service: ```bash kubectl apply -f ~/container.training/k8s/consul-1.yaml ``` - Check the logs as the pods come up one after another: ```bash stern consul ``` <!-- ```wait Synced node info``` ```key ^C``` --> - Check the health of the cluster: ```bash kubectl exec consul-0 -- consul members ``` ] .debug[[k8s/consul.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/consul.md)] --- ## Caveats - The scheduler may place two Consul pods on the same node - if that node fails, we lose two Consul pods at the same time - this will cause the cluster to fail - Scaling down the cluster will cause it to fail - when a Consul member leaves the cluster, it needs to inform the others - otherwise, the last remaining node doesn't have quorum and stops functioning - This Consul cluster doesn't use real persistence yet - data is stored in the containers' ephemeral filesystem - if a pod fails, its replacement starts from a blank slate .debug[[k8s/consul.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/consul.md)] --- ## Improving pod placement - We need to tell the scheduler: *do not put two of these pods on the same node!* - This is done with an `affinity` section like the following one: ```yaml affinity: podAntiAffinity: 
requiredDuringSchedulingIgnoredDuringExecution: - labelSelector: matchLabels: app: consul topologyKey: kubernetes.io/hostname ``` .debug[[k8s/consul.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/consul.md)] --- ## Using a lifecycle hook - When a Consul member leaves the cluster, it needs to execute: ```bash consul leave ``` - This is done with a `lifecycle` section like the following one: ```yaml lifecycle: preStop: exec: command: [ "sh", "-c", "consul leave" ] ``` .debug[[k8s/consul.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/consul.md)] --- ## Running a better Consul cluster - Let's try to add the scheduling constraint and lifecycle hook - We can do that in the same namespace or another one (as we like) - If we do that in the same namespace, we will see a rolling update (pods will be replaced one by one) .lab[ - Deploy a better Consul cluster: ```bash kubectl apply -f ~/container.training/k8s/consul-2.yaml ``` ] .debug[[k8s/consul.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/consul.md)] --- ## Still no persistence, though - We aren't using actual persistence yet (no `volumeClaimTemplate`, Persistent Volume, etc.) - What happens if we lose a pod? - a new pod gets rescheduled (with an empty state) - the new pod tries to connect to the two others - it will be accepted (after 1-2 minutes of instability) - and it will retrieve the data from the other pods .debug[[k8s/consul.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/consul.md)] --- ## Failure modes - What happens if we lose two pods? - manual repair will be required - we will need to instruct the remaining one to act solo - then rejoin new pods - What happens if we lose three pods? (aka all of them) - we lose all the data (ouch) ??? 
:EN:- Scheduling pods together or separately :EN:- Example: deploying a Consul cluster :FR:- Lancer des pods ensemble ou séparément :FR:- Example : lancer un cluster Consul .debug[[k8s/consul.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/consul.md)] --- class: pic .interstitial[] --- name: toc-pv-pvc-and-storage-classes class: title PV, PVC, and Storage Classes .nav[ [Previous part](#toc-running-a-consul-cluster) | [Back to table of contents](#toc-part-4) | [Next part](#toc-openebs-) ] .debug[(automatically generated title slide)] --- # PV, PVC, and Storage Classes - When an application needs storage, it creates a PersistentVolumeClaim (either directly, or through a volume claim template in a Stateful Set) - The PersistentVolumeClaim is initially `Pending` - Kubernetes then looks for a suitable PersistentVolume (maybe one is immediately available; maybe we need to wait for provisioning) - Once a suitable PersistentVolume is found, the PVC becomes `Bound` - The PVC can then be used by a Pod (as long as the PVC is `Pending`, the Pod cannot run) .debug[[k8s/pv-pvc-sc.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/pv-pvc-sc.md)] --- ## Access modes - PV and PVC have *access modes*: - ReadWriteOnce (only one node can access the volume at a time) - ReadWriteMany (multiple nodes can access the volume simultaneously) - ReadOnlyMany (multiple nodes can access, but they can't write) - ReadWriteOncePod (only one pod can access the volume; new in Kubernetes 1.22) - A PVC lists the access modes that it requires - A PV lists the access modes that it supports ⚠️ A PV with only ReadWriteMany won't satisfy a PVC with ReadWriteOnce! 
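For instance, a minimal PVC requesting a `ReadWriteOnce` volume could look like this. The name and size are placeholders; since no `storageClassName` is given, the default storage class (if there is one) will be used:

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: my-claim             # placeholder name
spec:
  accessModes:
  - ReadWriteOnce            # a matching PV must support this mode
  resources:
    requests:
      storage: 1Gi           # a matching PV must be at least this big
```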
.debug[[k8s/pv-pvc-sc.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/pv-pvc-sc.md)] --- ## Capacity - A PVC must express a storage size request (field `spec.resources.requests.storage`, in bytes, usually with a suffix like `Gi`) - A PV must express its size (field `spec.capacity.storage`, same format) - Kubernetes will only match a PV and PVC if the PV is big enough - These fields are only used for "matchmaking" purposes: - nothing prevents the Pod mounting the PVC from using more space - nothing requires the PV to actually be that big .debug[[k8s/pv-pvc-sc.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/pv-pvc-sc.md)] --- ## Storage Class - What if we have multiple storage systems available? (e.g. NFS and iSCSI; or AzureFile and AzureDisk; or Cinder and Ceph...) - What if we have a storage system with multiple tiers? (e.g. SAN with RAID1 and RAID5; general purpose vs. I/O-optimized EBS...) - Kubernetes lets us define *storage classes* to represent these (see if you have any available at the moment with `kubectl get storageclasses`) .debug[[k8s/pv-pvc-sc.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/pv-pvc-sc.md)] --- ## Using storage classes - Optionally, each PV and each PVC can reference a StorageClass (field `spec.storageClassName`) - When creating a PVC, specifying a StorageClass means “use that particular storage system to provision the volume!” - Storage classes are necessary for [dynamic provisioning](https://kubernetes.io/docs/concepts/storage/dynamic-provisioning/) (but we can also ignore them and perform manual provisioning) .debug[[k8s/pv-pvc-sc.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/pv-pvc-sc.md)] --- ## Default storage class - We can define a *default storage class* (by annotating it with `storageclass.kubernetes.io/is-default-class=true`) - When a PVC is created, **IF** it doesn't indicate which storage class to use **AND** there is a default
storage class **THEN** the PVC `storageClassName` is set to the default storage class .debug[[k8s/pv-pvc-sc.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/pv-pvc-sc.md)] --- ## Additional constraints - A PersistentVolumeClaim can also specify a volume selector (referring to labels on the PV) - A PersistentVolume can also be created with a `claimRef` (indicating to which PVC it should be bound) .debug[[k8s/pv-pvc-sc.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/pv-pvc-sc.md)] --- class: extra-details ## Which PV gets associated with a PVC? - The PV must be `Available` - The PV must satisfy the PVC constraints (access mode, size, optional selector, optional storage class) - The PVs with the closest access mode are picked - Then the PVs with the closest size - It is possible to specify a `claimRef` when creating a PV (this will associate it with the specified PVC, but only if the PV satisfies all the requirements of the PVC; otherwise another PV might end up being picked) - For all the details about the PersistentVolumeClaimBinder, check [this doc](https://github.com/kubernetes/design-proposals-archive/blob/main/storage/persistent-storage.md#matching-and-binding) .debug[[k8s/pv-pvc-sc.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/pv-pvc-sc.md)] --- ## Creating a PVC - Let's create a standalone PVC and see what happens! .lab[ - Check if we have a StorageClass: ```bash kubectl get storageclasses ``` - Create the PVC: ```bash kubectl create -f ~/container.training/k8s/pvc.yaml ``` - Check the PVC: ```bash kubectl get pvc ``` ] .debug[[k8s/pv-pvc-sc.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/pv-pvc-sc.md)] --- ## Four possibilities 1. If we have a default StorageClass with *immediate* binding: *a PV was created and associated with the PVC* 2.
If we have a default StorageClass that *waits for first consumer*: *the PVC is still `Pending` but has a `STORAGECLASS`* ⚠️ 3. If we don't have a default StorageClass: *the PVC is still `Pending`, without a `STORAGECLASS`* 4. If we have a StorageClass, but it doesn't work: *the PVC is still `Pending` but has a `STORAGECLASS`* ⚠️ .debug[[k8s/pv-pvc-sc.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/pv-pvc-sc.md)] --- ## Immediate vs WaitForFirstConsumer - Immediate = as soon as there is a `Pending` PVC, create a PV - What if: - the PV is only available on a single node (e.g. local volume) - ...or on a subset of nodes (e.g. SAN HBA, EBS AZ...) - the Pod that will use the PVC has scheduling constraints - these constraints turn out to be incompatible with the PV - WaitForFirstConsumer = don't provision the PV until a Pod that uses the PVC is scheduled .debug[[k8s/pv-pvc-sc.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/pv-pvc-sc.md)] --- ## Using the PVC - Let's mount the PVC in a Pod - We will use a stray Pod (no Deployment, StatefulSet, etc.) - We will use [k8s/mounter.yaml](https://github.com/jpetazzo/container.training/tree/master/k8s/mounter.yaml), shown on the next slide - We'll need to update the `claimName`!
⚠️ .debug[[k8s/pv-pvc-sc.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/pv-pvc-sc.md)] --- ```yaml kind: Pod apiVersion: v1 metadata: generateName: mounter- labels: container.training/mounter: "" spec: volumes: - name: pvc persistentVolumeClaim: claimName: my-pvc-XYZ45 containers: - name: mounter image: alpine stdin: true tty: true volumeMounts: - name: pvc mountPath: /pvc workingDir: /pvc ``` .debug[[k8s/pv-pvc-sc.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/pv-pvc-sc.md)] --- ## Running the Pod .lab[ - Edit the `mounter.yaml` manifest - Set the `claimName` to the name of our PVC - Create the Pod - Check the status of the PV and PVC ] Note: this "mounter" Pod can be useful to inspect the content of a PVC. .debug[[k8s/pv-pvc-sc.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/pv-pvc-sc.md)] --- ## Scenario 1 & 2 If we have a default Storage Class that can provision PVs dynamically... - We should now have a new PV - The PV and the PVC should be `Bound` together .debug[[k8s/pv-pvc-sc.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/pv-pvc-sc.md)] --- ## Scenario 3 If we don't have a default Storage Class, we must create the PV manually. ```bash kubectl create -f ~/container.training/k8s/pv.yaml ``` After a few seconds, check that the PV and PVC are bound: ```bash kubectl get pv,pvc ``` .debug[[k8s/pv-pvc-sc.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/pv-pvc-sc.md)] --- ## Scenario 4 If our default Storage Class can't provision a PV, let's do it manually. The PV must specify the correct `storageClassName`.
```bash STORAGECLASS=$(kubectl get pvc --selector=container.training/pvc \ -o jsonpath={..storageClassName}) kubectl patch -f ~/container.training/k8s/pv.yaml --dry-run=client -o yaml \ --patch '{"spec": {"storageClassName": "'$STORAGECLASS'"}}' \ | kubectl create -f- ``` Check that the PV and PVC are bound: ```bash kubectl get pv,pvc ``` .debug[[k8s/pv-pvc-sc.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/pv-pvc-sc.md)] --- ## Checking the Pod - If the PVC was `Pending`, then the Pod was `Pending` too - Once the PVC is `Bound`, the Pod can be scheduled and can run - Once the Pod is `Running`, check it out with `kubectl attach -ti` .debug[[k8s/pv-pvc-sc.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/pv-pvc-sc.md)] --- ## PV and PVC lifecycle - We can't delete a PV if it's `Bound` - If we `kubectl delete` it, it goes to `Terminating` state - We can't delete a PVC if it's in use by a Pod - Likewise, if we `kubectl delete` it, it goes to `Terminating` state - Deletion is prevented by *finalizers* (=like a post-it note saying “don't delete me!”) - When the mounting Pods are deleted, their PVCs are freed up - When PVCs are deleted, their PVs are freed up ??? 
:EN:- Storage provisioning :EN:- PV, PVC, StorageClass :FR:- Création de volumes :FR:- PV, PVC, et StorageClass .debug[[k8s/pv-pvc-sc.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/pv-pvc-sc.md)] --- ## Putting it all together - We want to run that Consul cluster *and* actually persist data - We'll use a StatefulSet that will leverage PV and PVC - If we have a dynamic provisioner: *the cluster will come up right away* - If we don't have a dynamic provisioner: *we will need to create Persistent Volumes manually* .debug[[k8s/volume-claim-templates.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/volume-claim-templates.md)] --- ## Persistent Volume Claims and Stateful sets - A Stateful set can define one (or more) `volumeClaimTemplate` - Each `volumeClaimTemplate` will create one Persistent Volume Claim per Pod - Each Pod will therefore have its own individual volume - These volumes are numbered (like the Pods) - Example: - a Stateful set is named `consul` - it is scaled to 3 replicas - it has a `volumeClaimTemplate` named `data` - then it will create pods `consul-0`, `consul-1`, `consul-2` - these pods will have volumes named `data`, referencing PersistentVolumeClaims named `data-consul-0`, `data-consul-1`, `data-consul-2` .debug[[k8s/volume-claim-templates.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/volume-claim-templates.md)] --- ## Persistent Volume Claims are sticky - When updating the stateful set (e.g. image upgrade), each pod keeps its volume - When pods get rescheduled (e.g.
node failure), they keep their volume (this requires a storage system that is not node-local) - These volumes are not automatically deleted (when the stateful set is scaled down or deleted) - If a stateful set is scaled back up later, the pods get their data back .debug[[k8s/volume-claim-templates.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/volume-claim-templates.md)] --- ## Deploying Consul - Let's use a new manifest for our Consul cluster - The only differences between that file and the previous one are: - `volumeClaimTemplate` defined in the Stateful Set spec - the corresponding `volumeMounts` in the Pod spec .lab[ - Apply the persistent Consul YAML file: ```bash kubectl apply -f ~/container.training/k8s/consul-3.yaml ``` ] .debug[[k8s/volume-claim-templates.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/volume-claim-templates.md)] --- ## No dynamic provisioner - If we don't have a dynamic provisioner, we need to create the PVs - We are going to use local volumes (similar conceptually to `hostPath` volumes) - We can use local volumes without installing extra plugins - However, they are tied to a node - If that node goes down, the volume becomes unavailable .debug[[k8s/volume-claim-templates.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/volume-claim-templates.md)] --- ## Observing the situation - Let's look at Persistent Volume Claims and Pods .lab[ - Check that we now have an unbound Persistent Volume Claim: ```bash kubectl get pvc ``` - We don't have any Persistent Volume: ```bash kubectl get pv ``` - The Pod `consul-0` is not scheduled yet: ```bash kubectl get pods -o wide ``` ] *Hint: leave these commands running with `-w` in different windows.* .debug[[k8s/volume-claim-templates.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/volume-claim-templates.md)] --- ## Explanations - In a Stateful Set, the Pods are started one by one 
- `consul-1` won't be created until `consul-0` is running - `consul-0` has a dependency on an unbound Persistent Volume Claim - The scheduler won't schedule the Pod until the PVC is bound (because the PVC might be bound to a volume that is only available on a subset of nodes; for instance EBS volumes are tied to an availability zone) .debug[[k8s/volume-claim-templates.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/volume-claim-templates.md)] --- ## Creating Persistent Volumes - Let's create 3 local directories (`/mnt/consul`) on node2, node3, node4 - Then create 3 Persistent Volumes corresponding to these directories .lab[ - Create the local directories: ```bash for NODE in node2 node3 node4; do ssh $NODE sudo mkdir -p /mnt/consul done ``` - Create the PV objects: ```bash kubectl apply -f ~/container.training/k8s/volumes-for-consul.yaml ``` ] .debug[[k8s/volume-claim-templates.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/volume-claim-templates.md)] --- ## Check our Consul cluster - The PVs that we created will be automatically matched with the PVCs - Once a PVC is bound, its pod can start normally - Once the pod `consul-0` has started, `consul-1` can be created, etc. - Eventually, our Consul cluster is up, and backed by "persistent" volumes .lab[ - Check that our Consul cluster indeed has 3 members: ```bash kubectl exec consul-0 -- consul members ``` ] .debug[[k8s/volume-claim-templates.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/volume-claim-templates.md)] --- ## Devil is in the details (1/2) - The size of the Persistent Volumes is bogus (it is used when matching PVs and PVCs together, but there is no actual quota or limit) - The Pod might end up using more than the requested size - The PV may or may not have the capacity that it's advertising - It works well with dynamically provisioned block volumes - ...Less so in other scenarios!
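To make this concrete, here is a sketch of what one of the manually created local PVs could look like. This is an assumption: the actual content of `volumes-for-consul.yaml` may differ, the PV name and size are hypothetical, and the hostname value assumes that the node's `kubernetes.io/hostname` label is `node2`. Kubernetes only uses `capacity.storage` to match this PV with a PVC (so it should be at least the size requested by the `volumeClaimTemplate`); it never checks that `/mnt/consul` really has that much space.

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: consul-node2          # hypothetical name: one PV per node
spec:
  capacity:
    storage: 10Gi             # advertised size: only used to match PVs and PVCs
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  local:
    path: /mnt/consul         # directory created earlier with mkdir
  nodeAffinity:               # required for local volumes: ties this PV to one node
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
                - node2       # assumed node name
```

Because of the `nodeAffinity` section, a Pod using this PV can only be scheduled on that node, which is why a local volume becomes unavailable when its node goes down.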
.debug[[k8s/volume-claim-templates.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/volume-claim-templates.md)] --- ## Devil is in the details (2/2) - This specific example worked because we had exactly 1 free PV per node: - if we had created multiple PVs per node ... - we could have ended up with two PVCs bound to PVs on the same node ... - which would have required two pods to be on the same node ... - which is forbidden by the anti-affinity constraints in the StatefulSet - To avoid that, we need to associate the PVs with a Storage Class that has: ```yaml volumeBindingMode: WaitForFirstConsumer ``` (this means that a PVC will be bound to a PV only after being used by a Pod) - See [this blog post](https://kubernetes.io/blog/2018/04/13/local-persistent-volumes-beta/) for more details .debug[[k8s/volume-claim-templates.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/volume-claim-templates.md)] --- ## If we have a dynamic provisioner These are the steps when dynamic provisioning happens: 1. The Stateful Set creates PVCs according to the `volumeClaimTemplate`. 2. The Stateful Set creates Pods using these PVCs. 3. The PVCs automatically get our default Storage Class (in their `storageClassName` field). 4. The dynamic provisioner provisions volumes and creates the corresponding PVs. 5. The PersistentVolumeClaimBinder associates the PVs and the PVCs together. 6. The PVCs are now bound, so the Pods can start. .debug[[k8s/volume-claim-templates.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/volume-claim-templates.md)] --- ## Validating persistence (1) - When the StatefulSet is deleted, the PVC and PV still exist - And if we recreate an identical StatefulSet, the PVC and PV are reused - Let's see that!
.lab[ - Put some data in Consul: ```bash kubectl exec consul-0 -- consul kv put answer 42 ``` - Delete the Consul cluster: ```bash kubectl delete -f ~/container.training/k8s/consul-3.yaml ``` ] .debug[[k8s/volume-claim-templates.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/volume-claim-templates.md)] --- ## Validating persistence (2) .lab[ - Wait until the last Pod is deleted: ```bash kubectl wait pod consul-0 --for=delete ``` - Check that PV and PVC are still here: ```bash kubectl get pv,pvc ``` ] .debug[[k8s/volume-claim-templates.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/volume-claim-templates.md)] --- ## Validating persistence (3) .lab[ - Re-create the cluster: ```bash kubectl apply -f ~/container.training/k8s/consul-3.yaml ``` - Wait until it's up - Then access the key that we set earlier: ```bash kubectl exec consul-0 -- consul kv get answer ``` ] .debug[[k8s/volume-claim-templates.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/volume-claim-templates.md)] --- ## Cleaning up - PV and PVC don't get deleted automatically - This is great (less risk of accidental data loss) - This is not great (storage usage increases) - Managing PVC lifecycle: - remove them manually - add their StatefulSet to their `ownerReferences` - delete the Namespace that they belong to ??? 
:EN:- Defining volumeClaimTemplates :FR:- Définir des volumeClaimTemplates .debug[[k8s/volume-claim-templates.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/volume-claim-templates.md)] --- class: pic .interstitial[] --- name: toc-openebs- class: title OpenEBS .nav[ [Previous part](#toc-pv-pvc-and-storage-classes) | [Back to table of contents](#toc-part-4) | [Next part](#toc-stateful-failover) ] .debug[(automatically generated title slide)] --- # OpenEBS - [OpenEBS] is a popular open-source storage solution for Kubernetes - Uses the concept of "Container Attached Storage" (1 volume = 1 dedicated controller pod + a set of replica pods) - Supports a wide range of storage engines: - LocalPV: local volumes (hostpath or device), no replication - Jiva: for lighter workloads with basic cloning/snapshotting - cStor: more powerful engine that also supports resizing, RAID, disk pools ... - [Mayastor]: newer, even more powerful engine with NVMe and vhost-user support [OpenEBS]: https://openebs.io/ [Mayastor]: https://github.com/openebs/MayaStor#mayastor .debug[[k8s/openebs.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/openebs.md)] --- class: extra-details ## What are all these storage engines? 
- LocalPV is great if we want good performance, no replication, easy setup (it is similar to the Rancher local path provisioner) - Jiva is great if we want replication and easy setup (data is stored in containers' filesystems) - cStor is more powerful and flexible, but requires more extensive setup - Mayastor is designed to achieve extreme performance levels (with the right hardware and disks) - The OpenEBS documentation has a [good comparison of engines] to help us pick [good comparison of engines]: https://docs.openebs.io/docs/next/casengines.html#cstor-vs-jiva-vs-localpv-features-comparison .debug[[k8s/openebs.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/openebs.md)] --- ## Installing OpenEBS with Helm - The OpenEBS control plane can be installed with Helm - It will run as a set of containers on Kubernetes worker nodes .lab[ - Install OpenEBS: ```bash helm upgrade --install openebs openebs \ --repo https://openebs.github.io/charts \ --namespace openebs --create-namespace \ --version 2.12.9 ``` ] ⚠️ We stick to OpenEBS 2.x because 3.x requires additional configuration. .debug[[k8s/openebs.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/openebs.md)] --- ## Checking what was installed - Wait a little bit ... 
.lab[ - Look at the pods in the `openebs` namespace: ```bash kubectl get pods --namespace openebs ``` - And the StorageClasses that were created: ```bash kubectl get sc ``` ] .debug[[k8s/openebs.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/openebs.md)] --- ## The default StorageClasses - OpenEBS typically creates three default StorageClasses - `openebs-jiva-default` provisions 3 replicated Jiva pods per volume - data is stored in `/openebs` in the replica pods - `/openebs` is a localpath volume mapped to `/var/openebs/pvc-...` on the node - `openebs-hostpath` uses LocalPV with local directories - volumes are hostpath volumes created in `/var/openebs/local` on each node - `openebs-device` uses LocalPV with local block devices - requires available disks and/or a bit of extra configuration - the default configuration filters out loop, LVM, MD devices .debug[[k8s/openebs.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/openebs.md)] --- ## When do we need custom StorageClasses? - To store LocalPV hostpath volumes on a different path on the host - To change the number of replicated Jiva pods - To use a different Jiva pool (i.e. a different path on the host to store the Jiva volumes) - To create a cStor pool - ... 
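For instance, to change the number of replicated Jiva pods, a custom StorageClass could look like the sketch below. This assumes the legacy (non-CSI) Jiva engine installed by the OpenEBS 2.x chart, configured through the `ReplicaCount` and `StoragePool` keys of the `cas.openebs.io/config` annotation; the class name is hypothetical, and it's a good idea to compare with `kubectl get storageclass openebs-jiva-default -o yaml` before using it.

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: openebs-jiva-2-replicas     # hypothetical name
  annotations:
    openebs.io/cas-type: jiva       # selects the Jiva storage engine
    cas.openebs.io/config: |
      - name: ReplicaCount
        value: "2"
      - name: StoragePool
        value: default
provisioner: openebs.io/provisioner-iscsi
```

With 2 replicas and anti-affinity between them, this class would still need at least 2 nodes to provision a volume.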
.debug[[k8s/openebs.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/openebs.md)] --- class: extra-details ## Defining a custom StorageClass Example for a LocalPV hostpath class using an extra mount on `/mnt/vol001`: ```yaml apiVersion: storage.k8s.io/v1 kind: StorageClass metadata: name: localpv-hostpath-mntvol001 annotations: openebs.io/cas-type: local cas.openebs.io/config: | - name: BasePath value: "/mnt/vol001" - name: StorageType value: "hostpath" provisioner: openebs.io/local ``` - `provisioner` needs to be set accordingly - Storage engine is chosen by specifying the annotation `openebs.io/cas-type` - Storage engine configuration is set with the annotation `cas.openebs.io/config` .debug[[k8s/openebs.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/openebs.md)] --- ## Checking the default hostpath StorageClass - Let's inspect the StorageClass that OpenEBS created for us .lab[ - Let's look at the OpenEBS LocalPV hostpath StorageClass: ```bash kubectl get storageclass openebs-hostpath -o yaml ``` ] .debug[[k8s/openebs.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/openebs.md)] --- ## Create a host path PVC - Let's create a Persistent Volume Claim using an explicit StorageClass .lab[ ```bash kubectl apply -f - <<EOF kind: PersistentVolumeClaim apiVersion: v1 metadata: name: local-hostpath-pvc spec: storageClassName: openebs-hostpath accessModes: - ReadWriteOnce resources: requests: storage: 1G EOF ``` ] .debug[[k8s/openebs.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/openebs.md)] --- ## Making sure that a PV was created for our PVC - Normally, the `openebs-hostpath` StorageClass should have created a PV for our PVC .lab[ - Look at the PV and PVC: ```bash kubectl get pv,pvc ``` ] .debug[[k8s/openebs.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/openebs.md)] --- ## Create a Pod to consume the PV .lab[ - Create a
Pod using that PVC: ```bash kubectl apply -f ~/container.training/k8s/openebs-pod.yaml ``` - Here are the sections that declare and use the volume: ```yaml volumes: - name: my-storage persistentVolumeClaim: claimName: local-hostpath-pvc containers: ... volumeMounts: - mountPath: /mnt/storage name: my-storage ``` ] .debug[[k8s/openebs.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/openebs.md)] --- ## Verify that data is written on the node - Let's find the file written by the Pod on the node where the Pod is running .lab[ - Get the worker node where the pod is located: ```bash kubectl get pod openebs-local-hostpath-pod -ojsonpath={.spec.nodeName} ``` - SSH into the node - Check the volume content: ```bash sudo tail /var/openebs/local/pvc-*/greet.txt ``` ] .debug[[k8s/openebs.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/openebs.md)] --- ## Heads up! - The following labs and exercises will use the Jiva storage class - This storage class creates 3 replicas by default - It uses *anti-affinity* placement constraints to put these replicas on different nodes - **This requires a cluster with multiple nodes!** - It also requires the iSCSI client (aka *initiator*) to be installed on the nodes - On many platforms, the iSCSI client is preinstalled and will start automatically - If it doesn't, you might want to check [this documentation page] for details [this documentation page]: https://docs.openebs.io/docs/next/prerequisites.html .debug[[k8s/openebs.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/openebs.md)] --- ## The default StorageClass - The PVC that we defined earlier specified an explicit StorageClass - We can also set a default StorageClass - It will then be used for all PVCs that *don't* specify an explicit StorageClass - This is done with the annotation `storageclass.kubernetes.io/is-default-class` .lab[ - Check if we have a default StorageClass: ```bash kubectl get
storageclasses ``` ] - The default StorageClass (if there is one) is shown with `(default)` .debug[[k8s/openebs.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/openebs.md)] --- ## Setting a default StorageClass - Let's make `openebs-jiva-default` the default StorageClass .lab[ - Remove the annotation (just in case we already have a default class): ```bash kubectl annotate storageclass storageclass.kubernetes.io/is-default-class- --all ``` - Annotate the Jiva StorageClass: ```bash kubectl annotate storageclasses \ openebs-jiva-default storageclass.kubernetes.io/is-default-class=true ``` - Check the result: ```bash kubectl get storageclasses ``` ] .debug[[k8s/openebs.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/openebs.md)] --- ## We're ready now! - We have a StorageClass that can provision PersistentVolumes - These PersistentVolumes will be replicated across nodes - They should be able to withstand single-node failures ??? :EN:- Understanding Container Attached Storage (CAS) :EN:- Deploying stateful apps with OpenEBS :FR:- Comprendre le "Container Attached Storage" (CAS) :FR:- Déployer une application "stateful" avec OpenEBS .debug[[k8s/openebs.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/openebs.md)] --- class: pic .interstitial[] --- name: toc-stateful-failover class: title Stateful failover .nav[ [Previous part](#toc-openebs-) | [Back to table of contents](#toc-part-4) | [Next part](#toc-designing-an-operator) ] .debug[(automatically generated title slide)] --- # Stateful failover - How can we achieve true durability? - How can we store data that would survive the loss of a node?
-- - We need to use Persistent Volumes backed by highly available storage systems - There are many ways to achieve that: - leveraging our cloud's storage APIs - using NAS/SAN systems or file servers - distributed storage systems .debug[[k8s/stateful-failover.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/stateful-failover.md)] --- ## Our test scenario - We will use such highly available storage to deploy a SQL database (PostgreSQL) - We will insert some test data in the database - We will disrupt the node running the database - We will see how it recovers .debug[[k8s/stateful-failover.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/stateful-failover.md)] --- ## Our Postgres Stateful set - The next slide shows `k8s/postgres.yaml` - It defines a Stateful set - With a `volumeClaimTemplate` requesting a 1 GiB volume - That volume will be mounted to `/var/lib/postgresql/data` .debug[[k8s/stateful-failover.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/stateful-failover.md)] --- .small[.small[ ```yaml apiVersion: apps/v1 kind: StatefulSet metadata: name: postgres spec: selector: matchLabels: app: postgres serviceName: postgres template: metadata: labels: app: postgres spec: #schedulerName: stork initContainers: - name: rmdir image: alpine volumeMounts: - mountPath: /vol name: postgres command: ["sh", "-c", "if [ -d /vol/lost+found ]; then rmdir /vol/lost+found; fi"] containers: - name: postgres image: postgres:12 env: - name: POSTGRES_HOST_AUTH_METHOD value: trust volumeMounts: - mountPath: /var/lib/postgresql/data name: postgres volumeClaimTemplates: - metadata: name: postgres spec: accessModes: ["ReadWriteOnce"] resources: requests: storage: 1Gi ``` ]] .debug[[k8s/stateful-failover.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/stateful-failover.md)] --- ## Creating the Stateful set - Before applying the YAML, watch what's going on with `kubectl get events -w` .lab[ -
Apply that YAML: ```bash kubectl apply -f ~/container.training/k8s/postgres.yaml ``` <!-- ```hide kubectl wait pod postgres-0 --for condition=ready``` --> ] .debug[[k8s/stateful-failover.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/stateful-failover.md)] --- ## Testing our PostgreSQL pod - We will use `kubectl exec` to get a shell in the pod - Good to know: we need to use the `postgres` user in the pod .lab[ - Get a shell in the pod, as the `postgres` user: ```bash kubectl exec -ti postgres-0 -- su postgres ``` <!-- autopilot prompt detection expects $ or # at the beginning of the line. ```wait postgres@postgres``` ```keys PS1="\u@\h:\w\n\$ "``` ```key ^J``` --> - Check that default databases have been created correctly: ```bash psql -l ``` ] (This should show us 3 lines: postgres, template0, and template1.) .debug[[k8s/stateful-failover.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/stateful-failover.md)] --- ## Inserting data in PostgreSQL - We will create a database and populate it with `pgbench` .lab[ - Create a database named `demo`: ```bash createdb demo ``` - Populate it with `pgbench`: ```bash pgbench -i demo ``` ] - The `-i` flag means "initialize" (create the test tables and fill them with rows) - If you want more data in the test tables, add e.g.
`-s 10` (to get 10x more rows) .debug[[k8s/stateful-failover.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/stateful-failover.md)] --- ## Checking how much data we have now - The `pgbench` tool inserts rows in table `pgbench_accounts` .lab[ - Check that the `demo` database exists: ```bash psql -l ``` - Check how many rows we have in `pgbench_accounts`: ```bash psql demo -c "select count(*) from pgbench_accounts" ``` - Check that `pgbench_history` is currently empty: ```bash psql demo -c "select count(*) from pgbench_history" ``` ] .debug[[k8s/stateful-failover.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/stateful-failover.md)] --- ## Testing the load generator - Let's use `pgbench` to generate a few transactions .lab[ - Run `pgbench` for 10 seconds, reporting progress every second: ```bash pgbench -P 1 -T 10 demo ``` - Check the size of the history table now: ```bash psql demo -c "select count(*) from pgbench_history" ``` ] Note: on small cloud instances, a typical speed is about 100 transactions/second. .debug[[k8s/stateful-failover.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/stateful-failover.md)] --- ## Generating transactions - Now let's use `pgbench` to generate more transactions - While it's running, we will disrupt the database server .lab[ - Run `pgbench` for 10 minutes, reporting progress every second: ```bash pgbench -P 1 -T 600 demo ``` - You can use a longer time period if you need more time to run the next steps <!-- ```tmux split-pane -h``` --> ] .debug[[k8s/stateful-failover.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/stateful-failover.md)] --- ## Find out which node is hosting the database - We can find that information with `kubectl get pods -o wide` .lab[ - Check the node running the database: ```bash kubectl get pod postgres-0 -o wide ``` ] We are going to disrupt that node.
-- By "disrupt" we mean: "disconnect it from the network". .debug[[k8s/stateful-failover.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/stateful-failover.md)] --- ## Node failover ⚠️ This will partially break your cluster! - We are going to disconnect the node running PostgreSQL from the cluster - We will see what happens, and how to recover - We will not reconnect the node to the cluster - This whole lab will take at least 10-15 minutes (due to various timeouts) ⚠️ Only do this lab at the very end, when you don't need to run anything else afterwards! .debug[[k8s/stateful-failover.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/stateful-failover.md)] --- ## Disconnecting the node from the cluster .lab[ - Find out where the Pod is running, and SSH into that node: ```bash kubectl get pod postgres-0 -o jsonpath={.spec.nodeName} ssh nodeX ``` - Check the name of the network interface: ```bash sudo ip route ls default ``` - The output should look like this: ``` default via 10.10.0.1 `dev ensX` proto dhcp src 10.10.0.13 metric 100 ``` - Shut down the network interface: ```bash sudo ip link set ensX down ``` ] .debug[[k8s/stateful-failover.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/stateful-failover.md)] --- class: extra-details ## Another way to disconnect the node - We can also use `iptables` to block all traffic exiting the node (except SSH traffic, so we can repair the node later if needed) .lab[ - SSH to the node to disrupt: ```bash ssh `nodeX` ``` - Allow SSH traffic leaving the node, but block all other traffic: ```bash sudo iptables -I OUTPUT -p tcp --sport 22 -j ACCEPT sudo iptables -I OUTPUT 2 -j DROP ``` ] .debug[[k8s/stateful-failover.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/stateful-failover.md)] --- ## Watch what's going on - Let's look at the status of Nodes, Pods, and Events .lab[ - In a first pane/tab/window, check Nodes
and Pods: ```bash watch kubectl get nodes,pods -o wide ``` - In another pane/tab/window, check Events: ```bash kubectl get events --watch ``` ] .debug[[k8s/stateful-failover.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/stateful-failover.md)] --- ## Node Ready → NotReady - After \~30 seconds, the control plane stops receiving heartbeats from the Node - The Node is marked NotReady - It is not *schedulable* anymore (the scheduler won't place new pods there, except some special cases) - All Pods on that Node are also *not ready* (they get removed from service Endpoints) - ... But nothing else happens for now (the control plane is waiting: maybe the Node will come back shortly?) .debug[[k8s/stateful-failover.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/stateful-failover.md)] --- ## Pod eviction - After \~5 minutes, the control plane will evict most Pods from the Node - These Pods are now `Terminating` - The Pods controlled by e.g. ReplicaSets are automatically moved (or rather: new Pods are created to replace them) - But nothing happens to the Pods controlled by StatefulSets at this point (they remain `Terminating` forever) - Why? 🤔 -- - This is to avoid *split brain scenarios* .debug[[k8s/stateful-failover.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/stateful-failover.md)] --- class: extra-details ## Split brain 🧠⚡️🧠 - Imagine that we create a replacement pod `postgres-0` on another Node - And 15 minutes later, the Node is reconnected and the original `postgres-0` comes back - Which one is the "right" one? - What if they have conflicting data? 😱 - We *cannot* let that happen! - Kubernetes won't do it - ... 
Unless we tell it to .debug[[k8s/stateful-failover.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/stateful-failover.md)] --- ## The Node is gone - One thing we can do is tell Kubernetes "the Node won't come back" (there are other methods, but this is the simplest one here) - This is done with a simple `kubectl delete node` .lab[ - `kubectl delete` the Node that we disconnected ] .debug[[k8s/stateful-failover.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/stateful-failover.md)] --- ## Pod rescheduling - Kubernetes removes the Node - After a brief period of time (\~1 minute) the "Terminating" Pods are removed - A replacement Pod is created on another Node - ... But it doesn't start yet! - Why? 🤔 .debug[[k8s/stateful-failover.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/stateful-failover.md)] --- ## Multiple attachment - By default, a disk can only be attached to one Node at a time (sometimes it's a hardware or API limitation; sometimes enforced in software) - In our Events, we should see `FailedAttachVolume` and `FailedMount` messages - After \~5 more minutes, the disk will be force-detached from the old Node - ... Which will allow attaching it to the new Node! 🎉 - The Pod will then be able to start - Failover is complete! .debug[[k8s/stateful-failover.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/stateful-failover.md)] --- ## Check that our data is still available - We are going to reconnect to the (new) pod and check .lab[ - Get a shell on the pod: ```bash kubectl exec -ti postgres-0 -- su postgres ``` <!-- ```wait postgres@postgres``` ```keys PS1="\u@\h:\w\n\$ "``` ```key ^J``` --> - Check how many transactions are now in the `pgbench_history` table: ```bash psql demo -c "select count(*) from pgbench_history" ``` <!-- ```key ^D``` --> ] If the 10-second test that we ran earlier gave e.g.
80 transactions per second, and we failed the node after 30 seconds, we should have about 2400 rows in that table. .debug[[k8s/stateful-failover.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/stateful-failover.md)] --- ## Double-check that the pod has really moved - Just to make sure the system is not bluffing! .lab[ - Look at which node the pod is now running on ```bash kubectl get pod postgres-0 -o wide ``` ] ??? :EN:- Using highly available persistent volumes :EN:- Example: deploying a database that can withstand node outages :FR:- Utilisation de volumes à haute disponibilité :FR:- Exemple : déployer une base de données survivant à la défaillance d'un nœud .debug[[k8s/stateful-failover.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/stateful-failover.md)] --- class: pic .interstitial[] --- name: toc-designing-an-operator class: title Designing an operator .nav[ [Previous part](#toc-stateful-failover) | [Back to table of contents](#toc-part-4) | [Next part](#toc-writing-an-tiny-operator) ] .debug[(automatically generated title slide)] --- # Designing an operator - Once we understand CRDs and operators, it's tempting to use them everywhere - Yes, we can do (almost) everything with operators ... - ... But *should we?* - Very often, the answer is **“no!”** - Operators are powerful, but significantly more complex than other solutions .debug[[k8s/operators-design.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/operators-design.md)] --- ## When should we (not) use operators? - Operators are great if our app needs to react to cluster events (nodes or pods going down, and requiring extensive reconfiguration) - Operators *might* be helpful to encapsulate complexity (manipulate one single custom resource for an entire stack) - Operators are probably overkill if a Helm chart would suffice - That being said, if we really want to write an operator ... Read on!
.debug[[k8s/operators-design.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/operators-design.md)] --- ## What does it take to write an operator? - Writing a quick-and-dirty operator, or a POC/MVP, is easy - Writing a robust operator is hard - We will describe the general idea - We will identify some of the associated challenges - We will list a few tools that can help us .debug[[k8s/operators-design.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/operators-design.md)] --- ## Top-down vs. bottom-up - Both approaches are possible - Let's see what they entail, and their respective pros and cons .debug[[k8s/operators-design.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/operators-design.md)] --- ## Top-down approach - Start with high-level design (see next slide) - Pros: - can yield cleaner design that will be more robust - Cons: - must be able to anticipate all the events that might happen - design will be better only to the extent of what we anticipated - hard to anticipate if we don't have production experience .debug[[k8s/operators-design.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/operators-design.md)] --- ## High-level design - What are we solving? (e.g.: geographic databases backed by PostGIS with Redis caches) - What are our use-cases, stories? (e.g.: adding/resizing caches and read replicas; load balancing queries) - What kind of outage do we want to address? (e.g.: loss of individual node, pod, volume) - What are our *non-features*, the things we don't want to address? 
(e.g.: loss of datacenter/zone; differentiating between read and write queries; <br/> cache invalidation; upgrading to newer major versions of Redis, PostGIS, PostgreSQL) .debug[[k8s/operators-design.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/operators-design.md)] --- ## Low-level design - What Custom Resource Definitions do we need? (one, many?) - How will we store configuration information? (part of the CRD spec fields, annotations, other?) - Do we need to store state? If so, where? - state that is small and doesn't change much can be stored via the Kubernetes API <br/> (e.g.: leader information, configuration, credentials) - things that are big and/or change a lot should go elsewhere <br/> (e.g.: metrics, bigger configuration files like GeoIP databases) .debug[[k8s/operators-design.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/operators-design.md)] --- class: extra-details ## What can we store via the Kubernetes API? - The API server stores most Kubernetes resources in etcd - Etcd is designed for reliability, not for performance - If our storage needs exceed what etcd can offer, we need to use something else: - either directly - or by extending the API server <br/>(for instance by using the aggregation layer, like the [metrics server](https://github.com/kubernetes-incubator/metrics-server) does) .debug[[k8s/operators-design.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/operators-design.md)] --- ## Bottom-up approach - Start with existing Kubernetes resources (Deployment, Stateful Set...)
- Run the system in production - Add scripts, automation, to facilitate day-to-day operations - Turn the scripts into an operator - Pros: simpler to get started; reflects actual use-cases - Cons: can result in convoluted designs requiring extensive refactor .debug[[k8s/operators-design.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/operators-design.md)] --- ## General idea - Our operator will watch its CRDs *and associated resources* - Drawing state diagrams and finite state automata helps a lot - It's OK if some transitions lead to a big catch-all "human intervention" - Over time, we will learn about new failure modes and add to these diagrams - It's OK to start with CRD creation / deletion and prevent any modification (that's the easy POC/MVP we were talking about) - *Presentation* and *validation* will help our users (more on that later) .debug[[k8s/operators-design.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/operators-design.md)] --- ## Challenges - Reacting to infrastructure disruption can seem hard at first - Kubernetes gives us a lot of primitives to help: - Pods and Persistent Volumes will *eventually* recover - Stateful Sets give us easy ways to "add N copies" of a thing - The real challenges come with configuration changes (i.e., what to do when our users update our CRDs) - Keep in mind that [some] of the [largest] cloud [outages] haven't been caused by [natural catastrophes], or even code bugs, but by configuration changes [some]: https://www.datacenterdynamics.com/news/gcp-outage-mainone-leaked-google-cloudflare-ip-addresses-china-telecom/ [largest]: https://aws.amazon.com/message/41926/ [outages]: https://aws.amazon.com/message/65648/ [natural catastrophes]: https://www.datacenterknowledge.com/amazon/aws-says-it-s-never-seen-whole-data-center-go-down .debug[[k8s/operators-design.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/operators-design.md)] --- 
## Configuration changes - It is helpful to analyze and understand how Kubernetes controllers work: - watch resource for modifications - compare desired state (CRD) and current state - issue actions to converge state - Configuration changes will probably require *another* state diagram or FSA - Again, it's OK to have transitions labeled as "unsupported" (i.e. reject some modifications because we can't execute them) .debug[[k8s/operators-design.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/operators-design.md)] --- ## Tools - CoreOS / RedHat Operator Framework [GitHub](https://github.com/operator-framework) | [Blog](https://developers.redhat.com/blog/2018/12/18/introduction-to-the-kubernetes-operator-framework/) | [Intro talk](https://www.youtube.com/watch?v=8k_ayO1VRXE) | [Deep dive talk](https://www.youtube.com/watch?v=fu7ecA2rXmc) | [Simple example](https://medium.com/faun/writing-your-first-kubernetes-operator-8f3df4453234) - Kubernetes Operator Pythonic Framework (KOPF) [GitHub](https://github.com/nolar/kopf) | [Docs](https://kopf.readthedocs.io/) | [Step-by-step tutorial](https://kopf.readthedocs.io/en/stable/walkthrough/problem/) - Mesosphere Kubernetes Universal Declarative Operator (KUDO) [GitHub](https://github.com/kudobuilder/kudo) | [Blog](https://mesosphere.com/blog/announcing-maestro-a-declarative-no-code-approach-to-kubernetes-day-2-operators/) | [Docs](https://kudo.dev/) | [Zookeeper example](https://github.com/kudobuilder/frameworks/tree/master/repo/stable/zookeeper) - Kubebuilder (Go, very close to the Kubernetes API codebase) [GitHub](https://github.com/kubernetes-sigs/kubebuilder) | [Book](https://book.kubebuilder.io/) .debug[[k8s/operators-design.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/operators-design.md)] --- ## Validation - By default, a CRD is "free form" (we can put pretty much anything we want in it) - When creating a CRD, we can provide an OpenAPI v3 schema 
([Example](https://github.com/amaizfinance/redis-operator/blob/master/deploy/crds/k8s_v1alpha1_redis_crd.yaml#L34)) - The API server will then validate resources created/edited with this schema - If we need a stronger validation, we can use a Validating Admission Webhook: - run an [admission webhook server](https://kubernetes.io/docs/reference/access-authn-authz/extensible-admission-controllers/#write-an-admission-webhook-server) to receive validation requests - register the webhook by creating a [ValidatingWebhookConfiguration](https://kubernetes.io/docs/reference/access-authn-authz/extensible-admission-controllers/#configure-admission-webhooks-on-the-fly) - each time the API server receives a request matching the configuration, <br/>the request is sent to our server for validation .debug[[k8s/operators-design.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/operators-design.md)] --- ## Presentation - By default, `kubectl get mycustomresource` won't display much information (just the name and age of each resource) - When creating a CRD, we can specify additional columns to print ([Example](https://github.com/amaizfinance/redis-operator/blob/master/deploy/crds/k8s_v1alpha1_redis_crd.yaml#L6), [Docs](https://kubernetes.io/docs/tasks/access-kubernetes-api/custom-resources/custom-resource-definitions/#additional-printer-columns)) - By default, `kubectl describe mycustomresource` will also be generic - `kubectl describe` can show events related to our custom resources (for that, we need to create Event resources, and fill the `involvedObject` field) - For scalable resources, we can define a `scale` sub-resource - This will enable the use of `kubectl scale` and other scaling-related operations .debug[[k8s/operators-design.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/operators-design.md)] --- ## About scaling - It is possible to use the HPA (Horizontal Pod Autoscaler) with CRDs - But it is not always 
desirable - The HPA works very well for homogeneous, stateless workloads - For other workloads, your mileage may vary - Some systems can scale across multiple dimensions (for instance: should we increase the number of replicas, or the number of shards?) - If autoscaling is desired, the operator will have to make complex decisions (example: Zalando's Elasticsearch Operator ([Video](https://www.youtube.com/watch?v=lprE0J0kAq0))) .debug[[k8s/operators-design.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/operators-design.md)] --- ## Versioning - As our operator evolves over time, we may have to change the CRD (add, remove, change fields) - Like every other resource in Kubernetes, [custom resources are versioned](https://kubernetes.io/docs/tasks/access-kubernetes-api/custom-resources/custom-resource-definition-versioning/) - When creating a CRD, we need to specify a *list* of versions - Versions can be marked as `storage` and/or `served` .debug[[k8s/operators-design.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/operators-design.md)] --- ## Stored version - Exactly one version has to be marked as the `storage` version - As the name implies, it is the one that will be stored in etcd - Resources in storage are never converted automatically (we need to read and re-write them ourselves) - Yes, this means that etcd can hold resources stored with different versions at the same time - Our code needs to handle all the versions that still exist in storage .debug[[k8s/operators-design.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/operators-design.md)] --- ## Served versions - By default, the Kubernetes API will serve resources "as-is" (using their stored version) - It will assume that all versions are compatible storage-wise (i.e.
that the spec and fields are compatible between versions) - We can provide [conversion webhooks](https://kubernetes.io/docs/tasks/access-kubernetes-api/custom-resources/custom-resource-definition-versioning/#webhook-conversion) to "translate" requests (the alternative is to upgrade all stored resources and stop serving old versions) .debug[[k8s/operators-design.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/operators-design.md)] --- ## Operator reliability - Remember that the operator itself must be resilient (e.g.: the node running it can fail) - Our operator must be able to restart and recover gracefully - Do not store state locally (unless we can reconstruct that state when we restart) - As indicated earlier, we can use the Kubernetes API to store data: - in the custom resources themselves - in other resources' annotations .debug[[k8s/operators-design.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/operators-design.md)] --- ## Beyond CRDs - CRDs cannot use custom storage (e.g. for time series data) - CRDs cannot support arbitrary subresources (like logs or exec for Pods) - CRDs cannot support protobuf (for faster, more efficient communication) - If we need these things, we can use the [aggregation layer](https://kubernetes.io/docs/concepts/extend-kubernetes/api-extension/apiserver-aggregation/) instead - The aggregation layer proxies all requests below a specific path to another server (this is used e.g. by the metrics server) - [This documentation page](https://kubernetes.io/docs/concepts/extend-kubernetes/api-extension/custom-resources/#choosing-a-method-for-adding-custom-resources) compares the features of CRDs and API aggregation ??? 
:EN:- Guidelines to design our own operators :FR:- Comment concevoir nos propres opérateurs .debug[[k8s/operators-design.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/operators-design.md)] --- class: pic .interstitial[] --- name: toc-writing-an-tiny-operator class: title Writing a tiny operator .nav[ [Previous part](#toc-designing-an-operator) | [Back to table of contents](#toc-part-4) | [Next part](#toc-owners-and-dependents) ] .debug[(automatically generated title slide)] --- # Writing a tiny operator - Let's look at a simple operator - It does have: - a control loop - resource lifecycle management - basic logging - It doesn't have: - CRDs (and therefore, resource versioning, conversion webhooks...) - advanced observability (metrics, Kubernetes Events) .debug[[k8s/operators-example.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/operators-example.md)] --- ## Use case *When I push code to my source control system, I want that code to be built into a container image, and that image to be deployed in a staging environment. I want each branch/tag/commit (depending on my needs) to be deployed into its specific Kubernetes Namespace.* - The last part requires the CI/CD pipeline to manage Namespaces - ...And permissions in these Namespaces - This requires elevated privileges for the CI/CD pipeline (read: `cluster-admin`) - If the CI/CD pipeline is compromised, this can lead to cluster compromise - This can be a concern if the CI/CD pipeline is part of the repository (which is the default modus operandi with GitHub, GitLab, Bitbucket...)
.debug[[k8s/operators-example.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/operators-example.md)] --- ## Proposed solution - On-demand creation of Namespaces - Creation is triggered by creating a ConfigMap in a dedicated Namespace - Namespaces are set up with basic permissions - Credentials are generated for each Namespace - Credentials only give access to their Namespace - Credentials are exposed back to the dedicated configuration Namespace - Operator implemented as a shell script .debug[[k8s/operators-example.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/operators-example.md)] --- ## An operator in shell... Really? - About 150 lines of code (including comments + white space) - Performance doesn't matter - operator work will be a tiny fraction of CI/CD pipeline work - uses *watch* semantics to minimize control plane load - Easy to understand, easy to audit, easy to tweak .debug[[k8s/operators-example.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/operators-example.md)] --- ## Show me the code! - GitHub repository and documentation: https://github.com/jpetazzo/nsplease - Operator source code: https://github.com/jpetazzo/nsplease/blob/main/nsplease.sh .debug[[k8s/operators-example.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/operators-example.md)] --- ## Main loop ```bash info "Waiting for ConfigMap events in $REQUESTS_NAMESPACE..." kubectl --namespace $REQUESTS_NAMESPACE get configmaps \ --watch --output-watch-events -o json \ | jq --unbuffered --raw-output '[.type,.object.metadata.name] | @tsv' \ | while read TYPE NAMESPACE; do debug "Got event: $TYPE $NAMESPACE" ``` - `--watch` to avoid actively polling the control plane - `--output-watch-events` to get the type of each event, and disregard e.g.
resource deletion or modification - `jq` to process JSON easily .debug[[k8s/operators-example.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/operators-example.md)] --- ## Resource ownership - Check out the `kubectl patch` commands - The created Namespace "owns" the corresponding ConfigMap and Secret - This means that deleting the Namespace will delete the ConfigMap and Secret - We don't need to watch for object deletion to clean up - Cleanup will be done automatically, even if the operator is not running .debug[[k8s/operators-example.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/operators-example.md)] --- ## Why no CRD? - It's easier to create a ConfigMap (e.g. with a `kubectl create configmap --from-literal=` one-liner) - We don't need the features of CRDs (schemas, printer columns, versioning...) - “This CRD could have been a ConfigMap!” (this doesn't mean *all* CRDs could be ConfigMaps, of course) .debug[[k8s/operators-example.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/operators-example.md)] --- ## Discussion - A lot of simple yet efficient logic can be implemented in shell scripts - These can be used to prototype more complex operators - Not all use-cases require CRDs (keep in mind that correct CRDs are *a lot* of work!)
- If the algorithms are correct, shell performance won't matter at all (but it will be difficult to keep a resource cache in shell) - Improvement idea: this operator could generate *events* (visible with `kubectl get events` and `kubectl describe`) .debug[[k8s/operators-example.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/operators-example.md)] --- class: pic .interstitial[] --- name: toc-owners-and-dependents class: title Owners and dependents .nav[ [Previous part](#toc-writing-an-tiny-operator) | [Back to table of contents](#toc-part-4) | [Next part](#toc-events) ] .debug[(automatically generated title slide)] --- # Owners and dependents - Some objects are created by other objects (example: pods created by replica sets, themselves created by deployments) - When an *owner* object is deleted, its *dependents* are deleted (this is the default behavior; it can be changed) - We can delete a dependent directly if we want (but generally, the owner will recreate another right away) - An object can have multiple owners .debug[[k8s/owners-and-dependents.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/owners-and-dependents.md)] --- ## Finding out the owners of an object - The owners are recorded in the field `ownerReferences` in the `metadata` block .lab[ - Let's create a deployment running `nginx`: ```bash kubectl create deployment yanginx --image=nginx ``` - Scale it to a few replicas: ```bash kubectl scale deployment yanginx --replicas=3 ``` - Once it's up, check the corresponding pods: ```bash kubectl get pods -l app=yanginx -o yaml | head -n 25 ``` ] These pods are owned by a ReplicaSet named yanginx-xxxxxxxxxx. .debug[[k8s/owners-and-dependents.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/owners-and-dependents.md)] --- ## Listing objects with their owners - This is a good opportunity to try the `custom-columns` output! 
.lab[ - Show all pods with their owners: ```bash kubectl get pod -o custom-columns=\ NAME:.metadata.name,\ OWNER-KIND:.metadata.ownerReferences[0].kind,\ OWNER-NAME:.metadata.ownerReferences[0].name ``` ] Note: the `custom-columns` option should be one long option (without spaces), so the lines should not be indented (otherwise the indentation will insert spaces). .debug[[k8s/owners-and-dependents.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/owners-and-dependents.md)] --- ## Deletion policy - When deleting an object through the API, three policies are available: - foreground (API call returns after all dependents are deleted) - background (API call returns immediately; dependents are scheduled for deletion) - orphan (the dependents are not deleted) - When deleting an object with `kubectl`, this is selected with `--cascade`: - `--cascade=true` deletes all dependent objects (default) - `--cascade=false` orphans dependent objects - since kubectl 1.20, the policy can also be named explicitly: `--cascade=background` (same as `true`), `--cascade=foreground`, `--cascade=orphan` (same as `false`) .debug[[k8s/owners-and-dependents.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/owners-and-dependents.md)] --- ## What happens when an object is deleted - It is removed from the list of owners of its dependents - If, for one of these dependents, the list of owners becomes empty ... - if the policy is "orphan", the object stays - otherwise, the object is deleted .debug[[k8s/owners-and-dependents.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/owners-and-dependents.md)] --- ## Orphaning pods - We are going to delete the Deployment and Replica Set that we created - ... without deleting the corresponding pods!
.lab[ - Delete the Deployment: ```bash kubectl delete deployment -l app=yanginx --cascade=false ``` - Delete the Replica Set: ```bash kubectl delete replicaset -l app=yanginx --cascade=false ``` - Check that the pods are still here: ```bash kubectl get pods ``` ] .debug[[k8s/owners-and-dependents.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/owners-and-dependents.md)] --- class: extra-details ## When and why would we have orphans? - If we remove an owner and explicitly instruct the API to orphan dependents (like on the previous slide) - If we change the labels on a dependent, so that it's not selected anymore (e.g. change the `app: yanginx` in the pods of the previous example) - If a deployment tool that we're using does these things for us - If there is a serious problem within API machinery or other components (i.e. "this should not happen") .debug[[k8s/owners-and-dependents.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/owners-and-dependents.md)] --- ## Finding orphan objects - We're going to output all pods in JSON format - Then we will use `jq` to keep only the ones *without* an owner - And we will display their name .lab[ - List all pods that *do not* have an owner: ```bash kubectl get pod -o json | jq -r " .items[] | select(.metadata.ownerReferences|not) | .metadata.name" ``` ] .debug[[k8s/owners-and-dependents.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/owners-and-dependents.md)] --- ## Deleting orphan pods - Now that we can list orphan pods, deleting them is easy .lab[ - Add `| xargs kubectl delete pod` to the previous command: ```bash kubectl get pod -o json | jq -r " .items[] | select(.metadata.ownerReferences|not) | .metadata.name" | xargs kubectl delete pod ``` ] As always, the [documentation](https://kubernetes.io/docs/concepts/workloads/controllers/garbage-collection/) has useful extra information and pointers. ??? 
:EN:- Owners and dependents :FR:- Liens de parenté entre les ressources .debug[[k8s/owners-and-dependents.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/owners-and-dependents.md)] --- class: pic .interstitial[] --- name: toc-events class: title Events .nav[ [Previous part](#toc-owners-and-dependents) | [Back to table of contents](#toc-part-4) | [Next part](#toc-finalizers) ] .debug[(automatically generated title slide)] --- # Events - Kubernetes has an internal structured log of *events* - These events are ordinary resources: - we can view them with `kubectl get events` - they can be viewed and created through the Kubernetes API - they are stored in the Kubernetes default database (e.g. etcd) - Most components will generate events to let us know what's going on - Events can be *related* to other resources .debug[[k8s/events.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/events.md)] --- ## Reading events - `kubectl get events` (or `kubectl get ev`) - Can use `--watch` ⚠️ Looks like `tail -f`, but events aren't necessarily sorted! - Can use `--all-namespaces` - Cluster events (e.g. related to nodes) are in the `default` namespace - Viewing all "non-normal" events: ```bash kubectl get ev -A --field-selector=type!=Normal ``` (as of Kubernetes 1.19, `type` can be either `Normal` or `Warning`) .debug[[k8s/events.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/events.md)] --- ## Reading events (take 2) - When we use `kubectl describe` on an object, `kubectl` retrieves the associated events .lab[ - See the API requests happening when we use `kubectl describe`: ```bash kubectl describe service kubernetes --namespace=default -v6 >/dev/null ``` ] .debug[[k8s/events.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/events.md)] --- ## Generating events - This is rarely (if ever) done manually (i.e. by crafting some YAML) - But controllers (e.g.
operators) need this! - It's not mandatory, but it helps with *operability* (e.g. when we `kubectl describe` a custom resource, we will see associated events) .debug[[k8s/events.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/events.md)] --- ## ⚠️ Work in progress - "Events" can be: - "old-style" events (in the core API group, aka `v1`) - "new-style" events (in API group `events.k8s.io`) - See [KEP 383](https://github.com/kubernetes/enhancements/blob/master/keps/sig-instrumentation/383-new-event-api-ga-graduation/README.md), in particular this [comparison between old and new APIs](https://github.com/kubernetes/enhancements/blob/master/keps/sig-instrumentation/383-new-event-api-ga-graduation/README.md#comparison-between-old-and-new-apis) .debug[[k8s/events.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/events.md)] --- ## Experimenting with events - Let's create an event related to a Node, based on [k8s/event-node.yaml](https://github.com/jpetazzo/container.training/tree/master/k8s/event-node.yaml) .lab[ - Edit `k8s/event-node.yaml` - Update the `name` and `uid` of the `involvedObject` - Create the event with `kubectl create -f` - Look at the Node with `kubectl describe` ] .debug[[k8s/events.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/events.md)] --- ## Experimenting with events - Let's create an event related to a Pod, based on [k8s/event-pod.yaml](https://github.com/jpetazzo/container.training/tree/master/k8s/event-pod.yaml) .lab[ - Create a pod - Edit `k8s/event-pod.yaml` - Edit the `involvedObject` section (don't forget the `uid`) - Create the event with `kubectl create -f` - Look at the Pod with `kubectl describe` ] .debug[[k8s/events.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/events.md)] --- ## Generating events in practice - In Go, use an `EventRecorder` provided by the `kubernetes/client-go` library - [EventRecorder
interface](https://github.com/kubernetes/client-go/blob/release-1.19/tools/record/event.go#L87) - [kubebuilder book example](https://book-v1.book.kubebuilder.io/beyond_basics/creating_events.html) - It will take care of formatting / aggregating events - To get an idea of what to put in the `reason` field, check [kubelet events]( https://github.com/kubernetes/kubernetes/blob/release-1.19/pkg/kubelet/events/event.go) .debug[[k8s/events.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/events.md)] --- ## Cluster operator perspective - Events are kept 1 hour by default - This can be changed with the `--event-ttl` flag on the API server - On very busy clusters, events can be kept on a separate etcd cluster - This is done with the `--etcd-servers-overrides` flag on the API server - Example: ``` --etcd-servers-overrides=/events#http://127.0.0.1:12379 ``` ??? :EN:- Consuming and generating cluster events :FR:- Suivre l'activité du cluster avec les *events* .debug[[k8s/events.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/events.md)] --- class: pic .interstitial[] --- name: toc-finalizers class: title Finalizers .nav[ [Previous part](#toc-events) | [Back to table of contents](#toc-part-4) | [Next part](#toc-) ] .debug[(automatically generated title slide)] --- # Finalizers - Sometimes, we.red[¹] want to prevent a resource from being deleted: - perhaps it's "precious" (holds important data) - perhaps other resources depend on it (and should be deleted first) - perhaps we need to perform some clean up before it's deleted - *Finalizers* are a way to do that! .footnote[.red[¹]The "we" in that sentence generally stands for a controller. 
<br/>(We can also use finalizers directly ourselves, but it's not very common.)] .debug[[k8s/finalizers.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/finalizers.md)] --- ## Examples - Prevent deletion of a PersistentVolumeClaim which is used by a Pod - Prevent deletion of a PersistentVolume which is bound to a PersistentVolumeClaim - Prevent deletion of a Namespace that still contains objects - When a LoadBalancer Service is deleted, make sure that the corresponding external resource (e.g. NLB, GLB, etc.) gets deleted.red[¹] - When a CRD gets deleted, make sure that all the associated resources get deleted.red[²] .footnote[.red[¹²]Finalizers are not the only solution for these use-cases.] .debug[[k8s/finalizers.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/finalizers.md)] --- ## How do they work? - Each resource can have a list of `finalizers` in its `metadata`, e.g.: ```yaml kind: PersistentVolumeClaim apiVersion: v1 metadata: name: my-pvc annotations: ... finalizers: - kubernetes.io/pvc-protection ``` - If we try to delete a resource that has at least one finalizer: - the resource is *not* deleted - instead, its `deletionTimestamp` is set to the current time - we are merely *marking the resource for deletion* .debug[[k8s/finalizers.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/finalizers.md)] --- ## What happens next?
- The controller that added the finalizer is supposed to:

  - watch for resources with a `deletionTimestamp`

  - execute the necessary clean-up actions

  - then remove the finalizer

- The resource is deleted once all the finalizers have been removed

  (there is no timeout, so this could take forever)

- Until then, the resource can be used normally

  (but no further finalizer can be *added* to the resource)

.debug[[k8s/finalizers.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/finalizers.md)]
---

## Finalizers in review

Let's review the examples mentioned earlier.

For each of them, we'll see if there are other (perhaps better) options.

.debug[[k8s/finalizers.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/finalizers.md)]
---

## Volume finalizer

- Kubernetes applies the following finalizers:

  - `kubernetes.io/pvc-protection` on PersistentVolumeClaims

  - `kubernetes.io/pv-protection` on PersistentVolumes

- This prevents removing them when they are in use

- Implementation detail: the finalizer is present *even when the resource is not in use*

- When the resource is ~~deleted~~ marked for deletion, the controller will check if the finalizer can be removed

  (Perhaps to avoid race conditions?)

.debug[[k8s/finalizers.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/finalizers.md)]
---

## Namespace finalizer

- Kubernetes applies a finalizer named `kubernetes`

- It prevents removing the namespace if it still contains objects

- *Can we remove the namespace anyway?*

  - remove the finalizer

  - delete the namespace

  - force deletion

- It *seems to work* but, in fact, the objects in the namespace still exist

  (and they will re-appear if we re-create the namespace)

See [this blog post](https://www.openshift.com/blog/the-hidden-dangers-of-terminating-namespaces) for more details about this.
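
.debug[[k8s/finalizers.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/finalizers.md)]
---

## Namespace finalizer: a toy model

Why do the objects survive? Here is a toy model in Go. All types and names are hypothetical, and this is *not* how the API server is implemented. It assumes (consistently with the behavior described above) that namespaced objects are stored independently of their Namespace object. Deleting a namespace that has a finalizer only marks it; removing the last finalizer deletes the Namespace object itself; but nothing in that path deletes what the namespace contained.

```go
package main

import (
	"fmt"
	"time"
)

// Namespace is a hypothetical, heavily simplified stand-in for a
// Kubernetes Namespace: a name, finalizers, and a deletion timestamp.
type Namespace struct {
	Name              string
	Finalizers        []string
	DeletionTimestamp *time.Time
}

// Store is a toy model of API server storage (an assumption, not the real
// implementation): objects are kept under their namespace name,
// independently of the Namespace object itself.
type Store struct {
	Namespaces map[string]*Namespace
	Objects    map[string][]string // namespace name -> object names
}

// DeleteNamespace mimics the finalizer rule: with finalizers, only mark.
func (s *Store) DeleteNamespace(name string) {
	ns, ok := s.Namespaces[name]
	if !ok {
		return
	}
	if len(ns.Finalizers) == 0 {
		delete(s.Namespaces, name)
		return
	}
	if ns.DeletionTimestamp == nil {
		now := time.Now()
		ns.DeletionTimestamp = &now
	}
}

// RemoveFinalizer drops one finalizer; when none remain on a namespace
// marked for deletion, the Namespace object is actually deleted.
func (s *Store) RemoveFinalizer(name, finalizer string) {
	ns, ok := s.Namespaces[name]
	if !ok {
		return
	}
	kept := ns.Finalizers[:0]
	for _, f := range ns.Finalizers {
		if f != finalizer {
			kept = append(kept, f)
		}
	}
	ns.Finalizers = kept
	if len(ns.Finalizers) == 0 && ns.DeletionTimestamp != nil {
		delete(s.Namespaces, name)
	}
}

// List returns the objects of a namespace, if the namespace exists.
func (s *Store) List(name string) []string {
	if _, ok := s.Namespaces[name]; !ok {
		return nil
	}
	return s.Objects[name]
}

func main() {
	s := &Store{
		Namespaces: map[string]*Namespace{
			"demo": {Name: "demo", Finalizers: []string{"kubernetes"}},
		},
		Objects: map[string][]string{"demo": {"pod/web", "secret/db"}},
	}

	s.DeleteNamespace("demo") // only marks it: the finalizer is still there
	fmt.Println("marked:", s.Namespaces["demo"].DeletionTimestamp != nil)

	// "Force" path: remove the finalizer by hand instead of letting the
	// namespace controller delete the contents first.
	s.RemoveFinalizer("demo", "kubernetes")
	fmt.Println("namespace gone:", s.Namespaces["demo"] == nil)

	// Re-creating the namespace makes the leftover objects visible again.
	s.Namespaces["demo"] = &Namespace{Name: "demo"}
	fmt.Println("objects:", s.List("demo"))
}
```

Running it prints `marked: true`, then `namespace gone: true`, then `objects: [pod/web secret/db]`: the "forced" deletion only removed the Namespace object, not its contents.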
.debug[[k8s/finalizers.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/finalizers.md)]
---

## LoadBalancer finalizer

- Scenario:

  We run a custom controller to implement provisioning of LoadBalancer Services.

  When a Service with type=LoadBalancer is deleted, we want to make sure that the corresponding external resources are properly deleted.

- Rationale for using a finalizer:

  Normally, we would watch and observe the deletion of the Service; but if the Service is deleted while our controller is down, we could "miss" the deletion and forget to clean up the external resource.

  The finalizer ensures that we will "see" the deletion and clean up the external resource.

.debug[[k8s/finalizers.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/finalizers.md)]
---

## Counterpoint

- We could also:

  - Tag the external resources <br/>(to indicate which Kubernetes Service they correspond to)

  - Periodically reconcile them against Kubernetes resources

  - If a Kubernetes resource no longer exists, delete the external resource

- This doesn't have to be a *pre-delete* hook

  (unless we store important information in the Service, e.g. as annotations)

.debug[[k8s/finalizers.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/finalizers.md)]
---

## CRD finalizer

- Scenario:

  We have a CRD that represents a PostgreSQL cluster.

  It provisions StatefulSets, Deployments, Services, Secrets, ConfigMaps.

  When the CRD is deleted, we want to delete all these resources.

- Rationale for using a finalizer:

  Same as before; we could observe the CRD, but if it is deleted while the controller isn't running, we would miss the deletion, and the other resources would keep running.

.debug[[k8s/finalizers.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/finalizers.md)]
---

## Counterpoint

- We could use the same technique as described before

  (tag the resources with e.g. annotations, to associate them with the CRD)

- Even better: we could use `ownerReferences`

  (this feature is *specifically* designed for that use case!)

.debug[[k8s/finalizers.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/finalizers.md)]
---

## CRD finalizer (take two)

- Scenario:

  We have a CRD that represents a PostgreSQL cluster.

  It provisions StatefulSets, Deployments, Services, Secrets, ConfigMaps.

  When the CRD is deleted, we want to delete all these resources.

  We also want to store a final backup of the database.

  We also want to update final usage metrics (e.g. for billing purposes).

- Rationale for using a finalizer:

  We need to take some actions *before* the resources get deleted, not *after*.

.debug[[k8s/finalizers.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/finalizers.md)]
---

## Wrapping up

- Finalizers are a great way to:

  - prevent deletion of a resource that is still in use

  - have a "guaranteed" pre-delete hook

- They can also be (ab)used for other purposes

- Code spelunking exercise:

  *check where finalizers are used in the Kubernetes code base and why!*

???

:EN:- Using "finalizers" to manage resource lifecycle
:FR:- Gérer le cycle de vie des ressources avec les *finalizers*

.debug[[k8s/finalizers.md](https://github.com/jpetazzo/container.training/tree/2022-02-enix/slides/k8s/finalizers.md)]